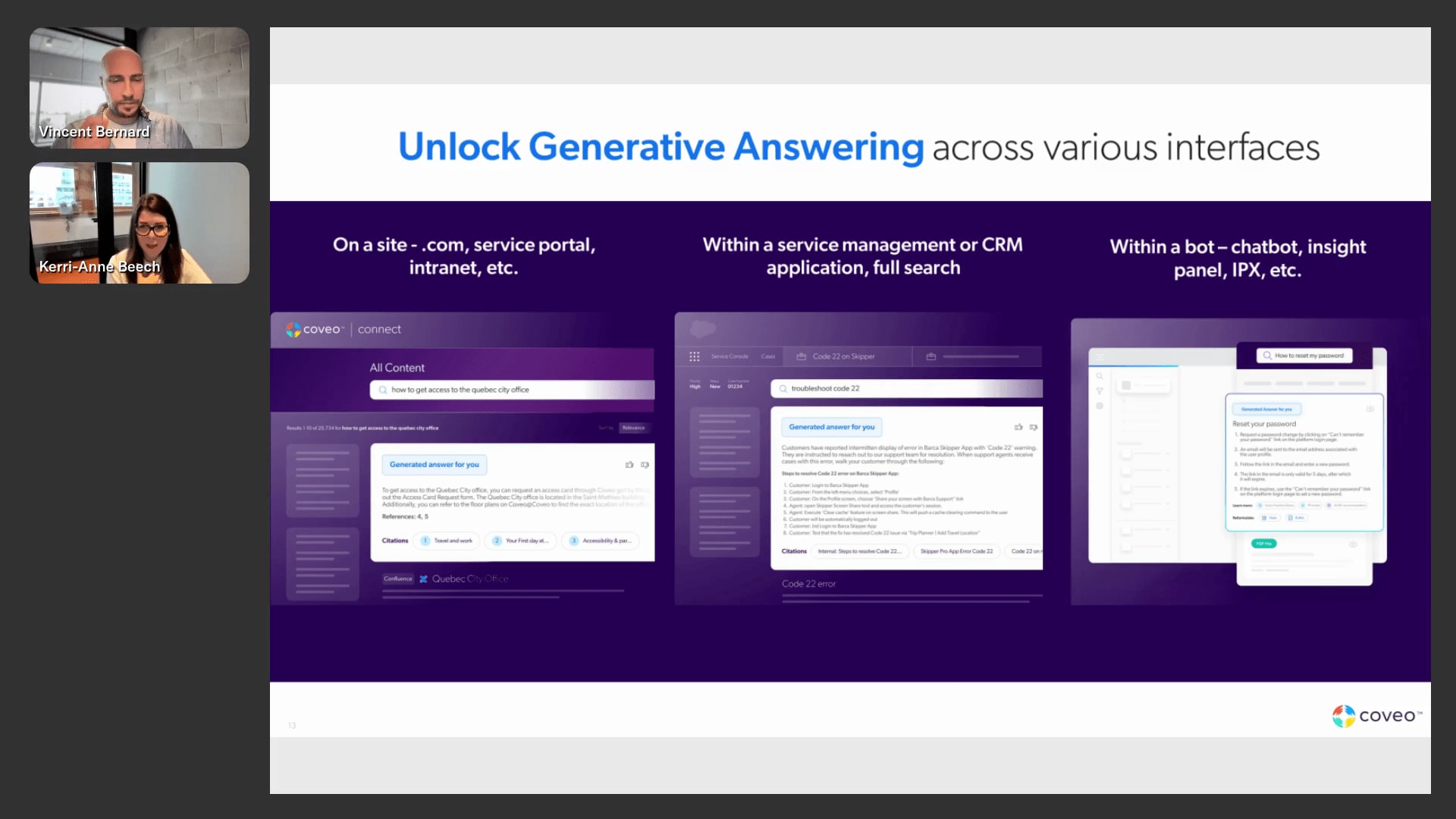

Alright. Hello, everybody, and welcome to another CMSWire webinar. My name is Jared Hornsby, and I'm here today to help answer any general or technical questions you may have. Before we get started, I'd like to welcome you to today's webinar, "How GenAI Is Redefining the Digital Customer Experience." Here's today's timing breakdown. These numbers are rough, so please don't hold us to them. If you have a question at any point during today's webinar, please don't hesitate to submit it using the Q&A tab on the right side of your screen. You can also chat with your fellow attendees by clicking the public chat, also on the right side of your screen. And we have some excellent surveys available for you at the end, so stay tuned for those.

Here's a bit about us at CMSWire. We were founded in 2003, and we cover the primary topics you see here on this slide. You can read more of our content and register for upcoming conferences and webinars by visiting cmswire.com. And here's a bit about our sponsor, Coveo. I'd like to thank them for sponsoring today's webinar. Coveo is the world's leading cloud-based relevance platform, acting as an intelligence layer that injects relevance into the digital workplace with AI-powered solutions. We have two excellent speakers from Coveo today, and sharing with us first is Carrie Anne Beach, senior product marketing manager at Coveo. Carrie Anne, take it away.

Awesome. Thank you so much, Jared. Hi, everyone. My name's Carrie Anne Beach, senior PMM here at Coveo. I'm joined by my colleague, Vincent Bernard. Do you want to introduce yourself?

Hey, everyone. My name is Vincent, live from Quebec City. I'm a director in R&D, responsible for showcasing the best of the product. Nice to meet you all.

And today we have a really exciting topic, as Jared just mentioned.
We're going to be discussing the opportunities and challenges around GenAI and how you can create an end-to-end customer experience across all of your sites. First, a little extra about Coveo for those of you who don't know us. We're an AI search and generative experience platform, as Jared mentioned. We have over a decade of AI experience, and our ML models power AI search, recommendations, generative answering, and unified personalization across use cases, including websites, commerce, service, and the workplace. So without further ado, let's jump right in.

It's no secret that GenAI is a hot topic lately. In fact, 89% of executives rank AI and GenAI as a top-three technology priority for 2024. Companies all over the world are racing to understand how and where it can be applied, as well as the impact it will have not only on their organization but on the digital customer experience as a whole. That's because GenAI is changing the way customers interact with your sites, and it's raising the bar for personalized, contextual, and seamless interactions across all of them.

But as I'm sure many of you know, there are multiple types of GenAI; it's a pretty big bucket. Many of you, if not everyone on this call, have probably used it in your day-to-day job or personally for content creation, to get past writer's block, to summarize large documents, or even for coding. The type of GenAI I want to talk about today is specifically generative answering, which is a bit of a mix between content discovery and conversational AI. It's a particularly interesting one for CX leaders looking at their holistic website strategy, because customers really just want fast, easy answers from your site. What you might not know is that generative answering is actually rooted in search. So I want you to take a moment to think about your website search experience.
How quickly can users find and discover your content? Is that content personalized to their actions and intent? Can you support multiple user journeys? I bring this up because site search is often overlooked, but it's a critical piece of your digital customer experience, and it can determine how effective GenAI will be. Site search is the foundation of your web strategy, the base on which all the richer experiences, including GenAI, are built. So if your basic site search experience is lacking, that will limit the success of GenAI. Search is also the glue that connects all of your sites to drive an end-to-end digital customer experience, especially if you can surface content that lives outside your CMS or DXP. And, of course, it's the voice of the customer: users signal their intent in that search box. So the content you deliver, whether through search results, recommendations, or generative answers, needs to be tailored to their experience and their intent.

So what does all of this mean for you? You might be asking how you can use GenAI to amplify your website and deliver a seamless digital customer experience. First, it changes the way customers search and interact with your site. It lets them ask more complex questions and receive answers in natural language, which is starting to blur the line between in-store and online experiences, so you can meet rising customer expectations. You can also contextualize those responses, because it's not enough to have good content out there in the world; it needs to be surfaced to the right person at the right time, based on their actions and intent. In today's competitive economy, you need to earn the right to sell to customers. You need to show that you understand them.
And this will increase self-service across your sites, leading to a better overall experience. Users get instant responses to their questions, along with citations and recommended sources if they want to learn more, so they don't need to bounce between different sites and documents to find the answers they need. It's all right there in front of them, in real time. And of course, you want to apply this across the entire user journey: not just your .com, but your support sites and your commerce sites. Think holistically about everywhere customers interact with you.

But customers aren't the only ones who benefit. Your teams will also see significant efficiency gains, with less manual work and the ability to scale. You can also get more out of your content, because generated answers can summarize, merge, or expand on the most relevant pieces of content across documents, so you can present it in new ways and do more with less.

So there's clearly a lot of potential and opportunity around GenAI, but there are also challenges and risks if it isn't implemented effectively. First and foremost, there are privacy concerns. Public GenAI platforms like ChatGPT may store and learn from your data, which could leave your confidential information open to leaks. You want to maintain ownership of your data and make sure it isn't used to train these public models. Then there's the matter of maintaining permissions: making sure users only get answers from content they have permission to see. You don't want internal content surfacing on your public sites, or, if you're using GenAI internally, you don't want employees gaining access to confidential HR documents they shouldn't see.
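
Permission-aware answering boils down to filtering retrieved passages against what the current user may read before anything is sent to the LLM. The sketch below assumes each chunk carries a hypothetical `allowed_groups` set copied from its source document's access rights; it illustrates the idea and is not how Coveo actually enforces permissions.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Chunk:
    """A retrievable passage plus the groups allowed to read its source document."""

    doc_id: str
    text: str
    # Hypothetical field: access groups copied from the source system at indexing time.
    allowed_groups: frozenset = field(default_factory=frozenset)


def visible_chunks(chunks, user_groups):
    """Keep only chunks the user may read, so nothing else ever reaches the LLM prompt."""
    groups = set(user_groups)
    return [c for c in chunks if c.allowed_groups & groups]


if __name__ == "__main__":
    index = [
        Chunk("faq-1", "Returns are accepted within 30 days.", frozenset({"public"})),
        Chunk("hr-7", "Internal salary bands.", frozenset({"hr"})),
    ]
    print([c.doc_id for c in visible_chunks(index, {"public"})])  # ['faq-1']
```

Filtering before prompt construction, rather than after generation, means restricted text can never leak into a generated answer, even in paraphrased form.
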
Then there's the accuracy and factuality of your answers. Hallucinations can damage your brand's trust, among other implications. You also need to consider content: a lack of content reduces the quality and relevance of generative answers, so you want to draw on content across a variety of sources, not just one or two individual ones. And of course, there are cost concerns. Some companies choose to build their own custom models in hopes of keeping costs down. But as this slide shows, there's a lot to consider, and it can be extremely costly if not engineered properly, not to mention resource-intensive to maintain. Finally, you need to think about the overall experience: how do you generate relevant answers at scale and support multiple user journeys that understand intent? An AI platform can help you address many of these challenges and remove those barriers to entry. So I'm going to pass it to Vincent to share some important considerations that will help you leverage GenAI across your organization and, of course, show you what it looks like here at Coveo.

Thanks, Carrie Anne. First, we'll start by addressing these pain points. The major pattern we see on the market right now for resolving these issues is clearly the RAG pattern: retrieval-augmented generation. What we have here is an example of a platform that does RAG. It's a complete example with a lot of components, and I'll walk you through each part so you can understand why it matters and how to build it if you need to. In the left section, the first part is what we call connectivity. You need it if you want the right content, if you want that content to be traceable and refreshed often, and also if you want to use content that wasn't available during the training phase of these models.
For instance, you might have an intranet where the content is secured: it's available to specific users, and not everybody can see everything. So you need a layer of connectivity. The box on the left shows all the features you can have at the connectivity level, such as features that enhance the content and features that secure the content and its access rights.

Next, and you've probably heard these terms if you're interested in the RAG pattern, come vector databases, snippets, or chunks. This is the storage layer, where you keep the passages of your information. In some cases, like here at Coveo, we also keep the full documents, because we believe having both textual (lexical) search and semantic search is the right approach. So here, the documents are enhanced with vectorization, meaning the embeddings of those documents.

The middle section is where the magic happens. You take your documents and pass them to your LLM. This is really the RAG pattern: a prompt that says, "Please generate an answer based on the documents I've retrieved. These are the documents." In addition, you may want business rules that, for specific queries or within specific time frames, influence the output of the LLM.

On the right side, we have the front end. GenAI takes different forms: you've probably seen chatbots, or Perplexity-style interfaces, for instance, where the results and the generated answer are stacked one on top of the other. And the most important part, in my personal opinion, is the feedback loop.
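
The feedback loop Vincent singles out can be illustrated with a toy reranker: every click on a result for a given query is recorded, and on the next search for that query the clicked document gets a boost that grows with its click count, so a document users keep picking from low in the list drifts upward. The scoring formula (base score plus `weight * log(1 + clicks)`) and the class and method names are assumptions for illustration; Coveo's actual models learn from far richer behavioral signals.

```python
import math
from collections import defaultdict


class ClickFeedbackRanker:
    """Toy feedback loop: documents users click for a query move up next time."""

    def __init__(self, weight=1.0):
        self.weight = weight
        self.clicks = defaultdict(int)  # (normalized query, doc_id) -> click count

    @staticmethod
    def _norm(query):
        # Case- and whitespace-insensitive query key
        return " ".join(query.lower().split())

    def record_click(self, query, doc_id):
        self.clicks[(self._norm(query), doc_id)] += 1

    def rerank(self, query, results):
        """Reorder (doc_id, base_score) pairs by base score plus a click boost."""
        q = self._norm(query)

        def boosted(item):
            doc_id, base = item
            # .get avoids inserting zero entries into the defaultdict
            return base + self.weight * math.log1p(self.clicks.get((q, doc_id), 0))

        return sorted(results, key=boosted, reverse=True)


if __name__ == "__main__":
    ranker = ClickFeedbackRanker()
    results = [("a", 2.0), ("b", 1.9), ("c", 1.5)]
    for _ in range(5):
        ranker.record_click("kayak", "c")
    print([d for d, _ in ranker.rerank("kayak", results)])  # ['c', 'a', 'b']
```

The logarithm grows slowly, so a handful of clicks nudges a document up without a single popular result swamping the base relevance score; a production system would also decay old clicks and correct for position bias.
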
When users are using the interface, you want to track what's happening: track the queries, understand their intent, see where they're clicking and which documents are relevant. Then we loop that back into the section at the bottom. This is a little more advanced, and most RAG platforms don't offer it: we learn from user behavior to rank results better for the next user. So if people searching for a specific query keep clicking on a document that's low in the list, at some point we'll start pushing that document up, or even suggesting questions based on what happened. And that loop keeps going. As long as new content is feeding through your connectivity and you have that loop, you'll have an experience that gets better and better by itself. So that's an overview of a really complete RAG pattern.

Here's what we've seen on the market. When we started investigating Relevance Generative Answering, we had clients who decided to walk with us through the beta phase while we built that feature. Some of them were already building a RAG pattern on their own, so they had two stacks. On one side, they had search, and on the other side, the right side, they had another stack: the RAG pattern, their question-answering mechanism. When you build two stacks separately, say because you want to move fast on a prototype, you build your vector database, wire up your LLM, and chunk your documents, as on the right side. Meanwhile, you still have your search engine on the other side: every large or midsize enterprise has search, whether for its intranet or its websites. So at that point you have two different stacks: your new prototype stack and your search on the left side.
Having two different stacks creates all sorts of friction. You have redundant information, and you need to refresh both copies of it. You have two different infrastructures and two different things to administer. It's obviously not the ideal situation. What we've come up with instead looks like this. You have your intent box, as we call it, one box that does it all. When you search for something, the query goes through a stack that merges lexical search, meaning very precise search based on exactly what's typed, with semantic search using embeddings and extraction. The documents retrieved from search are then fed to the LLM. The advantage is that you have a single stack, a single platform to administer all the different experiences. It also lets you deploy these experiences at different points in your organization, whether you want to add them to your CMS, your intranet, or any other platform. So that's the architecture we're proposing on the market. Then, without further ado, I'll start a demo so we can walk through what we have; I think it's the most interesting part.

Just before the demo: build versus buy is a question we often get. So why would you build? A good reason to build is if you have the skills: a large team of AI experts, with AI as your core competency. Another is if you have a very niche need, which we can talk about, and no scalability concerns. Let's say it's a very specific thing you do for your back end; then building is fine. You should buy if you don't have many AI experts and you want to avoid building something and then having to maintain and scale it yourself. Buy, too, if you want something enterprise-wide and truly innovative.
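
The single-stack flow described above (one query runs through both lexical and semantic retrieval, and the merged results feed the LLM) can be sketched in two steps. Merging is shown with Reciprocal Rank Fusion, a common, generic technique for combining ranked lists; Coveo did not say it uses RRF. The prompt wording, the `business_rules` parameter, and the function names are likewise illustrative assumptions.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Merge ranked lists of document IDs into one list (Reciprocal Rank Fusion).

    Each list adds 1 / (k + rank) to every document it contains, so documents
    ranked well by both lexical and semantic search rise to the top.
    """
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first; ties broken by document ID for determinism.
    return sorted(scores, key=lambda d: (-scores[d], d))


def build_grounded_prompt(question, ranked_passages, business_rules=()):
    """Assemble a RAG prompt; `ranked_passages` is a list of (doc_id, text) pairs.

    Numbering the sources lets the model cite them as [1], [2], ... in its answer.
    """
    lines = [
        "Answer the question using only the numbered sources below.",
        "Cite sources as [n]. If the sources do not contain the answer, say so.",
        *business_rules,  # hypothetical extra instructions for certain queries
        "",
        "Sources:",
    ]
    for i, (doc_id, text) in enumerate(ranked_passages, start=1):
        lines.append(f"[{i}] ({doc_id}) {text}")
    lines += ["", f"Question: {question}", "Answer:"]
    return "\n".join(lines)


if __name__ == "__main__":
    lexical = ["d1", "d2", "d3"]   # ranking from keyword (lexical) search
    semantic = ["d3", "d1", "d4"]  # ranking from embedding (semantic) search
    fused = reciprocal_rank_fusion([lexical, semantic])
    print(fused)  # ['d1', 'd3', 'd2', 'd4']
    texts = {"d1": "Sample passage one.", "d3": "Sample passage three."}
    top = [(d, texts[d]) for d in fused if d in texts][:2]
    print(build_grounded_prompt("How are permissions handled?", top))
```

Because both retrieval modes feed one fused list, there is a single index to refresh and a single ranking to tune, which is the operational point of the one-stack design.
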
With that said, Carrie Anne, we have one last slide before the demo, and it covers the key to the generative return on investment you can expect.

Yes, exactly. Before we get into the demo, which I'm very excited about, we wanted to leave you with three key things to remember when implementing GenAI that will help you generate revenue, a checklist of sorts. As you've seen today, the foundation of generative answering still lies in search. It's not just about finding the right documents: you need to retrieve the most relevant sections, or chunks, of those documents, and have the right content across sources in a unified index. The quality of the content within those documents is also extremely important; I really can't overstate that. GenAI is only as good as the information feeding it. So focus on short, clear content, and continuously test and optimize your answers, fine-tuning them with subject matter experts. This is not a set-and-forget technology. You'll want a content and testing strategy in place, with someone looking at everything holistically. And speaking of holistically, you need to consider the end-to-end experience and how to thread GenAI across your organization rather than implementing it in a silo, so customers have consistent experiences and can find the right information no matter where they are in their journey or which site they're coming from. Finally, if you understand these considerations, have a solid action plan in place, and have a great, scalable solution, you'll be able to reap all of these benefits and deploy generative answering across the buyer journey: your .com, your support sites, and even internally, on your HR portals, to help your internal teams self-serve, truly driving that cohesive end-to-end experience.
So with that, let's dive into the demo.

Alright, let me share my screen here. Yep, this window. The first thing I'll share with you is our Barco ecosystem. Barco is a fictional brand we built here at Coveo to showcase the product. This website runs on Adobe AEM. The important thing to notice is that all the components you see, the giant search box and the result set at the bottom with facets, are built with Atomic, our JavaScript-based framework. It's also available in TypeScript through Headless. These components are deployable across all types of interfaces. We have them integrated in many CRMs and in different CMSs, such as Sitecore and Adobe, and we're compatible with everything out there, so this kind of interface is easily reproducible no matter where you are.

So without further ado, the first thing we want to do is click the search box and start a query: "What is bark?" The first thing you'll notice is that at the top, the generative component starts explaining what's in the results below. It's important to note that the query first retrieves the results. Then, based on the best passages from the best results, we send them to an LLM, which generates a summary. There are a few features I want to highlight here. First, traceability of information: everything used for the generative part is viewable here through citations. Each document has a snippet of text that was extracted and used to generate this specific answer. Let's continue, just to show the depth of a full platform like Coveo. When you start typing something, you get query suggestions, powered by previous users' successful queries on the system. So it's really a type-ahead experience like you'd have on Google.
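
Query suggestions "powered by previous users' successful queries" can be approximated by counting past queries that ended in a click and completing the user's prefix with the most frequent ones. Treating a click as "success" and the `QuerySuggester` name are assumptions for this sketch, not Coveo's implementation.

```python
from collections import Counter


class QuerySuggester:
    """Type-ahead suggestions drawn from past queries that led to a result click."""

    def __init__(self):
        self.successful = Counter()  # normalized query -> number of successful uses

    @staticmethod
    def _norm(text):
        return " ".join(text.lower().split())

    def log_query(self, query, clicked):
        """Count a query only if it led to a click (our stand-in for success)."""
        if clicked:
            self.successful[self._norm(query)] += 1

    def suggest(self, prefix, limit=5):
        """Return up to `limit` past successful queries starting with `prefix`."""
        p = prefix.lower().lstrip()
        matches = [(q, n) for q, n in self.successful.items() if q.startswith(p)]
        # Most frequently successful first; alphabetical order breaks ties.
        matches.sort(key=lambda qn: (-qn[1], qn[0]))
        return [q for q, _ in matches[:limit]]


if __name__ == "__main__":
    s = QuerySuggester()
    s.log_query("How to configure permissions", clicked=True)
    s.log_query("how to build a model", clicked=True)
    print(s.suggest("how to"))
```

Counting only successful queries keeps dead-end searches out of the suggestions, which is also why longer, question-style queries start appearing once users adopt them.
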
And then here are the generative components and all the different result sets. We also offer something a little different: if you switch to smart snippets here, you'll see another type of response, one that's actually authored. For specific questions, if you're in a highly standardized industry or you need to provide regulated answers, you can take a different approach that still gives you semantic search capability, but instead of generating an answer, you select exactly the text you want, which is also very interesting. This comes with "People also ask" questions, which let you see other relevant documents within your ecosystem. If we go down here and click on one of these pages, you'll see a regular page authored by Brian. At the bottom, we're also showcasing some recommendations. Why am I talking about recommendations? We strongly believe all these features together create a unified experience that makes your CMS more engaging for users. You have recommendations, query suggestions, and generation, and together they create a very interactive experience that's personalized based on the CMS personalization engine you use. If you're using Optimizely or Adobe Target, you can inject those profiles here so the overall experience is personalized.

Now I'll show you a different experience. That was an informational website with a lot of features; let's go to something a little more live. The first implementation we did with GenAI was docs.coveo.com, our own documentation. The reason I want to show you this interface is that afterward, I'll show you the back end and how it's built.
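
The smart-snippet option described here (serve exact, authored text for certain questions instead of generating one) can be modeled as a lookup that runs before generation. This sketch matches questions with `difflib.SequenceMatcher` string similarity as a cheap stand-in for the semantic matching Vincent mentions; the threshold, the function names, and the `generate` callback are hypothetical.

```python
from difflib import SequenceMatcher


def normalize(text):
    """Lowercase, collapse whitespace, and drop a trailing question mark."""
    return " ".join(text.lower().split()).rstrip("?")


def answer(question, authored, generate, threshold=0.85):
    """Return (source, text): an authored snippet if a curated question matches
    closely enough, otherwise whatever the `generate` callback produces."""
    q = normalize(question)
    best_text, best_ratio = None, 0.0
    for curated_q, text in authored.items():
        ratio = SequenceMatcher(None, q, normalize(curated_q)).ratio()
        if ratio > best_ratio:
            best_text, best_ratio = text, ratio
    if best_text is not None and best_ratio >= threshold:
        return "authored", best_text  # exact, pre-approved wording
    return "generated", generate(question)  # fall back to the LLM path


if __name__ == "__main__":
    curated = {"What is the return policy?": "Returns are accepted within 30 days of purchase."}
    print(answer("what is the return policy", curated, generate=lambda q: "(generated)"))
```

Checking curated answers first guarantees that regulated questions always receive the approved text, while everything else still benefits from generation.
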
The first thing I want to show is the feedback loop I was talking about at the beginning. Now that we have a generative experience, people have started to realize that the interface is intelligent and responds to better questions. So they've become more generous with their time and their keywords. You can see here that if I type "how," I get longer questions. These weren't there if you visited the website a year ago. So the generative feature really changed the way users interact with the system, and the feedback loop takes that back and gives you better suggestions in the future.

So let's try "explain how Coveo uses permissions," which is about security. This is a more advanced version of the component, with a "Show more" option to avoid taking up too much screen real estate. It also supports rich formatting, so you can see bold text, and we'll have things like code highlighting. Very interestingly, it explains that if you need SharePoint or Salesforce, this is how you do it, and all the citations point to the documents. What I find very interesting is the RAG approach here, and a good demonstration is to click a facet value like Sitecore or CMS. If you select that filter, the documents at the bottom change to explain how Coveo works with Sitecore and websites, and this changes the answer. You can also see the speed, which is very important in my opinion. With this kind of experience, users expect things on a website to go fast; that's how the internet works. So having a reactive implementation like this really helps. To build something like this, if you have a mature search platform, the only thing you need to do is log in to your Coveo administration console.
Once you're inside your administration console, the first thing we'll ask you to do is add some content. You can see a bunch of our content sources here. As on the architecture slide, we have a very long list of connectors. So whether your content is in SharePoint, ServiceNow, SAP, Salesforce, or Sitecore, we have connectors that can grab that content and bring it into Coveo Cloud. Most of them don't need any configuration; it's a point-and-click UI. You enter your user, select which part of the platform you want to index, and we bring that content into Coveo. Once the content is in Coveo, the next logical step is to build a machine learning model. Here you have a set of different machine learning models. Since we're talking about generative AI today, I'll focus on this one, but note that we have somewhere between eleven and fifteen different models. The semantic encoder is one we think is really required for a good experience, but let's focus on the generative experience. We click on it, and a quick UI tells you what's going to happen and how your search interface will be modified. At this point, we ask you to select your content. This is the kind of thing that saves a developer or an engineer a lot of time: grabbing the content, chunking it, and preselecting it so that a specific interface has access to a specific part of the content. So here, for instance, let's select the Coveo documentation website. Right off the bat, I'm able to see how many items are there. Interestingly, we lose a few documents, because part of the documentation is written in French. You may have noticed my slight accent.
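
Selecting content for a generative model, as in this console walkthrough, amounts to scoping documents (by source, by language, since only English is used for now, and optionally by metadata) and then splitting them into chunks for embedding. Field names such as `source`, `language`, and `body`, and the word-window sizes, are assumptions for this sketch; real pipelines often chunk by tokens or by document structure.

```python
def select_scope(documents, source, language="en", **metadata):
    """Choose the documents a generative model may use.

    `documents` are dicts with hypothetical keys 'source', 'language', 'body'
    plus arbitrary metadata fields; every keyword filter must match exactly.
    """
    return [
        d for d in documents
        if d.get("source") == source
        and d.get("language") == language
        and all(d.get(k) == v for k, v in metadata.items())
    ]


def chunk_words(text, max_words=200, overlap=40):
    """Split text into overlapping word windows ("chunks") for embedding and retrieval."""
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be >= 0 and smaller than max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reaches the end of the text
    return chunks


if __name__ == "__main__":
    docs = [
        {"source": "docs", "language": "en", "section": "admin", "body": "one two three four five"},
        {"source": "docs", "language": "fr", "section": "admin", "body": "un deux trois"},
    ]
    scoped = select_scope(docs, "docs", section="admin")  # the French page is excluded
    print([c for d in scoped for c in chunk_words(d["body"], max_words=3, overlap=1)])
```

The overlap keeps a sentence that straddles a window boundary retrievable from either neighboring chunk.
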
We're a French-speaking company from Canada, and there are a few documents and parts of the website that we decided to keep in French. Right now, we only use English for the generative approach, but support for French, German, and a few other languages is coming later this year. That's another good reason to buy rather than build: we keep evolving and improving the whole thing as we go. You can also be more specific. Right now I selected the whole website source, but you could add filters and select specific parts using metadata, scoping it down to specific metadata values. Once that's done, the only thing you need to do is proceed: you name your model, say "CMSWire," and start the build process. At this point it starts building. It says several hours, but for the dataset I selected, it will take a few minutes. Then the model is ready to associate with a search interface, and at that point it starts generating answers.

Why are we so in love with this platform and the fact that it can serve multiple experiences? Once you have your content, your traffic, and your ML model built, you can reuse them across multiple interfaces. The experience you see here, "how to use permissions," is also available in our support experience. If you start creating a case at Coveo, meaning a support ticket, you get deflection: we read your case and start generating answers based on the content. We use the same public content, but also internal cases, KB articles, and other content that isn't necessarily publicly available. We also decided to use a feature called in-product experience, which is basically a widget you can drop in all over the place. Here in the platform, you have that little question mark icon.
If you click on it, you'll see a condensed search interface. It looks a bit like a chatbot, but a better version, if you ask me. And it's contextualized right away with what you're doing. Here I'm in the model section of the platform, so the first things it suggests are creating or updating a model configuration, or building RGA, which is very popular for us these days. Those are the default suggestions. But if you ask something like "What is a query pipeline?", you get the full generative experience you saw on the website, available here too. The same documents and the same machine learning models can be deployed across multiple interfaces. That's where you gain an economy of scale: you reuse the documents, part of the content, and the behavioral information you gather on your website. You can see we have lots of users looking to do many different things, and they leave traces. We can reuse that here, so a bunch of different suggestions appear as well. That's it for my quick tour. I think we still have a bit of time for questions, so Carrie Anne, back to you.

Awesome. Thank you so much for that really great demo. Jared, will you be leading us through the Q&A portion?

Yes, let's get into some Q&A. Up first: when implementing these new AI approaches, does it matter what CMS I'm using?

Not really. Coveo is agnostic; we're an API-first platform. What you've seen today is the simplest form of what we do. I've used the out-of-the-box components and interfaces, but you can deploy these components directly across a variety of CMSs, or use the API-first approach if you prefer. More importantly, all modern CMSs expose their content through REST or GraphQL endpoints.
You can even write a small piece of code to push your content to Coveo if you want to do it securely, with permissions. But yes, we've done them all: Sitecore, Adobe, Contentful, Optimizely. All the modern CMSs have been indexed, and we've provided experiences on all of them.

Yeah, and we're an agnostic solution. As Vincent just showed, we have connectors to the leading applications, not only CMSs: it could also be SharePoint, Confluence, or any of the enterprise applications you're currently using. No matter where the content is, we'll find it, and no matter where the search interface is, we can deploy a search front end on it.

Excellent. Next: what type of large language model do you use?

That's a famous question. Like everyone else on the market, we don't want to reinvent the wheel. For the current build of Coveo Relevance Generative Answering you saw, we're using GPT, specifically GPT-3.5 Turbo. People often ask why we're not using GPT-4 or GPT-4o. The reason is that most of the intelligence here is handled by the platform. At the output, we simply ask the LLM to summarize everything we've done up to that point, so we don't necessarily need all the advanced capabilities of GPT-4. Also note that if you want to build something like this, GPT-4 is far more expensive than GPT-3.5: it's slower and it costs more. If you're receiving millions of queries a day on your website, it might not be economical to use GPT-4. That's a lesson we learned. We built the platform so that we can unplug the LLM and swap in another one, and we've been investigating other vendors as well. But right now, we use OpenAI's model, deployed through Azure.
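
Being able to "unplug and change the LLM" is a matter of keeping retrieval and prompting independent of any one model vendor. The sketch below uses a `typing.Protocol` as that seam; `AnswerModel`, `EchoModel`, and `AnswerGenerator` are hypothetical names, and a real adapter would wrap whichever hosted model API you use.

```python
from __future__ import annotations

from typing import Protocol


class AnswerModel(Protocol):
    """The only thing the pipeline needs from an LLM: prompt in, text out."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Offline stand-in for tests; a real adapter would call a hosted LLM here."""

    def complete(self, prompt: str) -> str:
        return prompt.splitlines()[-1]


class AnswerGenerator:
    """Prompt construction is fixed; the model is injected and can be swapped."""

    def __init__(self, model: AnswerModel):
        self.model = model

    def answer(self, question: str, passages: list[str]) -> str:
        sources = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
        prompt = (
            "Summarize an answer using only these sources.\n"
            f"{sources}\n"
            f"Question: {question}"
        )
        return self.model.complete(prompt)


if __name__ == "__main__":
    gen = AnswerGenerator(EchoModel())
    print(gen.answer("What is RAG?", ["passage"]))  # Question: What is RAG?
```

Since the model only has to summarize already-retrieved passages, swapping in a cheaper or faster model touches one adapter class rather than the retrieval stack.
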
That's how we've set it up behind the scenes, and that's the overall architecture.

Gotcha. Another question: what type of content can you use for generative answering?

I'd say everything. Basically, every piece of content that can go into the platform can be used. What we see most is web pages; that's the common case. But when you index a document with Coveo, we convert the body of that document into an HTML representation. That means we can handle PDFs, Excel files, PowerPoint, Word documents, chats, and forums. Whatever the content, we normalize it into our own HTML representation, and that's what we use to feed the LLM. So I'd say almost everything.

Perfect. Up next: how does Coveo facilitate semantic search and content indexing?

For content indexing, connectivity is how we do it. We have prebuilt connectors that let you pump content, securely, from all over the place. We have web scraping components, web connectors, and a sitemap connector. And we have twenty-five years of experience connecting to everything, so we have a lot of experience here. If you want to do it yourself, fine; the UI is simple and it's easy to do. Otherwise, we have professional services that are very good at the crazy things, like going in-house and doing joins in your databases to send the data to Coveo. So we've done it all, and I think that's one of the main differentiators of the platform: we're good at finding enterprise content and bringing it in.
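
Coveo converts every indexed document (PDF, Office files, forums, and so on) into an HTML representation before feeding the LLM. Assuming you have such HTML, a typical next step in a pipeline is extracting the readable text before chunking; here is a minimal sketch using only Python's standard-library `html.parser`. It is an illustration, not Coveo's converter.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect visible text from HTML, skipping <script> and <style> contents."""

    SKIP = {"script", "style"}

    def __init__(self):
        super().__init__()  # convert_charrefs=True by default, so &amp; becomes &
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.parts.append(data.strip())


def html_to_text(html):
    """Return the document's visible text as a single space-joined string."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)


if __name__ == "__main__":
    page = "<h1>Title</h1><script>var x = 1;</script><p>Body <b>bold</b></p>"
    print(html_to_text(page))  # Title Body bold
```

Normalizing every format to one representation first means chunking and embedding only ever have to handle a single input type.
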
Regarding semantic search, maybe I went fast on the architecture slide at the beginning. When we index your content, ML models vectorize it, meaning we take each word and position it in a vector space so it's available for semantic search. Then, when a user types something, let's say something that doesn't quite make sense, like "mustard kayak," we compare those words with the words in our documents. For "kayak," I might find something. For "mustard," no. But "yellow" exists, and "yellow" is close to "mustard." So at some point, I'll give you back a yellow kayak, because I was able to find "kayak" as a lexical match and "yellow" as a semantically close term for "mustard." So that's how we do it: we do lexical matching, but we also inject a boost based on the semantic match between your query and the documents we've indexed.

Gotcha. And I think that's going to be our time for today. Thank you, Vincent. Thank you, Carrie Anne. And a major shout-out to Coveo for sponsoring today's webinar. Last but not least, thanks to everybody in the audience for your time and attention. Today's webinar has been recorded, so you'll receive a link to the recording in a follow-up email; keep an eye on your inbox for that. In the meantime, thank you all once again, and we'll see you at the next one. Thank you. Bye-bye.
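
The "mustard kayak" example from the Q&A can be reproduced with a toy scorer: exact term matches count fully, and a query term with no exact match earns a smaller boost if some document term sits close to it in embedding space (cosine similarity). The three-dimensional vectors are invented for illustration; real systems learn embeddings from data and typically embed whole passages rather than single words. The weight and threshold are likewise assumptions.

```python
import math

# Hand-made toy embeddings (assumption); a real system uses a trained encoder.
EMBEDDINGS = {
    "mustard": (0.9, 0.1, 0.0),
    "yellow": (0.8, 0.2, 0.1),
    "blue": (0.0, 0.2, 0.9),
    "kayak": (0.1, 0.9, 0.2),
}


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def score(query, doc, semantic_weight=0.5, threshold=0.8):
    """Lexical matches add 1.0 each; an unmatched query term adds a semantic boost
    when some document term is close to it (cosine >= threshold)."""
    q_terms, d_terms = query.lower().split(), set(doc.lower().split())
    total = 0.0
    for term in q_terms:
        if term in d_terms:
            total += 1.0  # lexical match
        elif term in EMBEDDINGS:
            best = max((cosine(EMBEDDINGS[term], EMBEDDINGS[t])
                        for t in d_terms if t in EMBEDDINGS), default=0.0)
            if best >= threshold:
                total += semantic_weight * best  # semantic boost
    return total


if __name__ == "__main__":
    for doc in ("yellow kayak", "blue kayak"):
        print(doc, round(score("mustard kayak", doc), 3))
```

With these toy vectors, "yellow kayak" outscores "blue kayak" for the query "mustard kayak" because "mustard" and "yellow" point in nearly the same direction (cosine of roughly 0.98), while "blue" does not.
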

Your guide to driving end-to-end digital customer experiences with GenAI

Digital customer experience is becoming a decisive competitive advantage, and GenAI is at the heart of rising customer expectations: 89% of executives rank AI and GenAI among their top three technology priorities for 2024.

GenAI not only transforms how customers engage with your content, but also enhances the entire user journey, enabling personalization at scale. This evolution starts with the basics of search relevance.

Watch this session to:

- Gain valuable insights into the challenges and opportunities around GenAI, and the importance of search

- Drive an end-to-end digital customer experience with AI Search, Recommendations, Personalization and Generative Answering

- See a live demo of Coveo’s AI platform, including how Relevance Generative Answering is changing the game for customer self-service.

