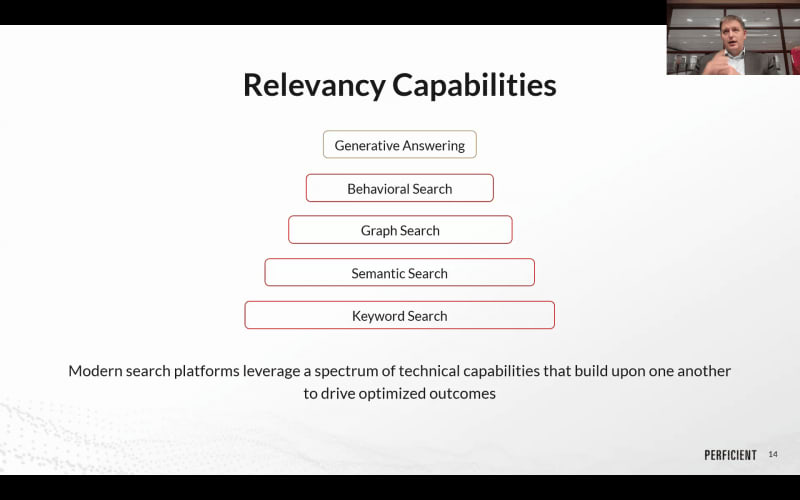

Alright. Perfect. Looks like people are already joining the webinar. Welcome, everyone. We're just going to wait about one minute before we start officially just to to give everyone a chance to join this call. Alright. Perfect. Hi, everyone. Thank you so much for joining us for today's webinar, harnessing CX and EX, unlocking scalable success with generative AI. My name is Patricia Patsy Liang, and I'm the product marketing manager for Workplace here at Coveo. I'm thrilled to be joined by our wonderful partners from Perficient, Eric Immerman, a practice director for search and content, as well as Zach Fisher, a senior solutions architect. Together, we're going to explore how innovative technologies like AI search and generative answering really help leading enterprises deliver efficient and empowering digital experiences to both customers and employees. In today's session, we're also going to take some time to reflect on the general journey that enterprises have undertaken with GenAI over this past year and share a few key insights from twenty twenty four. We're also gonna cover some tested strategies for leveraging SecurGen AI, to improve both customer and employee experiences, and drive real business outcomes. And, of course, we're gonna also feature some real world examples from some of our favorite customers. Eric and Zach, is there anything you wanna say before we start? Welcome, everybody. Excited to to chat with everyone today. Wonderful. Thanks for your help. Yeah. And, of course, today's webinar is going to be recorded. You'll be receiving the recording of it in the next twenty four hours. And please feel free to ask questions throughout the webinar as well, and we'll be answering them at the very end. Alright, Eric and Zach. I figured we would start with this quote from IBM. Eric, can you tell us a little bit more about it? Yeah. So, I mean, the the general sentiment in this quote, right, is that fundamentally, ChatGPT came out two years ago roughly now, and kind of showed the world what was possible with generative AI. It got everybody's minds spinning and thinking and saying, I can generate information now. And so, fundamentally, that's not just in a corporate world. That's in a consumer world. Right? I, as a user, can go to ChatChippy Tea or Perplexity or in Bing or Google. I get generated answers back. Quite simply, the minimum experience someone has had, it becomes what they start expect or sorry. The experience that they've had becomes the minimum for what they expect going forward. Right? And so if I have seen ChatGPT, and I'm now gonna start talking to organizations and saying, well, why are you giving me ten blue links? Or why aren't you giving me a summarized answer? Why aren't you giving me information? Just because expectations have been set. And so now we're at the point where everybody tries to, in some ways, catch up and align with and meet those expectations, that were were set by more of the public sphere sort of companies. Yeah. It's true. Expectations are at an all time high when it comes to digital experiences. That makes a lot of sense. And so twenty twenty four has been a pivotal year for generative AI. Eric and Zach, looking back, what are some key learnings that have emerged from your implementations? Zach, you wanna go first, and I'll jump in? Yeah. Sure. So, the the general AI bubble, right, making it a a sort of kitchen table conversation, there was a lot of very, very high level of expectation that intelligent, intelligent artificial intelligence, like, with the same same capability of a individual user is here, and we can we can just kind of start using off the shelf AI models to to think like a person. I mean, I think over the next couple of years, it became clear that as far as conversation, as far as insights, there's there's a lot that these sort of off the shelf models can do, but they're not foolproof. Right? Twenty twenty three and twenty twenty four have been a lot of learning about what hallucination means in in, in the use of a large language model and, how an enter how how much risk an enterprise is willing to take on to mitigate some of the some of the effectiveness of what you can do with a large language model compared to compared to those risks. A lot of a lot of our customers were really gung ho in the beginning about converting a whole number of different processes and sort of customer facing, customer facing capabilities with a generative AI solution and kinda dialed back when they realized they had a little bit less control over over that without either building a model themselves or having better ways of kind of controlling what those outputs would be. So a lot of customers have been, you know, not cooling on the technology, but looking for more more controllable and scalable ways of of using it more effectively. And the offering from Cameo has been a a really useful tool for for kinda building out some of those use cases that are that are really kind of safe. But we'll talk more about that later. Yeah. That makes sense. That makes sense. I would just jump in here and say there there's two other trends I'm seeing as well. One is, you know, initial twenty twenty four, twenty twenty three was kind of the time of the POC, the pilot. You could you could very clearly build something that looked really slick and fast and worked really well, for a demo for, you know, ninety percent of the way there for ten percent of the effort. Alright? Twenty twenty four is more been finding out that, well, that last ten percent to make it truly production ready takes ninety percent of the effort. Alright? All the edge cases, all of the the confirmation of security teams and and allowances to do this and making sure that it's not you know, you've everybody's heard the horror stories of selling a car for a dollar or, you know, telling someone to eat rocks for their digestive health. Right? Like, all of these sorts of things can happen, and so there's a long, long tail of challenges that people have to work through and bring this to production. The other thing that I think folks have really started realizing this last year is the power and need for contextualization. Right? So we as humans do a really good job of being able to contextualize a question when we're giving an answer. Right? Who are we talking to? What their motivations may be? What, you know, circumstance we're in? What this person should know about. Right? LLMs organically don't have a lot of that contextualization. So even things such as, you know, somebody asks, you know, what is my, you know, what is my salary, right, at a company, and you use an LM to answer that, but has access to everyone's salary. Right? Hopefully, it's gonna get you yours, but there's not necessarily a ton of blocks inside the LLM itself to say, oh, don't give this other person's salary or this other number or anything like that. And so, really, what we've had to deal with a lot is how do I both bring in context from a security perspective, make sure we're restricting to and only giving the right information, but, also, how do I give context from a a user perspective and match that up with the information that an organization may wanna share? You know? What product are they using? What version? What operating system? Where were they in that product when I was going to give an answer? Right? All of that matters because just using a tech example, asking how do I reset my password may be a very different question if you have seventy different products all with their own ways of resetting their password, and I've kinda gotta give an answer based on what the user is looking for specifically. So that contextualization challenge has come first, you know, front and center and continues to be evolved on, but that's a big part of where I think a lot of that ninety ten struggle people have had on, you know, great POC, but then in reality, it struggles is because bringing context becomes hard. Yeah. So I guess you would say security and personalization are top of mind for a lot of organizations then. And GenAI, for the sake of GenAI, it's not going to be a cure all for everything. Correct. For sure. That's wonderful. Well, thank you for this. And so we're really curious to know, when did you realize the differences between the different types of generative AI, that are out there, and how has generative answering and search become an essential tool? So it's a it's a good question. So generative answering in in search and and let's take a step back. Many way many times and and I think we'll go into this a little bit later maybe. Many times, the way that folks are using generative answering with search is via an approach called retrieval augmented generation or RAG for short. It's a a harsh word, but it it gets the point across. Everybody knows it. Right? And, generally, the the high level idea here is instead of letting a large language model just answer based on what it's learned from the Internet or whatever its training set is, you in first, do a search for information that is canonical or defined or trusted within an organization. You take snippets of that content, and you send it as context to the large language model and say, read all of this context, answer based on this context, and cite your sources. Right? And so, fundamentally, what we're doing now is we're we're treating a large language model almost as a reasoning or interpretation layer on top of content that you're giving it rather than the content that's more broadly learned. Right? This, from from our perspective, has become kind of the next level of search. Right? You know? Search, I'd I'd add keyword. I I went up to behavioral, and now I've got generative where I'm actually not just giving ten blue links. I'm summarizing all of this together and giving it back. Right? But this goes quite beyond search in terms of how RAG can work and large language models can work. Right? It it's it's broader than a search problem. Right? If I'm if I'm, using, GitHub Copilot, for example, right, there's rag search going on in the background there the whole time where it's looking up snippets of code or other classes or other things that are gonna happen, but there's no search bar I'm typing in. It's an interface that I'm using for interacting with this data. Right? The same thing can happen in your CX and EX where I can start getting answers generated not from somebody going into a search bar, but from the context on the user doing a query and saying, hey. You have an update for your case. Here's all the latest information and things that you should try. Right? Where we're just using context and information to call RAG and pull back information within an interface or an experience rather than treating it as a purely search kind of expanded sort of capability. One of the things that the one of the main goals of search is, right, to find the right in to find the right information for the right user. One of the things that generative answering really does is kind of allows that answer to be recontextualized and Yeah. Kind of put into language or parlance that the user understands. Right? So the the common common common issue is we maybe give the user the right the right results, but the user can't interpret that result correctly. Right? And generative answering lets us kinda summarize it for different use cases, and in different ways. Right? So it can be that extra layer on top of not just finding the right answer, but making sure that it's presented in a way that, you know, different different people or different learning styles or even, like, frame for a different use case can kind of be understood, and and customized. And that that's that's pretty neat. It's another layer of it's another layer of, providing understanding to people who are looking for looking for answers to questions. For sure. And I feel like at the rate that enterprise data is also growing too, it's growing at such a large exponential rate. There's so many things we need to keep on top of. And so having something in a palatable, easy to read, single interface, getting, like, the SparkNotes version of something is always desirable and helpful, especially when we're always doing fifty different things at once. So, yeah, I understand. It's even it's even more helpful when you can target the way that it's presented to the user. Right? Yeah. An engineer asking a question may have a very different need for information than a procurement officer asking that question. Right? Where, maybe we're looking for feature function on the procurement officer side. The engineer may be looking for details and technical architecture diagrams and everything else. Right? That contextualization I mentioned before is the ability to not only bring in the context and say, you know, get the the product marketing information for one audience versus the product engineering documentation for another, but it's also the, you know, prompt side. How do I contextualize this and say, you know, answer in a deep technical style versus, you know, explain it like I'm five for this product. Right? Because Mhmm. Those two audiences are going to consume that information in very different ways, and we need to make sure that we're aligning the information to their their level of need and understanding both. Yeah. We have to meet we have to meet all of our users at every point of the at every point of experience. That's that's great for sure. So you're sharing some really good examples of what makes makes a generative AI real. Can you tell us a few more examples about where generative answering really excels in terms of, customer and employee experiences? Zach, can you Yeah. Yeah. So employee experience is one that I've been working with a number of customers on. So some of our customers are in financial services, and, this the right answer may be totally wrong in in certain situations. So you can imagine you can imagine let's say we're talking about transferring transferring funds after someone's deceased. Right? Depending on what state that person is in and depending on what type of funds we're moving, you can give a wildly incorrect answer that could have downstream repercussions. Right? So when for the employee experience, right, to be able to have sort of a to have a Copilot like experience, someone who's kinda checking your work and giving you giving you answers and helping you kind of process some very, very complex workflows, Making sure that you can contextualize correctly, but still have a have an answer that kind of matches and is flexible enough to kinda meet the request of the of the of the employee. Really neat. Really innovative. Right? Otherwise, there's hundreds and hundreds of pages of of stuff to read. So you you have this kind of for employee experience, we often either suffer from a lack of information or too much information. Oftentimes, you have years and years and years of procedure that you need to follow, and, the need to interpret it often doesn't allow you to read the entire corpus. So using search to kinda refine that's super important. But then to be able to summarize and and get to the right place, it it really it's really that intersection of contextualization like Eric was talking about with, you know, being able to actually reason what's the linguistic meaning behind the employee's question. Right? So we have the the sort of, like, procedural limitations that we're putting on with search using filtering, querying, etcetera. But then the actual reasoning and semantic layer, which is added by the the large language model. And in tandem, you can we're finding that we can do some really, really interesting stuff with it. For sure. Yeah. Yeah. You can give some examples of some of that interesting stuff real quickly. So one of the the key areas that we often see as a a low hanging fruit for using generative AI is during the case submission process. Right? So customer support, somebody needs help. They can't figure it out on their own. They go and they're trying to create a case, usually electronically, but it can be through other methods as well. Right? What we found is that in many cases, there are actually users who don't try to solve their problem before submitting a case. They just say, this is somebody else's problem. I'm just gonna submit a case and get on the phone, and somebody's gonna try to solve it. And so all of that work that the organization's been doing to put the information out there on the website and make it easy to find and give good documentation is or not because this person's just never even gonna try in the first place. So what we can do is we can actually say, alright. When you're creating a case, right, we're gonna give you a form to fill out for this case. What is the title, the description, some some other metadata that we can predict what you may select or you can select it yourself. Right? But before you can hit submit, we're going to send you to another screen that says, okay. Based on your case, here's an answer that is reading through that description, doing a search for all the information on it, and generating an answer and giving it back. So we're, in some ways, gently forcing someone to see an automated answer rather than talk to a human. Alright? If they don't get the answer, they can still submit the case. Right? If they do get the answer, they can click, never mind. This helps it. Thank you. Right? And so what we've seen is that a lot of organizations, that feature alone is worth, you know, twenty to twenty two percent case deflection at case creation time. Right? You know, about a fifth of the people who are gonna create a case, you're actually able to knock out of that whole flow because you've given them a generated answer in ways that you wouldn't get if you're just giving them the list of three or ten blue links in that same approach, accordingly. Right? And so this is a a scenario where, there's immediate ROI for using Gen AI on this scenario because I don't need to have humans answer the phone anymore. I've just removed the need for a human to spend time doing something. And so now what I can do is is automate this process and automate automate away these cases. Right? Yeah. Another example that I'll use, and I'll use a a story from one of our our customers. I won't name the name. I haven't got permission to name the name, but I'll I'll give a general general experience here. So we're we're using this with one of the large auto OEMs, particularly for trucking, right, where they have essentially a whole series of contact centers behind the scenes for helping dealers and others figure out problems with the the vehicle that has been sold. Right? They're using Salesforce for this and and kind of routing all the information and case information through Salesforce, and they brought generative AI into that mix. We implemented Coveo for this and pretty quick implementation bringing it in. And within the first probably day of this system going live, there had been a case that had been outstanding for the past few months. Right? This was a a vehicle that was broken. They tried I think the number was, like, seven solutions prior to that, and none of them had worked. Right? And the the service agent who is responsible for handling this pulled open the system and said, I wonder if this new thing will help. Pulled it open, got a generated answer back that had found and cited a case from years ago for a very similar issue that this person had never been able to found and say, fine, and said, try this. Within an hour, that vehicle was fixed and ready to go back to the customer and back on the road. Right? And so this was a mix of generative value played a role, right, because it can summarize the information and give the answer and give it clearly. But it's also a mix of, you know, as as Zach said, access to information is a challenge in these cases. Right? You know, if humans are trying to look through they're trying to find all this old stuff manually, the system can look much more broadly and pick this snippet of this case is very important to the you know, looks very similar to what we're asking here and generate that answer. And so this is a just, you know, a scenario where it's driving real life kind of capabilities right away. Right? Now on the other side of that, there was the downside of this to contextualization that we're talking about where initial rollout of this was a pilot. Very quick. Just prove it out. Does it work? Right? But this is a company that sells, both gas and electric trucks. Right? Gas and electric trucks work very differently. Right? And as part of that initial pilot, they hadn't contextualized it to bring in the context to say what vehicle is someone asking about. Right? As such, you would get very obvious mistakes. Please replace the sixth piston on your electric truck. Right? For those that don't understand electric versus gas trucks, there are no piston on pistons on electric trucks. These are electric motors with rotors and spools and everything else kind of inside. So you can run into that, but it's a contextualization challenge that we can now explicitly start refining on by passing that data, filtering down the data that's being searched on, changing how the the LLM prompt is working in order to drive better outcomes. And so there was both angles there. Right? It's still not perfect, but immediately, this thing we've been trying for months on is getting solved. And that's kind of the iteration that I think a lot of organizations are seeing here where it's having magic benefits, but also just obvious challenges to us humans that you need to work through and get fixed over time. And, usually, there's some pretty logical steps you can take to it. Yeah. These are some really great examples. Talk about finding what is it? Like, a needle in a digital haystack. And so just to recap this section, because this is a great segue to talking about the challenges of generative AI. I guess we would say that when it comes to generative AI, there's a lot of potential, but you need to approach it with intentionality and be iterative with the process. Like, it's never going to be perfect at first, but the more you improve upon it, the more sophisticated and precise it'll get. Is that is that correct? Absolutely. And and you can't assume it's a magic button. Right? You can't pitch it as a magic button, or you're just gonna blow up all expectations of your audience. It does have magic features or what feel like magic to us, but, it also has all of its own complexities that I think the world is just really getting their head fully around at the moment. Yeah. And so can you tell us about some of the barriers that people are encountering now when it comes to generative AI for CX and EX? We've already discussed this a little bit. Are there any particular risks that might, cause it to backfire? We mentioned a few earlier. Hallucinations are a big problem. Presenting the wrong information in a scenario where, you know, you really need the right answer, also a problem. One of the things Caveo does really effectively with that too is it kind of its own internal confidence scoring. It it will not generate an answer if it's not confident in the output. That's a that's a really good way to mitigate one of one of those one of those risks. Mhmm. There's a cost element too. A lot of the a lot of the off the shelf models, you know, it may be a little bit unclear how much it'll actually cost you to put something like this into production. And we at Perficient, we've been building chat bots and chat interfaces for years for, like, our I think our AI practice has been doing for over a decade. And even for even for an on premise model, you know, the scaling of it can be really expensive, and it it gets extra extra expensive when you're when you're kinda going through one of these open services. There's always the build versus buy question as well. So one of the one of the biggest barriers is if I want total control over what my thing can do or what my chat experience or my generative answering experience can do, I should be building the models or I should be building the artificial intelligence myself. I should be building the models myself. I should own the full scale of it. That takes a lot of time. And unless you wanna become a data science company, you likely are not going to be able to do that effectively. Yeah. But then the other risk is, you know, how much does it cost to actually have a million people asking asking a question? So Yeah. And do you need question answering every time? Eric, what were you going to say? Gonna say the other thing that we run into all the time on this, that is a a good thing to run into even though it may not feel like it that way is, honestly, just governance challenges. Right? What information can be answered on? What data can be accessed? What does this know about? What should it know about? Right? Fundamentally, this is forcing a, it's forcing a reckoning within within organizations, particularly for CIOs, CDOs, CTOs, right, of, okay, the magic, you know, magic large language bot can now access all of my information, but should it? Right? As mentioned before, one of the challenges that GenAI has is there's no concept of security built into large language models unless you do very fine tuning of prompts and other details. One of the reasons why RAG is so popular and why we leverage it so frequently is because in a search platform, I have a very definite heuristic security model that I can inherit from an underlying system. Right? I know who has access to which data based on Microsoft Entra or Salesforce permissions or whatever your IDP is going to be. Right? And so I can pull that data in. I can structure my permissions around it, and I can only send information that someone should have to that, large language model. Right? Yeah. Can't do that with large language models just pure out of the box. I can't tell OpenAI, you know, as part of its system prompt, really, really pre please don't answer someone about, you know, salaries unless they're this specific person or that specific person. Right? It's just not the same trustworthiness or at least audit auditability even at the the very least for for being able to handle that. Now the other challenge is that inheriting, inheriting security is all well and good, but if that security exists in the first place. Where we've seen a lot of folks stumble is that I mean, search has often been one piece of visibility into all of the security and data flaws within your environment. Gen AI takes that to the next level. And so where a lot of companies have stumbled in the the foundational work they've had to do underneath is making sure that all of their content is secured properly and tagged properly and limited to the right audiences properly so that you're not accidentally getting some free form document coming in and to a generated answer that was never supposed to be available in the first place. And no one even and would ever have known to look at it, but the large language models and and the search engines can see everything. And so they find it because it fits the question that was asked. And so there's a lot of, approaches here. At Perficient, we have something called the PACE framework, which is a very structured way for us bringing organizations through these governance challenges about policies, about what models can be used, how they can be interacted with, all the security constraints on it, all of the the control and governance of the system. But that still remains a big part of the challenge where, you know, it's a new technology that everybody realizes powerful, but always also realizes is dangerous, and so it's just getting people on board with that that paradigm. Yeah. It's very much like the whole garbage in, garbage out issue too that's been coming up with a lot of implementations as well where Gen AI is basically revealing a lot of the poor content management practices that companies might have or might need to improve on. So that's really fascinating. Thank you for that, Eric. And then, Zach, can you tell us a little bit more about change management when it comes to building a good UI for these, customer and employee experiences? Absolutely. I think one of the one of the best things that Coveo does for for its users is just to really promote AB testing, right, even from the query pipeline side. But the ability to to roll out new changes to a small number of users, get feedback, and then scale out effect scale out effectively is critical. Cameo gives you tools to to do that e without even needing a UI framework in place for AP testing. So starting small, expanding out, starting with with, solution areas that you're extremely confident in. So in the in the case with, you know, we were talking about an, an engineering case earlier. You start with summarizations for very basic questions. Maybe you can classify those data into the how to the how to documents. That's what you start with. And then you expand out from there into the more technical complex ones. But being able to being able to kind of control the scope of how many users see it and the scope of answers that you're confident in, super, super important. As far as designing the experience, the I mean, the experience should always be should never be intrusive. It should never be disruptive in a way that it it diminishes functionality. Right? So one of the one of the things I really like about the Caveo UI package that comes with the generative answering, it only shows up if there's an answer. Right? Ordinary it it ordinarily, if it's just a search, you know, you don't even know it's there. But it pops up when it when it has when it has the right to do so and when it has the right answer. I think that's pretty neat. One thing I see a lot of customers do incorrectly maybe is is, you know, the the answer kind of takes over the whole screen and the person has to, like, watch it kinda paint and they Mhmm. It wasn't what they were looking for. Maybe the the answer is is incorrect too. So you don't give the customer a frustrating experience. I tend to summarize this in my head is don't be clippy. Right? Clippy and Microsoft a long time ago taught people that lesson of don't interject yourself when you're not wanted, and so we need to respect that from a from a CX side as well. Agreed. It's the same thing with, you know, you see the little let's chat button at the bottom right of so many so many experiences, and I think, we've been trained by the Internet to ignore those. Mhmm. But, you know, if you look at if you look at Google, the summarized answer, that's something you wanna look at because it's a it's doing something different. And I think Aveo is taking kind of a a pretty good approach there too. Thank you for this. Mhmm. Alright, Eric. Let's talk more about these strategies and foundations for success. Eric, why do I need search if I have GenAI? How does it impact the quality of my generative responses? So this is this is a question that I've got more than anything else in over the last two years. Right? You know? Hey. You're talking about search. Why do I need this? Why can't I just throw chat g p t at it? Right? And, fundamentally, the answer is grounding and and all these other pieces. But if I take a step back for how this this often works, and I think if you go to the the next slide, Patricia, real quick. Right? When I talk about this with customers and just I tend to break this down into a a series of kind of levels of capability that all build on one another. Right? And there's been a a trend lately, in the the generative AI space of we wanna use vector search for everything. I'm gonna use a vector store. I'm gonna do embeddings. I'm gonna treat everything as a vector, and it's gonna be great. Right? And I find that a little bit, interesting for those who have been in the space for a while because it kind of hearkens back to this kind of cyclical pattern that everyone in search has for a while where they say, okay. We're gonna really understand the content better, and that's gonna make search so much better. And then they realize that gets really hard. And then they go to, okay. We're gonna start looking for other ways and other signals and other behavior to do this. And then they go, okay. No. Now we're gonna understand the content better even again. Right? And so if we we look back and we go to kinda keyword search is the lowest level. Right? This is what you'll you'll get in a standard kind of solar elastic search. It's matching terms together. Right? And, fundamentally, the last time we were here trying to understand content better was the heyday of IBM Watson, which I think most folks here will will remember. Watson's biggest kind of innovations, and they were innovations, were natural language processing. Can I understand, you know, how sentences are made up? Was this word the noun or the focus or the participle, as well as can I understand sentiment and everything else, as well as can I allow my customer to put their own algorithms over it to interpret the data better? Right? So somebody's saying, you know, three hundred years ago, I can understand that means seventeen twenty four, right, as opposed to say, oh, well, I have to search for three hundred years ago somewhere else. Right? If we remember back to that time, right, Watson was a a big thing. It was a big focus. There was a lot of hype and excitement about it. Might have gone a little too far in the promising to cure cancer and some of the other things that went on. But, ultimately, what ended up happening was, it got to the point where it was ninety percent, ninety five percent of the way there, but that last five or ten percent ended up being ninety percent of the work. Right? You get to diminishing returns. Right? And so keyword searches we're talking about here are still viable and still part of most solutions that are built. Right? It's still a very accurate way to find across a large set of document, accurately find those that are talking specifically about what someone is talking about, right, or searching for. The next if we go to the next slide, it's gonna be semantic search. Right? And so semantics, this is the same as vector search. Right? This is the idea that I take a a machine learning model, I look at whatever content I'm looking at, and I move it out to a series of numbers, right, that represent the concepts in that item. And those numbers essentially are a vector. Right? You can treat them as a line, and I can determine similarity by figuring out how close together things are. Right? Simple example here is is, you know, I can compare, a royal is ninety nine percent royalty, one percent male, one percent female. Right? It doesn't have a gender connotation with royalty. Right? But a king is ninety nine percent royalty, ninety nine percent male. There's a gender connotation there, one percent female. Queen is ninety nine percent royalty, two percent male. There's a band of men named queen. There are some men who call themselves queens, so maybe we're a little bit higher conceptually here, but, you know, mostly still all female. Now with this construct, I can do some really interesting things where I can subtract a, you know, king minus a royal and get something very equivalent to a man. Right? I can add a woman plus a royal and get something very equivalent to a queen. I can also treat this like a geometric problem bringing everybody back to high school. Right? If I have two lines, I can do the cosine between them to figure out how similar they are and use that similarity for search to say what are the things that we have in our index that are most similar to our search that the user is doing. Right? Mhmm. The challenge with this approach is that, fundamentally, we're still based on understanding the data better. Right? We're essentially operating under the premise of, I'm going to have a smarter and smarter embedding model that lets me get better and better views of this data and what concepts it's talking about and how it's going to work, and I'm going to rely on that. And so we see a lot of current kind of RAG and generative AI systems that are quite simply, they're just, they're using vector search as the way to do this, but it's only part of the problem. Right? Vector search is very good at getting me to general arenas. It's not great at getting me to specific, highly, highly kind of, high precision results. Right? And so we often find that the best case is we combine the two of them. Right? Use signals from keyword search, use signals from vector search, do them both at the same time, and start giving a better answer. Right? We go to the next one of these. It's gonna be graph search. Right? Knowledge graphs are a concept that has been around in theory and practically to a degree for a to a degree for a long time. Right? The examples always used here is movies and actors. Right? This actor is in this movie with these other actors who are in these other movies, and I've got a big graph of how all the actors are related to each other. And, I mean, call it the Kevin Bacon effect at the end of the day in in kind of data form. Right? The challenges with graph search that we see is it's still a lot of work to create a really high quality graph. Right? You know, if you looked at what Google did with giving answers, they had thousand plus people working on generating their graph of the world and everything that was going on that is a lot of work. And so we're starting to see some innovation here with, you know, Graph for RAG and some other things that Microsoft and others are coming out with to automatically create graphs based on large language model understanding of documents. But we're still not a hundred percent there yet. That said, just like vector or and the semantic model and lexical and the keyword model kinda come in, we can add this together and give a much better sort of signal for relevancy if we have graph knowledge about our data to begin with. Right? The last here, if you go on, is behavioral search. Right? This is where what I can say and this is really the innovation that, honestly, Coveo has kinda built their company on over the years while also doing the other pieces is gonna be behavioral search. For behavioral search, I ignore the content. Right? To some degree, I don't care what it is you're searching for. If it's a a widget, a product, you know, a letter, or what have you, it doesn't matter. What I do is I watch the user interactions with that data, and I learn from how users are searching and clicking and interacting. Right? I can learn that a user searches for x. They don't click on anything. They search for y, and then they click on result a and start boosting result a accordingly, even though I have no clue what result a is or what x or y was. And the words may not match, but I know where they're going to click. And so I can start using this series of feedback loops of end users actually interacting with the system to start driving results. This is the layer that I think with large language models is currently being ignored most broadly. Right? Because we're we're kind of saying we're gonna understand the content best is gonna be great and then giving it, but we're not actually capturing a feedback approach for how well are they working? Did they deflect the case? Did they actually go create a document? Did they have to, you know, do some amount of work to replace what this large language model gave us that is starting to get wrapped in more and more for automated improvement of relevancy of the content that they're using over time. Right? And at the end of the day, last slide, this all goes up to generative answering and kind of the rag approaches we talked before. Right? But all those four steps before, those are our foundation underneath most generative answering systems, right, in terms of, how do I find the right content that is contextually appropriate, that is used by peers effectively, and we've seen have good outcomes in the past, and automatically bring that back into the underlying context window for the large language models that we can answer to a user accordingly. Little bit of a deep dive there and and little bit deep down that, but happy to go into questions at the end on any of this anybody would like. I love this. So hybrid search plus generative AI equals a great answering experience. Exactly. And so Coveo's relevance generative answering relies on innovative technologies like retrieval augmented generation. Eric and Zach, can you break down why RAG is essential for these reliable generative answering, answers? And how does Kuviveo's approach to RAG differ from other providers? Zach, you wanna take this one? Sure. Yeah. For all those all those reasons that Eric talked about, right, the the pure pure vector based search is going to use whatever whatever relationships exist in the linguistic model that was built off of the entire Internet. Right? And the entire Internet is great for all sorts of things. In my opinion, it's also horrible for all sorts of things. And when you're trying to have a have an answer that, you know, is more localized or maybe requires special special experience to kinda understand what's correct or not Mhmm. RAG is a good way to kinda limit the limit what data is used and limit the correctness of the answer to, you know, a pool of content that that is vetted. Right? We we authored something, and it knows better than the Internet for this case. Not always the case. Some basic questions like how to make a how to make a cherry pie. Well, there's a lot of recipes out there for how to make a cherry pie. But if I am writing a cookbook, I wanna use my cherry pie recipe, and I want that to be the answer that we give. And Raglan allows you to kinda control control that contextual response. What Coveo does that's really neat, is it gives business users capabilities to kind of control how the retrieval is done. Right? So, Caveo, you index the content once. If it gets added to your semantic model, which is what what you guys call it, it can be it can be used at retrieval. You index it once, and you can use it many, many places. So I I have that same recipe indexed, but I might want to I might have an additional document that I wanna have for my for my cookbook author compared to my cookbook audience. Right? The people who are looking for looking for responses. And the the business user can go in and kind of build rules directly in the query pipeline that affect which documents come back and can be used for an answer. And that scales really, really effectively, and it requires no code changes on the application to implement. So my my business user has in essence, you in essence, you treat the the answer generation as a person who's really, really good at explaining something if you tell it what the answer is. And the business person gets total control over what types of what types of data should be used to make that answer. Query pipeline is has been extremely, extremely useful for for that sort of application. You can, in some ways, simulate workflow by kind of using cascading filters, that can be that can be managed by a business user. And when you add to that also the your automate automated relevance tuning and the a bunch of other models that Cameo has that that really make search high quality, we're influencing it not just with with business controlled, rules, but also the relationships between other users and and their clicks. Right? Which documents are helping other users with similar questions? We can feed that into the model, automatically without without needing to to redeploy anything. So that that combination lets you kind of stay current Yeah. And not just rely on the linguistic similarity or the vector similarity or how close to the rest of the Internet this this large language model kind of thinks these these, topics are related. But, it lets you be a lot more granular and puts control in in a lot more people in a lot in the hands of a lot more people. Yeah. So in a way, it's here. I'll skip through some of these slides, but it's really layering in several different things to offer a great experience. So it's not just the Gen AI alone or the rag alone. It's the machine learning models. It's the analytics. It's it's the whole shebang. The biggest challenge with getting the right answer to the user is the data, the underlying data and how we how we find it. Mhmm. What's neat about your solution is you can swap out the large language model as often as you want. And the the retrieval and kind of understanding what chunk is correct for the right answer is where a lot of the magic happens, and you're not relying just on a static, static vector or static embedding or or just behavioral or or just business rules. Right? You can kind of pretty easily and scalably bring all of those together. Thank you for that. Yeah. The one other thing I'll highlight here highlight here as well is that there's a part of the reason why Coveo is valuable in this is not because of anything to do with LLM at all. It's because of the amount of maturity of the data platform that lives underneath Coveo in terms of being able to connect to many sources, being able to do it quickly, inherit security, do it at real time. Right? You know, one of the the things back to to the, you know, why is RAG better than than, you know, large language model or just an LLM based approach, training a large language model or fine tuning a large language model costs a lot of money. Right? Because it costs a lot of money. People don't do it super frequently. Right? And if you're in an industry where your data changes by the minute or by the day, right, say there's a new, article alert, knowledge based article alert, or say there's a new product that came in, or say there's a new order the customer made. Right? I would have to train a large language model every time that happens, right, if I'm just going against a large language model directly. With RAG, I can do that as long as the underlying search index is updated. And with Coveo, there are many, many customer many, many connectors out of the box that are going to frequently, you know, every fifteen minutes, update all of the data from these systems and do it incrementally and only pull the new changes that have happened. Right? Or allow you to push data in with an event driven feed from Kafka or some other system so that getting it literally in real time as the system is processing, my back end search kind of brain in many ways has all of the latest information for us to answer from. So that temporal nature for a lot of our customers ends up being a really important part of it because you've gotta be able to answer with up to date information. If you have a new alert on your site, but the the LLM is giving an answer that has no clue about it, you're gonna have a bad experience and bad kind of customer bay customer outcomes accordingly. Agreed. This is so reassuring and exciting to hear about. I I love the passion with which you speak about our company. Thank you for this, Eric and Zach. We're gonna ask a few more questions. We're winding down. One thing I'd love to shout out is I completely agree. Data and content are so important to effective generative answering. And one thing I'm excited to shout out during our webinar today is probably in January, we're gonna be releasing our new knowledge hub, which is going to be an interface that works with our generative answering solution, that will really allow you to assess your answering content quality all in one space. It's one dedicated interface similar to our merchandising hub. Well, where you'll be able to trace the source of your generative answers to see which content pieces are informing your specific answers and also to implement rules as well to block specific passages too. So, hopefully, this will help you ensure that your outputs are accurate, compliant with your enterprise policies, and aligned with regulatory standards, and we're really excited to be launching this soon. Moving on to some final questions. Wanted to ask you, Eric and Zach, how does a feature like Coveo's new passage retrieval API support all of these efforts that we've been discussing? What does it bring to the table? I think you mentioned chatbots before and custom experiences. Tell us more. So passage retrieval kind of, from from my perspective, it brings the platform to the Coveo experience. Right? Coveo is a platform, but it tends to be integrated into organizations via solutions where Coveo kind of packages and brings everything together. With passage retrieval, this is actually bringing the platform of all of the information Coveo Coveo can give to developers who can go and build custom things now on top of it. Right? I can now start treating something like Coveo as the brain or the information source behind my application and then use lane chain or any other LLM toolkit that I want to go build my own custom application. Right? As an example, at at Perficient right now, we are, we built a a solution where for RFPs that we get. Right? You know, those who whoever write or receive RFPs, you get a long Excel document with lots of questions about your organization that you need to go and figure out. Right? And so some of our developers, went and built a tool where it just says upload the Excel and tell me what column has the question and what column to put the answer in. You upload it, process for a little while, and on each of those questions, it's reading the question. It's doing a search against Coveo, grab grabbing all the information to answer that question, generating a a prompt and sending that to an LOM to synthesize an answer based on our past responses, our marketing information, our commonly accepted FAQ responses, legal response we can have, etcetera, and it's pasting that into the spreadsheet. It iterates through all of that. A couple minutes later, you get a spreadsheet download link that says, here's your updated RFP response for a seller to go and pick up and say, alright. Now I can refine this down and adjust this if needed rather than spend potentially hours from any of these RFPs filling out all of the same boilerplate information that the customer obviously needs to know, but that it's asked in a slightly different format in each RFP, so I can't just copy and paste each time. Right? Yeah. This is a scenario where we're getting a ton of value out of the platform because Coveo is the I'll call it the the data lake, the the brain with all the information from all of our different sources, be it SharePoint or websites or databases or what have you. But and we can have that one unified, you know, one stop shop to get all the information and then have LLM developers go do what they do best and build on top of that and build innovative ways to do it. Right? We're seeing this as well for integrating Coveo into, you know, chatbots with Amazon Lex and kinda Bedrock or with Einstein, right, and on the Salesforce side. It's a general knowledge layer that can be used everywhere, that we can go and kind of build our own custom pieces, or we can go and create our own custom prompts around if we have a very unique scenario that may not be, you know, the the generic out of the box I wanna find answers that that a company like Kaveh would build for everyone because I have something very unique just to me. Right? I love that. I know you've done a lot with custom prompting and some of that stuff, so I'll pass that one over to you as well. Yeah. I mean, one really neat thing you can do with passenger retrieval too is yeah. If you if if someone does wanna have just a you know, I built my own LLM, and they want that extra data, they don't wanna train it on all of the data that exists, Kaveo could be the grounding solution for that. In addition, you can you can do things like detect detect certain keywords or detect parts of speech and and use that to create the filters and hooks into the query pipelines in Kaveo. So you can actually go go a level deeper and and kind of do some pre intent detection and use that at to set context levers that control what data comes back. And that's been really, really interesting to start to experiment with, and I I see it going pretty, pretty far in the ability to kind of add add that reasoning layer that sometimes, without the context or, you know, a career in the industry, you can't really you can't really match just by turning something on. That's so exciting to hear about. So I guess what we're saying is that with Coveo, if you're a beginner, we have an out of the box solution that's ready to go. And if you're very mature and sophisticated and want to do all sorts of things with generative AI, sky is the limit with our intelligence layer, basically. Very really good to say that. Yeah. Thank you both so much. We're gonna move on to one of our last questions. So tell us a little bit more about you've shared some really great examples throughout this interview about some of your customers. Can you tell us some more real world examples of companies that have been successfully using, using generative AI, specifically with Coveo and Perficient? Zach, can you go first? Maybe there's an example. Yeah. Yeah. Go ahead, Eric. You you can go first. Well, so so the first I was gonna chat about, Zach, is I was gonna jump into SAP and some of the the outcomes that they've had with this. Mhmm. And so SAP across both SAP and SAP Concur, which are are the two kind of business units that are are using this broadly across the organization, right, are bringing generative AI and and generally Coveo across the board into into their solution. Right? The number one goal here from their perspective is to allow their customers to self serve more and allow their agents to more proactively, proficiently serve their to serve the the customers accordingly. Right? There's one instance here that's being used, but it's being used pretty broadly across, things like customer portal or or SAP for me or, you know, within product, right, in order to find answers. Right? But at the same time, that same instance is used behind the scene by agents when a case does come in to start answering that. They have seen a a pretty dramatic impact on their their case, volumes at at SAP from doing this. Believe roughly thirty one percent is the last number I had decrease in in cases that are being submitted. And so that for them is a huge cost savings now because I'm starting to use all the technologies we've been talking about to actually drive outcomes. And SAP is is far from a simple organization. Right? I mean, thousands of products. Right? Hundreds of versions of every product, customers all across the world. And many of these products are kind of the core backbone of an organization, right, where if they're broken, if something isn't working, or if I give the wrong answer, there are major, major, major implications accordingly. And so they're doing this in a way that is is trustable and very well tested to make sure that it's not impacting, I'm gonna call it, the the customer chain down the line. Because if they were to, it's going to blow back at them as harder harder than most others companies in the world that they could possibly have this just considering the scope and breadth of where where their solutions are integrated. Eric, I gotta I gotta jump in and correct you, though. We are not live with we are not live in general SAP, just with Concur, but we are we are doing our best experiments with it. Thank you, Zach. Thank you for for correcting me. Yes. Love this. And, Zach, what are some other examples you've seen? Customers who I can't name, but we are yeah. We are are using it for employee experience to to kinda summarize and so some of those samples I was talking about before with the fund management, that's a good example. Mhmm. Some of our other we're working with some other customers on, audit use cases. So, specifically, I it it's kinda similar to the to the fund management use case, but, specifically, we need to have a a regional or or legal kind of basis to filter data before we're generating answers, but it's a lot of how to manage audit scenarios. Mhmm. And that is a a really, really rich use case to to test out as well. For sure. Thank you both so much for all of this. We're gonna open this up now to the q and a section. Let me just see. Oh, we have one really good question actually already, and it's kind of the same topic. Eric and Zach, what are some quick easy wins, the best use cases that you could just try out GenAI with right off the bat that will have maximum impact across an organization? Your your go ahead, Eric. No. After you. After you. Your knowledge based articles, your how to articles, the how do I questions that you often are are writing specific answers for. If you've written if you have any documents that explain explain those basics, the basic how tos, pointing command, generative answering at it is such a turnkey win. Right? Especially if you already have a search box, just turning it on, you're gonna you're gonna save users a click. And the goal with search is always to get, I I learned this from Eric, this the goal of search is to get users off of search as quickly as possible. You were there as a it is the liminal space. Right? So if you can even save them the click into the article of how to answer that question, that's a win. A lot of, in I use this all the time, with the Caveo documentation. Right? So I do a lot of Caveo implementations for a lot of customers. RGA is turned on on Caveo's document documentation pages, on their help pages as well. So when I have a question about how to do something with Caveo, eighty percent of the time, I can get it answered just on the generative solution, or generative answering on their page. Saves me a lot of clicks, saves me reading, or at least helps me kind of focus in on on what my follow-up question will be. So that's the best place to start. And you're not even doing particularly simple questions usually, Zach. You worked with it for a while. So, it's it's digging and giving the advanced the advanced answers, which is, to be honest, even even as as important or more important than the simple answers. Because the I mean, the simple answers you could always do just via how to or an FAQ page, right, where where I can kind of force the real simple things in there. It's that long tail of complex, you know, how do I implement this interface when you're using this version of React? And, you know, for this browser, what's going on? Right? A lot of these complexities start combining together and giving a a much harder time at generating specific content for it that generative AI sells pretty well. Yeah. Wonderful. And then I have one more question. This has been a good question that people have been asking a lot, throughout this past year of Gen AI experimentation. Zach, you mentioned, sometimes they search. They don't even have to click. How do you track, I guess, like, search success and generative answering success in this new world of generative AI? If people aren't clicking, they're not there's less conversions. How how do you track the success of these initiatives? It's a big it's it's one of the big questions that we keep asking. Right? And it's a it's it echoes broad on on the broader what's the future of the Internet? Right? If we move to generative solutions, we're even going to author content in the future. Or is it all just gonna be generated answers, and is it gonna be an ouroboros, and is the Internet gonna eat itself? It's a great question. In an enterprise context, we have more control. So one thing that Caveo does that's really good, right, is it is not training its semantic embeddings model or its generative solution model on customer data, which lets us move past a lot of restrictions that a lot of our our more risk averse customers have. We, in essence, we don't need to we don't need to worry about how the model is learning from the queries that we that we are passing to it. That'll that allows us to kind of put all of the, well, let's just push aside a lot of the legal concerns about how is my data being used to to kinda feed that machine. But on the other side, we can still capture whatever. We all we we can still capture all the analytics and interactions and use them for for reporting and dashboarding and the ability to create custom rules. Right? So Caveo one of one of Caveo, our customers' favorite things about Caveo is the analytics platform that comes with it. With the generative answering solution, there's a an additional kind of analytics package that you can get so you can process you know, customers can provide feedback. Sure. But we also can kinda see if there was a conversion from from an answer. You know? Users can say if they liked it or didn't like it. That doesn't feed the model, but it does help us kinda review the quality of the answer. Yeah. And like you mentioned, there's that, the the new the new dashboard for the for the prompt management that's coming out that's gonna be hugely, hugely helpful. Agreed. The other the other thing we get pushed to is is analytics by tracking outcomes, true outcomes. Right? So, you know, watch the whole session. If somebody had this generative answer, did they just leave the site without creating a case? Did they create a case? Okay. I can assume the generative answer wasn't very good. Right? There's some inference that comes into the analytics stream when we start having generative answers where someone isn't going to click by watching what they do next that becomes important for understanding how are we impacting the user journey overall as opposed to just this one specific interaction with the user as part of that broader journey. Wow. Eric and Zach, this has been such an informative session. Thank you both so much for joining me today. We're out of time. I could talk to you two for hours. This has been wonderful. Audience, as you can see, with the right partners and technology, you can really apply generative answering in really innovative ways that really benefit your customers and employees. Eric and Zach, do you wanna plug any resources, before we sign off? Just I would say, please visit us at Perficient dot com. We we've included here kinda just a fact sheet about the company. We do a lot of work in this space with a lot of, wonderful customers of ours, and we'd love to chat with you. Thank you. And don't forget to check out our upcoming live webinar that'll be taking place on December eleventh. It's called the the best retrieval method for your RAG and LLM applications, and we'll be joined by Juanita Olguin, our senior director of product Barca, and my incredible manager, as well as Sebastien Phakiette, who's the VP of machine learning at Coveo. And it's gonna be a really exciting and fun time. Everyone, thank you so much for joining us today. This has been such a blast, and we'll send you the recording in the next twenty four hours. Thanks again, Eric and Zach. Thank you. Thanks so much. Everyone. Bye.

Register to watch the video

Harnessing CX & EX: Unlock Success with GenAI

Catch this insightful 1-hour webinar featuring experts from Perficient and Coveo as they explore how Generative AI is transforming both Customer Experience (CX) and Employee Experience (EX).

Key Highlights:

- Real-World Success Stories: See how enterprises are achieving impressive ROI while mitigating risks using GenAI.

- Enterprise-Grade Security: Learn how Coveo ensures seamless, secure integration to protect your data.

- Passage Retrieval API: Discover how this technology enhances GenAI precision and accelerates time-to-value.

Perfect for CX/EX leaders, IT professionals, and enterprise decision-makers, this session provides practical strategies to unlock the potential of GenAI. Watch now and start transforming your enterprise today!

Eric Immermann

Practice Director, Search and Retrieval, Coveo

Zachary Fischer

Senior Solutions Architect, Perficient

Patricia Petit Liang

Platform Marketing, Coveo

Next

Next

Make every experience relevant with Coveo

Hey 👋! Any questions? I can have a teammate jump in on chat right now!

1