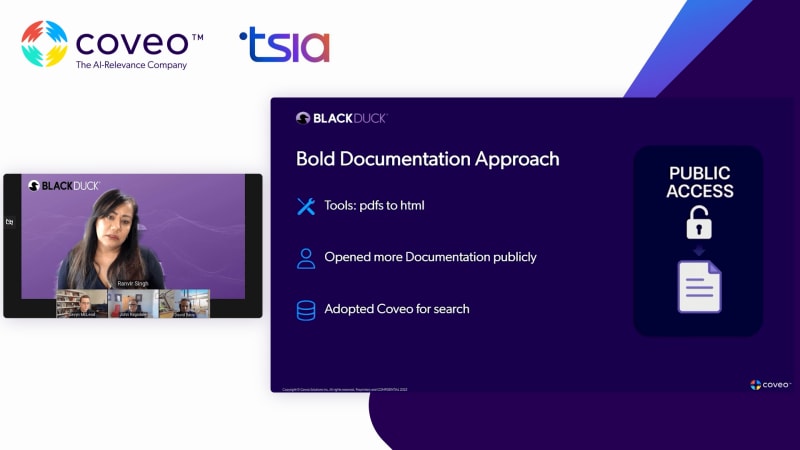

Hello, everyone, and welcome to today's webinar, AI-Powered Deflection: Black Duck's Forty-Three Percent Self-Service Lift, brought to you by TSIA and sponsored by Coveo. My name is Vanessa Lucero, and I'll be your facilitator for today. Before we get started, I'd like to go over a few housekeeping items. Today's webinar will be recorded, and a link to the recording will be sent to you via email. Audio will be delivered via streaming. All attendees will be in listen-only mode, and your webinar controls, including volume, are in the toolbar at the bottom of the webinar player. We encourage your comments and questions. If you think of a question for the presenters at any point, please submit it through the Ask a Question box in the top left corner of the webinar player, and we'll open it up for a verbal Q&A at the end of today's session. Lastly, feel free to enlarge the slides to full screen at any time by selecting one of the full-screen buttons in the top right corner of the slide player. I would now like to introduce our presenters: John Ragsdale, Distinguished Researcher and Vice President, Technology Ecosystems, TSIA; Gavin McLoyd, Lead Product Marketing Manager for Agentic AI and Knowledge, Coveo; Ranvir Singh, Head of Global Knowledge, Black Duck; and Dave Baca, Director, Support Services Research, TSIA. As with all of our TSIA webinars, we have a lot of exciting content to cover in the next forty-five minutes, so let's jump right in. John, over to you.

Well, thank you, Vanessa. Hello, everyone, and welcome to today's webinar. We're going to talk about what it takes to be successful with AI and GenAI, and we have a great success story from someone who is not only leveraging the technology successfully but also getting some really great ROI.
So I want to kick off with a little industry framing, and I'd like to bring into the conversation Dave Baca, director of support services research for TSIA. Dave, I know you've been doing a lot of research on AI, and agentic AI in particular, over the last couple of years. Could you talk about the primary use cases you're seeing for support services?

Hi, John. Sure, absolutely. Recently, we held a town hall within our support services research practice on the AI-powered support framework, a new framework we recently published that is available to all of our support members. During the town hall, we polled support leaders on the AI delivery technologies they're currently implementing. As you can see from this graph, there's a range of AI technologies being implemented, from AI search answers all the way to more complex agentic predictive analytics. The polling, though, indicates a clear preference among support leaders for GenAI search and answer technology, which is used to power search and answer systems. Not too surprisingly, AI chatbots, which have been around for a long time, have made a very strong comeback with the advent of GenAI. Several members within our support membership are using AI chatbots at a very high performance level, and they're demonstrating very high explicit case deflection as a result. But clearly, the primary focus here is on harnessing generative AI's ability to provide more accurate search and answer solutions. That brings me back to the new AI-powered support framework. The framework defines three critical workflows that support organizations need in order to deliver very high efficiency and scalability in answering customer questions. Customers have questions, and support agents generally have the knowledge.
That support knowledge really extends beyond support, across the entire enterprise. So from a knowledge management workflow perspective, it's no surprise that KM really is the foundation of everything in support. In fact, without good knowledge, it's impossible to run any service business unit, whether it's professional services or education; even product management is difficult. The knowledge management workflow leverages high-volume support interactions. On average, our support members handle about forty-five hundred inquiries and support cases a month, and that volume is what's needed to generate current, high-quality, readily available knowledge. The key knowledge management metrics, from our recent Knowledge Management Maturity Model 3.0 research, center on participation rate, article publishing time, review frequency, and archive frequency. In essence, what matters is everything that keeps the content fresh, relevant, and alive. This is particularly important for agile product life cycles. When technology organizations use agile software development, it's essential to keep a tight rein on the quality and relevancy of knowledge as the product changes day to day and week to week. The next critical workflow is case management. Customers have questions. Oh, yes, they do. But they also have high expectations, and the outcome they expect from their support organization is a support agent who can provide high-quality, persistent technical solutions.
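The four KM metrics Dave lists are named but not defined in the talk. As a rough illustration only, the Python sketch below computes plausible versions of them from article records; the field names, the 180-day review window, and each metric's exact definition are assumptions, not TSIA's published formulas.

```python
from dataclasses import dataclass
from datetime import date
from statistics import median
from typing import List, Optional


@dataclass
class Article:
    author: str
    created: date
    published: Optional[date]      # None while the article is still a draft
    last_reviewed: Optional[date]  # None if the article was never reviewed
    archived: bool


def km_metrics(articles: List[Article], engineers: List[str], today: date,
               review_window_days: int = 180) -> dict:
    """Illustrative KM health metrics; every definition here is an assumption."""
    # Participation rate: share of support engineers who authored at least one article.
    contributors = {a.author for a in articles} & set(engineers)
    participation_rate = len(contributors) / len(engineers)

    # Article publishing time: median days from creation to publication.
    publish_days = [(a.published - a.created).days for a in articles if a.published]

    # Review frequency proxy: share of live articles reviewed within the window.
    live = [a for a in articles if not a.archived]
    recent = [a for a in live
              if a.last_reviewed and (today - a.last_reviewed).days <= review_window_days]

    # Archive frequency proxy: share of all articles that have been archived.
    archived_share = sum(a.archived for a in articles) / len(articles)

    return {
        "participation_rate": participation_rate,
        "median_days_to_publish": median(publish_days) if publish_days else None,
        "reviewed_within_window": len(recent) / len(live) if live else 0.0,
        "archived_share": archived_share,
    }
```

In practice these would be tracked as trends over time rather than as one-off snapshots.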
This workflow ensures very high efficiency and scalability from the time the customer submits a new case to the time the support agent resolves it, and it focuses on the outcome the customer deserves and requires: resolution time. It also drives customer satisfaction to a high level with a low level of customer effort. Lastly, the technology stack has to come into play. Over the past year, actually probably more like the last two years, we've seen the advent of what's referred to as retrieval augmented generation, RAG for short. RAG works hand in hand with AI search applications to serve up that one best answer. To provide that one best answer, the unified search application has to supply relevant, accurate data, basically the data cloud or knowledge cloud that the RAG process then operates on. Historically, AI unified search, and now RAG layered on top of it, is best measured through TSIA's ecosystem of self-service KPIs. We measure self-service success, implicit and explicit case deflection, and overall end-to-end digital customer satisfaction, basically the customer's experience of that self-service solution. At this point in time, we're seeing that the industry still has a long way to go to reach truly high productivity and performance. Self-service success is being achieved at roughly the flip of a coin, a little more than fifty percent of the time. Implicit case deflection is about forty percent. And when customers visit the web form to submit a case, explicit case deflection happens in only one in five visits. So there's lots of work left to do within the industry, and knowledge management is key and central.
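To make the retrieve-then-generate split concrete, here is a minimal, hypothetical Python sketch of the RAG pattern Dave describes: a toy term-overlap retriever stands in for the unified search layer, and only the passages it returns are placed in the prompt, tagged with ids so the answer can cite them. The function names and corpus are illustrative; no real search product or LLM API is called.

```python
import re
from collections import Counter
from typing import Dict, List, Optional


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, corpus: Dict[str, str], k: int = 2) -> List[str]:
    """Rank documents by term overlap with the query (stand-in for unified search)."""
    q = Counter(tokenize(query))
    scored = []
    for doc_id, text in corpus.items():
        d = Counter(tokenize(text))
        score = sum(min(q[t], d[t]) for t in q)
        if score > 0:
            scored.append((score, doc_id))
    scored.sort(key=lambda s: (-s[0], s[1]))  # best score first, ties broken by id
    return [doc_id for _, doc_id in scored[:k]]


def build_grounded_prompt(query: str, corpus: Dict[str, str], k: int = 2) -> Optional[str]:
    """Build a prompt that restricts the model to the retrieved, citable passages."""
    ids = retrieve(query, corpus, k)
    if not ids:
        return None  # nothing to ground on: show no answer rather than risk a hallucination
    context = "\n".join(f"[{doc_id}] {corpus[doc_id]}" for doc_id in ids)
    return (
        "Answer using only the sources below and cite them by id.\n"
        f"{context}\n\nQuestion: {query}"
    )
```

Returning None when retrieval finds nothing reflects the point the speakers stress throughout: answer quality is bounded by retrieval quality, so an ungrounded question is better left unanswered than passed to the model.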
And really, that's the basis and the focus of this webinar today. Lastly, I'll just say that the three interconnected workflows, knowledge management, case management, and RAG, form the backbone of AI-powered support, and optimizing them significantly improves the overall support operation and customer satisfaction. So with that, John, back over to you.

There we go. Most support organizations have invested in GenAI at this point. I'm wondering if you would hazard a guess: what percentage of TSIA members are getting the results they anticipated?

From a TSIA support member perspective, we are just now starting to see GenAI enter a productive realm. For AI in general, we know that when C-level executives are responsible for establishing the AI strategy, better outcomes are achieved. More than half of our organizations are documenting success when a C-level executive responsible for AI strategy is leading the ship and has a hand on the helm. Another study, from IBM, indicates that roughly twenty-five percent of AI initiatives have delivered the expected ROI over the past three years. So, again, there's a huge opportunity within the technology space, and specifically within support services, to really benefit from AI.

Yeah. Well, I know there are a lot of challenges with AI, but luckily, we have Gavin with us today. I know you have worked with more than fifty companies on successful AI deployments. Could you talk about what some of those pacesetter practices are and how your customers are getting results?

Yeah, absolutely. Going back to what Dave said, it really comes down to good retrieval and good relevance, feeding a retrieval augmented generation practice with LLMs.
But I want to start first and foremost with the fact that companies implementing agentic AI on that kind of foundation are seeing really great results. SAP Concur, for example, was able to reduce case submission rates by thirty percent and cut their cost to serve by over eight million dollars a year. Similarly, Zoom saw a nineteen percent reduction in case submission rates. And it's not just technology organizations. We're seeing this in regulated industries as well, like large US airlines, financial services, and medtech, because the risk of hallucination really drops with good retrieval and good relevance, and of course secure retrieval and relevance. But it's not easy. We understand that. There are significant implementation challenges that we see people trying to overcome. We hear that custom AI builds are costing too much. People are struggling to get out of the lab at scale, and retrieval is a core component of what's limiting that scalability. On the risk side, we know that fifty-nine percent of CIOs worry about hallucinations and forty-eight percent fear misuse. Hallucinations put company reputation and customer loyalty at risk, and in some cases they can cause significant harm to users who are given the wrong information, especially in regulated industries. So regulations, privacy concerns, and security are significant risks stalling progress. We also know the market stakes are high. Those adopting GenAI are really widening the gap between themselves and competitors who are not. It has come down to the fact that AI adoption is no longer optional. Companies have to adopt GenAI, and inevitably agentic AI, to keep pace and stay relevant in their markets. This is a mandate we see and hear coming from boards and from executive teams. And it's no longer acceptable to just keep testing.
A few years ago, when generative AI hit the market, everyone was experimenting, and that was okay. That reality has changed: investors are now demanding results on their trillions of dollars of GenAI investment. And anecdotal evidence isn't enough. They want hard KPIs, evidence that their investments are working, and accurate reporting on that. Back to the theme of what you were talking about, it comes down to having a solid foundation of content and being able to retrieve the most relevant, accurate content to feed the LLMs so they can give accurate and reliable answers. There's a formula we've seen work across our customer base for getting to GenAI with confidence. It starts with starting small and deploying fast. We've seen organizations that start small get out of the gate in four to six weeks, laying the foundation with good knowledge practices and establishing a small but clean searchable index for high-volume inquiries. Think about the eighty-twenty rule: what is the twenty percent of content or knowledge that's going to answer eighty percent of customer queries? Then we can expand smart with analytics. Instead of trying to boil the ocean and taking nine months to deploy your knowledge base, if you start small, you can use analytics to understand where your content gaps are, so you can publish new content, update existing content, focus your efforts on what will have the biggest business impact, and keep tuning relevance with both machine learning and business rules. We can understand how people are searching and apply a bit of an SEO mindset to how we bring that content to users.
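Gavin's eighty-twenty advice and the "expand smart with analytics" step can be expressed as two small computations. The sketch below is an illustration under assumed inputs (per-article view counts, and a log of queries with their result counts); it is not a Coveo analytics feature, and the minimum-count threshold is arbitrary.

```python
from collections import Counter
from typing import Dict, List, Tuple


def top_content_for_coverage(article_views: Dict[str, int], target: float = 0.8) -> List[str]:
    """Smallest set of articles whose combined views reach `target` share of all views.

    Taking articles in descending order of views minimizes the size of the set.
    """
    total = sum(article_views.values())
    chosen, running = [], 0
    for article, views in sorted(article_views.items(), key=lambda kv: (-kv[1], kv[0])):
        if running >= target * total:
            break
        chosen.append(article)
        running += views
    return chosen


def content_gaps(query_log: List[Tuple[str, int]], min_count: int = 2) -> List[str]:
    """Queries that returned zero results at least `min_count` times: candidate content gaps."""
    zero_hits = Counter(query for query, n_results in query_log if n_results == 0)
    return sorted(q for q, c in zero_hits.items() if c >= min_count)
```

The first function tells you where to focus a small initial index; the second gives a starting list of articles to write or retitle as usage grows.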
And when we have that foundation in place, we can scale to GenAI and agentic experiences with confidence, because we know the system can retrieve accurate, citable content to feed the LLM, and we're delivering accurate answers to customers and employees. I'm going to let Ranvir talk about the Black Duck story; I don't want to steal any of her thunder. So I'll just emphasize this with a case study we did with Zoom. They focused first on content quality and search relevancy, applying that SEO mindset, as I said: looking at user behavior, looking at content gaps, and looking at how things were written to make sure the language matched how users were searching, not necessarily how things were referred to internally. The initial gains from those first two steps alone were significant: massive improvements in click-through rate, in case deflection, and in content gap reduction. Then, once that foundation was in place, they layered generative AI onto one use case, their service search portal, and saw massive incremental gains: a twenty percent increase in self-service success rate and a nineteen percent reduction in case submission rate, which was significant because they had already seen improvements from those first two layers. From there, you can expand AI across use cases. Once the foundation is in place, you can apply that relevance, that good search, generative answering, and agentic experiences across the support ecosystem: in-app support, self-service portals, AI agents, chatbots, copilots, the agent insight panel, and the information you provide to your agents in the case form. That's what we've seen many other customers do, and that's exactly what Black Duck did.
So without further ado, the main reason you're here is to hear from Ranvir and understand the Black Duck story and how they got to GenAI. Ranvir, over to you.

Thank you, Gavin. Hi, everyone. I'm super excited to share Black Duck's journey to GenAI. Our GenAI success didn't start just by enabling AI. It started several years back, when we began to rethink how we do knowledge sharing. Our knowledge was scattered across many different tools, and we didn't have one easy, simple way to search. This journey started about five years ago, and we focused on three primary areas to set the foundation: knowledge-centered service (KCS), documentation, and unified search. I'll go deep into each of these three areas. To tackle KCS, we took a phased approach. In phase one, we took a self-starter, DIY approach: we started reading about it and tried it, but it didn't give us the results we were hoping for, maybe because we were not fully invested. In phase two, we introduced more structure by bringing in official KCS training for our support management teams, and one of our technical team members took on a part-time role as knowledge manager. That didn't quite give us the results we were hoping for either. Maybe that's because training only the support management team is not enough to fully adopt KCS. Also, knowledge management is not a part-time role. It's an ongoing journey, with a lot of processes, procedures, and challenges to tackle. All of this happened before my time. Then, in phase three, we made a full commitment by hiring a dedicated knowledge manager and building a formal KCS program. It took some trial and error, but in phase three we moved KCS from optional to required.
We have about ninety support engineers around the globe, and we made training and certification mandatory. It was an intense three-day training that all of us took. The best part that came out of it is that everyone understood the why behind it. In change management, I think we sometimes forget the why. So our knowledge creators learned why we were adopting KCS, why it's important, and how it benefits them as well. That was literally the shift we needed. Since we have a large team of support members around the globe, we went with seventeen different coaches, who coach micro teams (three to four KCS teams) around the globe. We did additional training for the coaches so they could coach their teams, and that drove adoption and made a big shift. With that, within two years, we were able to triple our knowledge base. From there, we also introduced what we call KCS playbooks: our coaches track the case attach rate on a monthly basis and audit the cases handled by their team members, and they introduced a process adherence review as well. That helps us focus on the areas where we need to improve within our teams. At the same time, we went to our management team and made KCS part of everyone's MBOs. With all of that together, the training, the micro teams, and making KCS part of everyone's goals, KCS literally became our case-handling workflow. Every case our TSCs handle follows the KCS workflow: they start with a search, and if there's a knowledge gap, they go ahead and add a new article.
Otherwise, they link the existing article, and our attach ratio went up across the board, because when you start with a search, it makes you ask: is there a knowledge gap? Do we need to create an article? Do we need to modify one? That took care of keeping quality up, avoiding duplicates, and filling knowledge gaps from the beginning. The second key focus area is documentation. Back then, we had close to two thousand documents, but they were all in PDF format, so they were extremely hard to search. The second challenge was that these documents were only available to our customers, not publicly. We know from Google Analytics that fifty percent of our customers come from Google Search, and if Google isn't showing links to our documentation, it's just harder for customers to get to the content. So we had two problems to tackle: moving from PDFs to HTML, and opening up the documentation publicly. We adopted Zoomin for documentation, which makes our documentation easy to manage and present. At the same time, we went to our product management and legal teams to open up as much documentation as possible for public view. And we adopted Coveo for search, building on our existing KCS and Zoomin documentation. That was pretty much the foundation for a unified knowledge base. That brings me to our third key area. We have lots of tools: we track our tickets in Jira, there's a lot of documentation and information in Confluence, and we use Skilljar for our academy and education. But we didn't have an easy way to search all that content in one place.
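The search-first loop Ranvir describes, and the attach ratio her coaches track, can be sketched in a few lines. This is a hypothetical model: the `keyword_search` matcher, the article id scheme, and the attach and reuse rate definitions are assumptions chosen for clarity, not Black Duck's tooling.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Case:
    case_id: str
    linked_article: Optional[str] = None  # article attached when the case was closed
    created_article: bool = False          # True if the engineer had to write a new one


def keyword_search(text: str, kb: Dict[str, str]) -> List[str]:
    """Hypothetical matcher: articles containing every word of the issue text."""
    words = set(text.lower().split())
    return [aid for aid, body in kb.items() if words <= set(body.lower().split())]


def handle_case(case_id: str, issue_text: str, kb: Dict[str, str],
                search: Callable[[str, Dict[str, str]], List[str]]) -> Case:
    """KCS-style flow: search first, reuse (link) an existing article, else create one."""
    hits = search(issue_text, kb)
    if hits:
        return Case(case_id, linked_article=hits[0])
    new_id = f"kb-{len(kb) + 1}"
    kb[new_id] = issue_text  # in practice, a draft article captured in the customer's words
    return Case(case_id, linked_article=new_id, created_article=True)


def attach_rate(cases: List[Case]) -> float:
    """Share of closed cases with an article attached."""
    return sum(c.linked_article is not None for c in cases) / len(cases)


def reuse_rate(cases: List[Case]) -> float:
    """Among cases with an attached article, the share that reused an existing one."""
    attached = [c for c in cases if c.linked_article is not None]
    return sum(not c.created_article for c in attached) / len(attached) if attached else 0.0
```

A case closed without following the workflow (no article linked) simply lowers the attach rate, which is the signal the coaches' monthly reviews are meant to catch.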
This is where Coveo helped us solve two big use cases: one for our internal users and one for our external customers. Externally, we have community forums, which is a Q&A hosted on Salesforce; knowledge articles, also hosted on Salesforce; documentation, which at this point is in Zoomin; and all the case data. We were able to use Coveo to index all those different sources, which makes both internal and external search easy, and we get pretty consistent results no matter where you search, all in one place. So, starting at the bottom, we now had a solid knowledge foundation with KCS and documentation in place. Our search was working really well at this point: the right links were being surfaced, and our deflection was pretty good. Then we saw Coveo's GenAI in action, and we immediately knew it was a must-have. We were excited about GenAI from the start, and we were one of the first companies to participate in Coveo's beta testing. The numbers were literally hard to believe. We took two weeks of our case data and, in the beta, ran the questions exactly as our customers had asked them. We saw answers generated for seventy percent of them, and from that point, we just knew. As soon as we got the budget approval and all the internal approvals, we wanted GenAI on our community. That's how our CRGA journey started, and we went GenAI everywhere. We have four different search hubs. The first is community search, which we call full search: all of our internal users and customers start with community search. From there, we put GenAI on the case creation page, which is where our customers come in to submit cases.
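One index serving both internal and external hubs only works if results are filtered by permission at query time. The following is a simplified, hypothetical Python sketch of that idea: a single index whose documents carry an allowed-groups set, with a "public" group that every caller, including anonymous users, belongs to. It is not how Coveo implements document-level security; the source and group names are invented for illustration.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List


@dataclass(frozen=True)
class Doc:
    doc_id: str
    source: str                # hypothetical source label, e.g. "salesforce-kb", "jira"
    text: str
    allowed: FrozenSet[str]    # groups allowed to see the doc; "public" means anyone


class UnifiedIndex:
    """One index for every source; results are trimmed to what the caller may see."""

    def __init__(self) -> None:
        self.docs: List[Doc] = []

    def add(self, doc: Doc) -> None:
        self.docs.append(doc)

    def search(self, query: str, user_groups: Iterable[str] = ()) -> List[str]:
        groups = set(user_groups) | {"public"}  # everyone, even anonymous users, is "public"
        terms = query.lower().split()
        return [
            d.doc_id
            for d in self.docs
            if d.allowed & groups and all(t in d.text.lower() for t in terms)
        ]
```

Filtering inside the index, rather than maintaining separate internal and external indexes, is what keeps answers consistent across hubs while still hiding internal sources like Jira from customers.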
I would say about ninety-five percent of customers visit the case creation page. The third hub is our internal search, where we have all our other sources, like Jira, Confluence, and Academy, everything for internal use, and we put GenAI on top of that as well. The fourth is the agent panel within Salesforce, the case management tool for our support teams. So we have GenAI everywhere, and you get a consistent answer no matter which search hub you use. Even though we have two different machine learning models, one for internal use and one for external use, we're getting consistent results. Overall, across all the hubs, GenAI answers are displayed for about sixty percent of queries, and about seventy percent of users engage with those responses. I want to share a couple of key learnings. We analyze a lot of the data in our query reporting to see what we can do to push that sixty percent higher, whether there are knowledge gaps, and which kinds of queries get generated answers versus which don't. One thing I've realized is that the answer depends a lot on how you ask. Some queries are just a single word, or a big paragraph with a lot of information, and that's where we see no generated answer displayed. AI is getting better day by day as we speak. I definitely believe we cannot train our end users on how to use AI; we have to depend on the tools getting smarter and better over time, which I definitely see happening.
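The two headline numbers here (answers displayed for about sixty percent of queries, and about seventy percent engagement among those) and the observation about single-word and paragraph-length queries can both be computed from a query log. The sketch below assumes a hypothetical log format with `answer_shown` and `engaged` flags; the word-count thresholds for "single word" and "long paragraph" are arbitrary assumptions.

```python
from typing import Dict, List, Optional, Tuple


def answer_metrics(log: List[dict]) -> Tuple[float, float]:
    """Return (share of queries with a generated answer, engagement rate among those)."""
    shown = [e for e in log if e["answer_shown"]]
    display_rate = len(shown) / len(log)
    engagement_rate = sum(e["engaged"] for e in shown) / len(shown) if shown else 0.0
    return display_rate, engagement_rate


def display_rate_by_length(log: List[dict], short_max: int = 1,
                           long_min: int = 40) -> Dict[str, Optional[float]]:
    """Answer display rate bucketed by query word count (thresholds are assumptions)."""
    buckets: Dict[str, List[bool]] = {"single word": [], "typical": [], "long paragraph": []}
    for e in log:
        n = len(e["query"].split())
        if n <= short_max:
            key = "single word"
        elif n >= long_min:
            key = "long paragraph"
        else:
            key = "typical"
        buckets[key].append(e["answer_shown"])
    # None marks an empty bucket rather than pretending the rate is zero.
    return {k: (sum(v) / len(v) if v else None) for k, v in buckets.items()}
```

Breaking the display rate down this way is one simple method for checking whether unanswered queries cluster at the extremes of query length, as Ranvir observed.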
But overall, I believe prompt engineering is really critical; it makes a huge difference. Our software is pretty complex, so if you type in, for example, "I'm getting this error on Coverity" and give it some context, chances are you'll get a much better answer. Now let's dive into the analytics. These are our first thirty days after going live with GenAI: we've seen a six percent decrease in case submission, a forty-three percent increase in self-service success rate, and a huge increase in the explicit case deflection rate as well. With GenAI, I think even the definition of case deflection changes a little, and it gets tricky. I get asked about this a lot internally, so I want to dive deeper into how we measure case deflection. We compare sessions where answers are shown with sessions where answers are not shown. This slide is about our case submit page. When customers are there, they select their product, and based on that selection and the title and description they type in, an answer may be shown. Where an answer is shown, deflection has jumped to fourteen point seven percent in just thirty-eight days after go-live. Where answers are not shown, deflection is at four percent. That's a change of two hundred sixty-eight percent. I wanted to explain this because there is still some confusion, with CRGA, about how deflection is measured, which is why I wanted to take a deep dive into it.
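The percentages in this section are relative changes between the "no answer shown" and "answer shown" groups, not percentage-point differences. A small Python helper makes the arithmetic explicit.

```python
def relative_change(before: float, after: float) -> float:
    """Percent change from `before` to `after` (not a percentage-point difference)."""
    return (after - before) / before * 100
```

Applied to the spoken figures, `relative_change(4.0, 14.7)` gives about 267.5, which rounds to the two hundred sixty-eight percent quoted, even though the gap is only 10.7 percentage points. The same helper applied to the self-service success figures that follow, `relative_change(49, 70)`, gives about 42.9, matching the forty-three percent lift. For case submission, the rounded values three point nine and three point seven give about minus five point one percent; the quoted six percent presumably comes from unrounded data.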
So it's basically no answers shown versus answers shown. General deflection works like this: any time customers visit our case submission page with the intention of submitting a case and start filling in the form, if they don't submit the case within twenty minutes, that case counts as deflected. Okay. The second metric we've been looking at is self-service success, overall, on our main community. Where no answers are shown, the case submission rate is three point nine percent, and where an answer is shown, it's three point seven percent, so we see a six percent reduction. Self-service success where no answers are shown was forty-nine percent, and where answers are shown, it went up to seventy percent. That's a big jump, forty-three percent. We use a very similar definition here. With no answer shown, self-service success is the percentage of search sessions in which customers clicked on at least one search result but did not submit a support case, calculated by dividing those sessions by the total number of search sessions. With an answer shown, it's the percentage of search sessions in which customers interacted with the content, such as clicking search results, viewing a citation, giving a thumbs up, or copying information, and did not submit a support case, again divided by the total number of search sessions. Technically, I think our numbers could be even higher, because a lot of times, personally, when I do a Google search and get an AI-generated answer, that's sometimes good enough. I don't even have to interact with the answer.
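Ranvir's two definitions can be written down as code. The sketch below is a hypothetical encoding of the rules as described: a session counts as deflected if no case is submitted within twenty minutes of starting the form, and self-service success requires at least one result click (no answer shown) or any listed engagement such as a click, citation view, thumbs up, or copy (answer shown), with no case submitted. The event names and session record shape are assumptions.

```python
from datetime import datetime, timedelta
from typing import List, Optional

DEFLECTION_WINDOW = timedelta(minutes=20)

# Hypothetical event names for the engagement types mentioned in the talk.
ANSWER_ENGAGEMENTS = {"result_click", "citation_view", "thumbs_up", "copy"}


def is_deflected(form_started: datetime, case_submitted_at: Optional[datetime]) -> bool:
    """Deflected if no case was submitted within the window after starting the form."""
    if case_submitted_at is None:
        return True
    return case_submitted_at - form_started > DEFLECTION_WINDOW


def self_service_success_rate(sessions: List[dict], answer_shown: bool) -> Optional[float]:
    """Share of search sessions (in one group) that engaged and did not submit a case."""
    group = [s for s in sessions if s["answer_shown"] == answer_shown]
    if not group:
        return None
    # Without an answer, only a result click counts; with one, any listed engagement counts.
    needed = ANSWER_ENGAGEMENTS if answer_shown else {"result_click"}
    successes = [s for s in group if s["events"] & needed and not s["case_submitted"]]
    return len(successes) / len(group)
```

A session with an answer shown but no interaction counts as a failure here, which is the conservative choice Ranvir describes; counting passive reads would raise the reported numbers.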
If it's a quick answer, you see it, and you don't really have to interact. But we wanted to be really sure, so even where answers are shown, we track whether customers interact, and only then do we count the session in our deflection or self-service success metrics. Alright. Looking ahead, we're excited about our next phase. We started with Coveo's AI search using machine learning, then moved on to GenAI, and now we want to advance to agentic AI, which we've already kicked off. The first initiative, already in testing, is about increasing our knowledge while saving time for our TSCs: we're testing automated AI knowledge creation with an in-house tool. In testing, the fifteen to twenty minutes an article takes on average can be reduced to about two minutes. The second, also in testing, is agentic AI using Copilot and Coveo's search API. Since all our content is already in Coveo, we're using their API to test an AI agent that, in the future, we'd like to have on our community and case contact pages. That testing has already kicked off, and it's looking good. The third thing we want to focus on is our case submit page. At the moment, our GenAI answer displays on the right-hand side. Although we see high interaction, with about seventy percent of customers interacting, we want to increase that. To do that, we want to explore Case Assist, another feature Coveo provides.
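Black Duck's knowledge-creation tool is in-house and not described in detail, so the following is only a generic, hypothetical Python sketch of the approach: turn a resolved case into a prompt asking a text-generation model for a draft using the standard KCS article fields (Issue, Environment, Resolution, Cause), then flag missing sections and route the draft to human review. The `generate` callable and the case field names are assumptions; no specific model or API is implied.

```python
from typing import Callable, Dict

KCS_SECTIONS = ("Issue", "Environment", "Resolution", "Cause")


def build_draft_prompt(case: Dict[str, str]) -> str:
    """Turn a resolved case into instructions for a KCS-structured draft."""
    return (
        "Draft a knowledge article from this resolved support case. "
        f"Use exactly these labeled sections: {', '.join(KCS_SECTIONS)}. "
        "State the Issue in the customer's own words.\n\n"
        f"Subject: {case['subject']}\n"
        f"Product/version: {case['product']}\n"
        f"Customer description: {case['description']}\n"
        f"Resolution notes: {case['resolution_notes']}"
    )


def draft_article(case: Dict[str, str], generate: Callable[[str], str]) -> dict:
    """`generate` is any text-generation function (hypothetical); output always needs review."""
    draft = generate(build_draft_prompt(case))
    # Flag any KCS section the model left out so a reviewer can fill it in.
    missing = [s for s in KCS_SECTIONS if f"{s}:" not in draft]
    return {"draft": draft, "missing_sections": missing, "status": "needs_review"}
```

Keeping a human review step in the loop preserves the KCS quality checks described earlier while the model handles the first draft.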
That way, the GenAI answers can display in the middle of the screen before customers hit submit, and we hope to increase interaction even further. Then there's in-product support, IPX. At this point, we have about five or six SaaS products. We've already gone live with IPX for one of them, and we're testing enabling CRGA there as well. As soon as that goes well, we'll move on to our other SaaS products so they can have IPX with CRGA too. With that, I'll pass it back to Vanessa.

Okay. Thank you all so much for the presentation. We have a few minutes left for Q&A. As a reminder, if you have any questions for our speakers, please enter them in the Ask a Question box on the upper left-hand side of your screen. We'll get through as many questions as time allows. We have a few already, so I'll jump right in. This first one is from Krista: what role did KCS play in enabling those GenAI gains?

Oh, I'll take that one. KCS played a big role in enabling our GenAI gains. It helped us build a strong foundation of structured, high-quality knowledge over time. We started with KCS about five years ago, so our foundation was in place, and that, I believe, is the key. Within a couple of years of implementing KCS, we were able to triple our knowledge base. And it continues to grow because of the structured KCS workflow: every case we handle goes through the KCS workflow that every TSC follows. I would say that without KCS, I just don't see how we could have achieved these numbers.

Okay. Our next question is actually for Coveo, so, Gavin, this is probably for you.
It comes from Brian, and they ask, what makes Coveo's passage retrieval stack uniquely suited to plug into a mature KCS program?

It's a great question. You heard Ranveer talk a lot about our API suite and CRGA, which, you can tell she's a Coveo customer because she's using lots of acronyms, is really our managed offering for RAG: Relevance Generative Answering. But I'd say at the heart of Coveo is really good relevance and really good retrieval on top of a unified index. The way that plays into KCS is that it builds on and strengthens the foundations of your already structured knowledge management practices. Right? It's unifying and normalizing that content, bringing together things from your Zoomin, your Confluence, your Salesforce, your Jira, and whatever else you need to connect. And it ensures that your generated answers can cite authoritative, KCS-compliant sources without any duplication or confusion. It can do that while adhering to document-level security permissions, allowing a single index to power both your authenticated and your unauthenticated experiences, which is something that's really hard to do in custom Gen AI implementations. So you end up getting grounded, contextual answers that are founded in your knowledge through our hybrid ranking architecture. Right? We're blending things like keywords, semantic understanding, and behavioral signals, and ensuring that those AI answers stay accurate, relevant, and permission-aware. Sorry, Vanessa, but I think it's also very important to note, especially around KCS, the feedback loop. Right? You heard Ranveer talk about this, but those real-time engagement signals, the thumbs up, the thumbs down, the copy, the engagement with the content and the citations, flow directly into Coveo's analytics.
And this makes it really easy for knowledge managers to identify article gaps, measure reuse, and fuel continuous improvement in their Solve and Evolve loop best practices, and to use those analytics as a way to keep support engineers engaged in creating more content, because they can see the impact they're having.

Okay. I'm gonna go ahead and squeeze in one last question here. This question is from Sean, and it says, what best practices separate firms that plateau on deflection from those that break through with Gen AI? Does anybody have any thoughts on that one?

I'll take that one. With Gen AI, again, because AI is everywhere these days, I think we are getting so used to moving away from clicks and getting a quick answer. That's what really helps, and that's why we almost doubled our deflection on our case contact page. Sometimes our documentation could be ten pages long, but you're just looking for a very specific how-to. You don't need twenty steps. So when customers try to submit a case, they see a nice, quick summary of their answer drawn from the documentation. That's why we see huge interaction: seventy percent of our customers are interacting with that content, because now it's literally narrowed down. I remember when I first joined as a KCS manager here, I was recommending to the support engineers: I know we have a lot of content and documentation, but if you see how a customer is asking, let's start creating micro articles. In a way, indirectly, I wanted to create that agentic experience for our customers.
That way, if they're asking a simple question, we don't have to give them twenty pages of documentation. I think that is the power of Gen AI. It literally understands the big picture, breaks it down, and gives you a simple answer that anybody can take and use to resolve their issue. That's the advantage of having Gen AI.

Okay. Well, thank you all so much. I know there are a couple of questions we weren't able to answer live, but don't worry. We haven't forgotten about you, and we will follow up. Since we have come to the conclusion of today's webinar, I just have a few more reminders before we sign off. There will be an exit survey at the end, and we'd appreciate it if you could take a few minutes to share your feedback on the content and your experience by filling out that survey. We also encourage attendees to learn more from the resources on the left-hand side of your screen. You'll find a one-on-one deflection assessment, the Black Duck case study, and additional TSIA resources there as well. A link to the recorded version of today's webinar will be sent out to you shortly. Now I'd just like to take this time to thank our speakers, Gavin, Ranveer, John, and Dave, for delivering an outstanding session. A special note of appreciation to John, who is leaving TSIA after nineteen remarkable years. Today's webinar marks his final one with us, and we want to sincerely thank him for his incredible contributions, leadership, and dedication. You've left a lasting impact, and you will be truly missed. And of course, thank you to everyone for joining us for AI-Powered Deflection: Black Duck's forty-three percent self-service lift, brought to you by TSIA and sponsored by Coveo. We look forward to seeing you at our next TSIA webinar. Take care, everyone.

AI-Powered Deflection: Black Duck’s 43% Self-Service Lift

Generative AI alone doesn’t scale support—strategic placement, data-informed design, and great execution do.

Black Duck, a leader in open-source security and compliance, didn’t start from scratch. They had a mature AI-search experience, but like many TSIA members, hit a deflection plateau. That’s when they turned to generative answering. The result? A 39% increase in explicit case deflection and a 43% boost in self-service success.

In this 45-minute, substantive session, experts from Black Duck, TSIA, and Coveo will unpack how they leveled up their digital support experience—by embedding AI where it matters most, and delivering measurable impact.

What you’ll learn:

- How Black Duck boosted case deflection by 39% and improved self-service success by 43%.

- Where to embed generative answering for maximum deflection ROI.

- TSIA’s latest research on AI adoption and support experience trends.

- Best practices from over 50 enterprise GenAI deployments with Coveo.

Whether you’re looking to enhance your existing AI investments or kickstart a smarter self-service strategy, this session will leave you with proven insights and practical next steps.
