
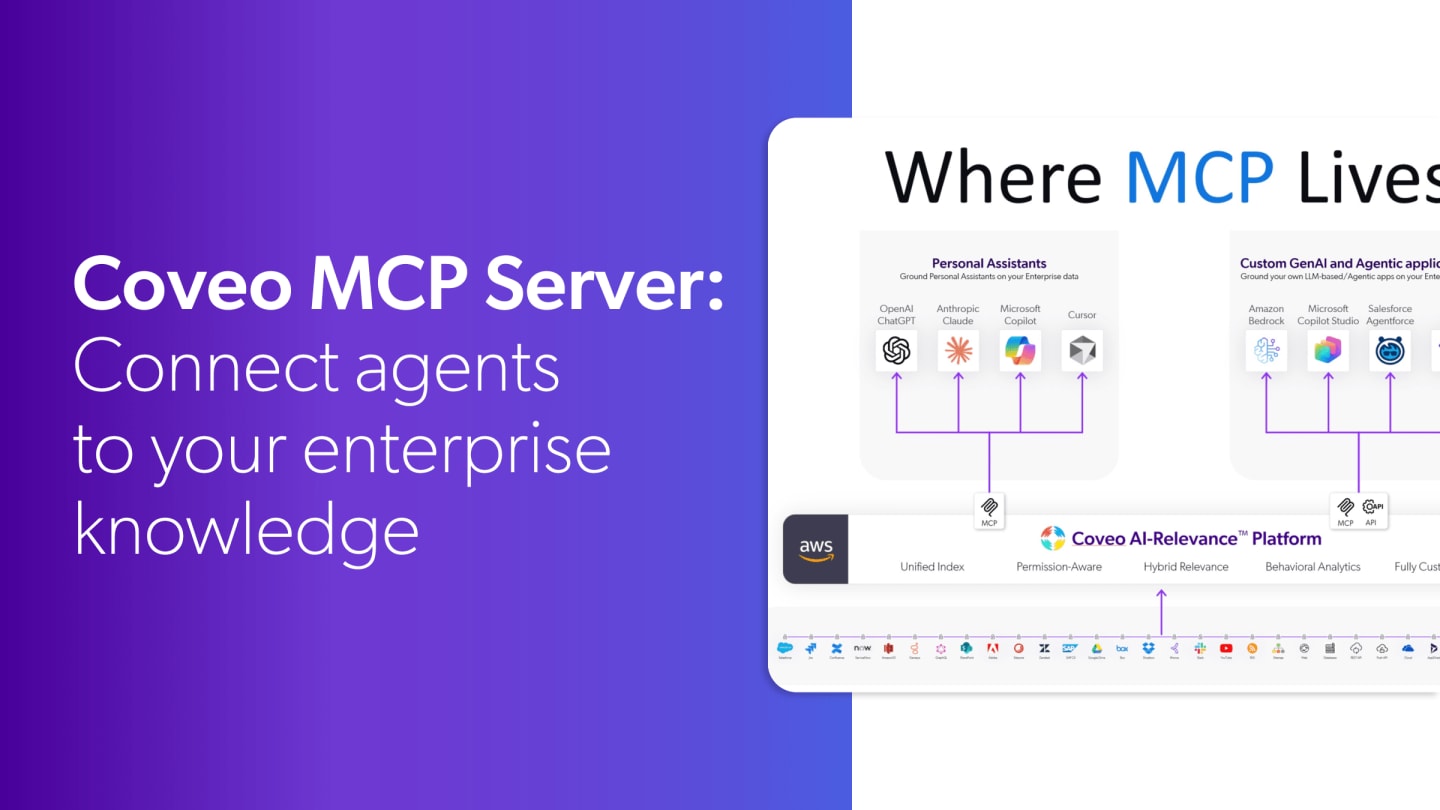

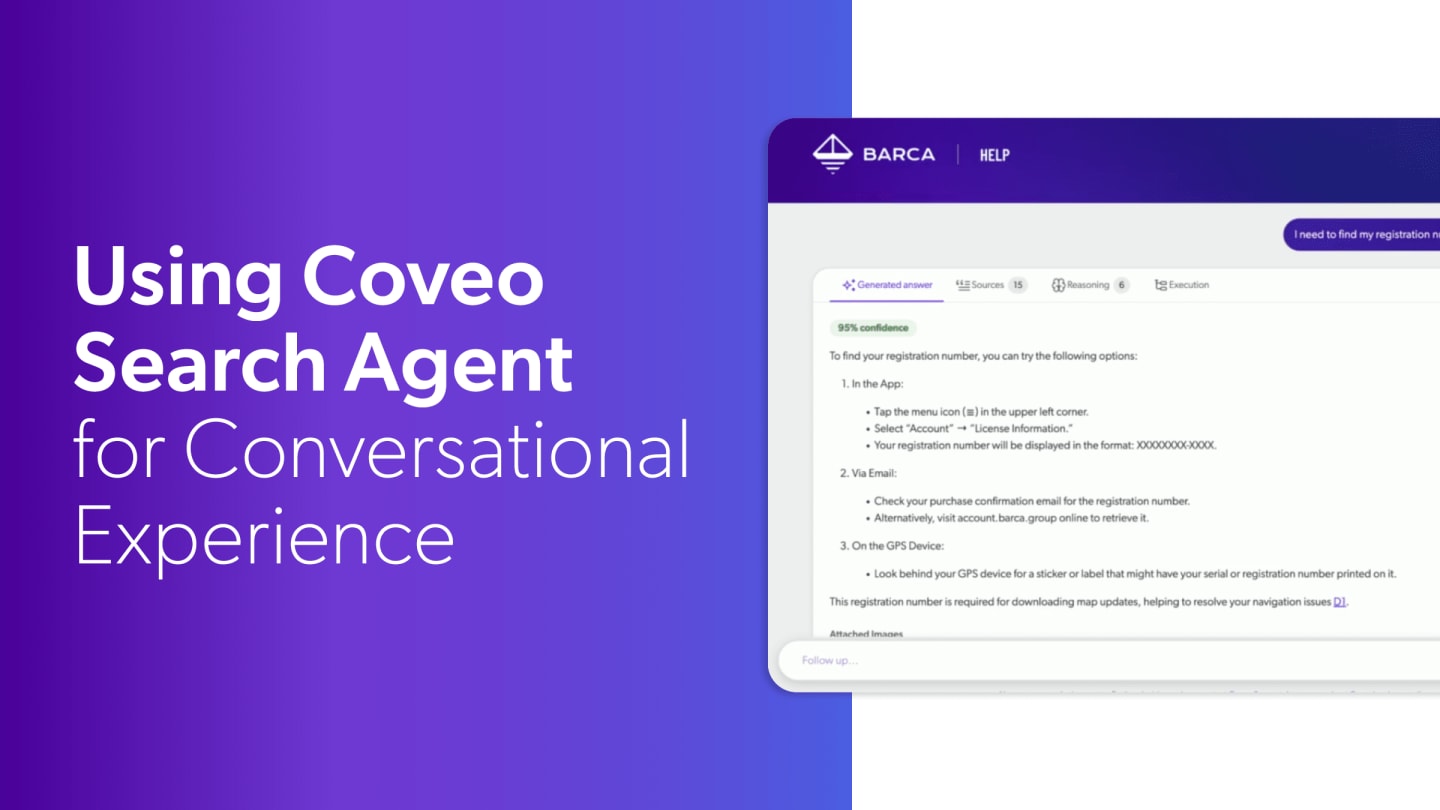

You know when you're using a powerful AI model like Claude, ChatGPT, or Copilot, and a classic meme kicks in and you say to yourself, what kind of sorcery is this? It's helping you vibe code. It's summarizing historical logs. It feels like the speed of business has finally gotten a boost to catch up to the pace of technology.

And then you ask it something that actually matters in your job, something specific, like: what's the liability clause in the Project Alpha contract from 2023? And the magic instantly evaporates. The model either refuses to answer or, worse, confidently hallucinates a generic clause that has nothing to do with your business. Not because it's dumb, but because it's locked out. The LLM is standing on the sidewalk, staring up at your office building with no key card to get inside. It has incredible reasoning power, but zero context into your reality. That's the problem we're here to solve.

Hey there, I'm Scott Ferguson, and I do product stuff at Coveo. Today we're talking about Coveo's hosted Model Context Protocol (MCP) server and how it bridges the gap between large language models and your corporate knowledge. Going back to my earlier example of being locked out, our MCP mission is simple. How do we give an AI agent the key card? How do we help it stop guessing and start knowing, without requiring a team of a dozen engineers and months of custom infrastructure work? Spoiler: it's right in the name. The hosted part is doing a lot of the heavy lifting.

At its core, the hosted MCP server is all about connecting an LLM's reasoning ability to your organization's memory securely, reliably, and with minimal friction. A helpful way to think of it is as a localization librarian. On one side, you have the LLM. It speaks fluent human. It understands nuance, intent, and even ambiguity to a degree, but it doesn't speak database. It has no idea how to query your specific indexes.
On the other side, you have your company's knowledge scattered across SharePoint, Salesforce, Confluence, and a dozen other systems. The MCP server sits in the middle. So when you ask it, I need the Project Alpha contract, the librarian knows exactly where to look, retrieves it, and hands it back in a format that you can understand. That standard interface, that low-friction layer, is the key.

And this is where the hosted part really matters. The reality here is that while interoperability still isn't an easy term to remember, it's at least now an easy thing to achieve. Historically, building this kind of middleware meant spinning up several servers, managing infrastructure, handling authentication, maintaining APIs, and absorbing the operational pain that comes with all of it. You essentially became a system integrator just to make a chatbot useful. With Coveo's hosted MCP server, that burden disappears. Coveo provisions it. Coveo maintains it. There's no server to manage. No indexing pipelines to babysit. For IT and platform teams, that alone is a big deal.

But this doesn't live inside an isolated search box or a dialogue window. The hosted MCP server integrates directly with the LLM tools people already use. It could be Claude, it could be ChatGPT, Copilot, even an IDE like Cursor. Imagine a developer is stuck on an internal proprietary library that was written years ago. The usual workflow is to check Git, open a browser, search a wiki, lose your flow, and hope the documentation you find is actually still accurate. With MCP, the agent can pull that internal documentation directly into your workflow. You ask, how does this internal function work? And the answer is sourced from your secure enterprise content without you ever leaving your IDE. That's a genuine productivity shift. The knowledge comes to where the work is done.

And now let's pop the hood and talk about how this actually works. The hosted MCP server exposes a toolkit to the agents.
Here, you're not just prompting for text, you're giving AI agents tools and letting them decide how to use them. Out of the box, we're launching with four core tools.

The first is Search. This wraps Coveo's Search API and is used for broader discovery, surveying what relevant content exists on a topic.

The second is Fetch. This is like the scalpel. Once the agent knows which document it needs, Fetch retrieves the full content, in HTML or Markdown, as required. Search finds the book title, Fetch reads the book.

The third is Answer, which triggers Coveo's Generative Answer. This synthesizes a natural language response directly from the indexed content via our Retrieval Augmented Generation process, also known as RAG.

And I saved the coolest for last. The fourth is Passage Retrieval, which is all about that double e, efficiency and effectiveness. When you only want the one paragraph that matters from a 50- or 500-page manual, the tool delivers just the relevant chunk.

What's powerful is not just the tools themselves, but how the agent uses them. Consider a real-world walkthrough where the user asks a question like, what is this AWS Data Plane Agent 1.8.0? The agent starts by trying to be efficient. It uses Passage Retrieval, but maybe it doesn't get the results it needs there. In a rigid system, that's the end of the road. But with MCP, the agent pivots. It broadens its approach and switches to Search. This time, it retrieves relevant documents. From there, it uses Fetch to pull that content and read the details. Then it realizes something important. The internal documentation is actually incomplete. So for that specific version, it fetches a document and cross-references it to fill in the gaps, blending internal proprietary documentation with publicly sourced info. You don't always have to go this way, but it's really effective. Finally, it synthesizes everything into a single coherent answer. That's not scripted. That's reasoning.
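
To make the walkthrough concrete, here is a minimal Python sketch of the fallback it describes: try Passage Retrieval first, and if that comes back empty, broaden to Search and then Fetch. Everything in it is an assumption for illustration: the in-memory `CORPUS`, the stub functions `passage_retrieval`, `search`, and `fetch`, and the `research` helper are hypothetical stand-ins, not Coveo's API. In the real flow the agent picks the tools itself; the order is hard-coded here only to show the shape of the pivot.

```python
from dataclasses import dataclass


@dataclass
class Document:
    doc_id: str
    title: str
    body: str


# Hypothetical in-memory stand-in for indexed enterprise content.
CORPUS = [
    Document("kb-1", "Data Plane Agent release notes",
             "Version 1.8.0 adds retry support. Version 1.7.0 fixed logging."),
    Document("kb-2", "Office parking policy", "Visitors park on level 2."),
]


def passage_retrieval(query: str) -> list:
    """Stub tool: return sentences that contain every word of the query."""
    words = query.lower().split()
    hits = []
    for doc in CORPUS:
        for sentence in doc.body.split(". "):
            if all(word in sentence.lower() for word in words):
                hits.append(sentence.rstrip("."))
    return hits


def search(query: str) -> list:
    """Stub tool: return documents that mention any word of the query."""
    words = query.lower().split()
    return [
        doc for doc in CORPUS
        if any(word in (doc.title + " " + doc.body).lower() for word in words)
    ]


def fetch(doc_id: str) -> str:
    """Stub tool: return the full body of one document."""
    for doc in CORPUS:
        if doc.doc_id == doc_id:
            return doc.body
    raise KeyError(doc_id)


def research(query: str) -> dict:
    """Try the narrow tool first; broaden to search + fetch if it finds nothing."""
    passages = passage_retrieval(query)
    if passages:
        return {"tools_used": ["passage_retrieval"], "evidence": passages}
    documents = search(query)
    evidence = [fetch(doc.doc_id) for doc in documents]
    return {"tools_used": ["passage_retrieval", "search", "fetch"],
            "evidence": evidence}
```

Calling `research("1.8.0 retry")` is answered by the passage stub alone, while `research("agent 1.8.0 features")` finds no single passage and falls through to the search and fetch stubs.
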
The agent behaves like a research assistant, checking one drawer, moving to another, validating sources, and assembling the final insight.

Finally, let's talk setup. The UI is aggressively low code. You name your server, you select an existing query pipeline, and you add your tools. Authentication is straightforward: OAuth for secured content, or anonymous API keys for public content. Once it's configured, you paste the new endpoint into your LLM orchestrator of choice, and Coveo's MCP server shows up as an available tool. No Swagger files, unless that's your preferred MO. No custom middleware, no infrastructure drama.

If you're building a basic FAQ bot, use the API. If you want a research partner, use MCP. Coveo's hosted MCP server democratizes access to enterprise knowledge without forcing teams to become infrastructure experts. It lets AI do what it was always meant to do: reason, explore, and help people get real work done. Experiencing it doesn't get any easier than this, so come check us out. Plug it in. Watch your AI agents in action.
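
For a sense of what an orchestrator sends once the endpoint is pasted in, here is a small Python sketch. MCP tool invocations are JSON-RPC 2.0 messages that use the `tools/call` method, so the sketch only builds that message and sends nothing over the network. The endpoint URL, the tool name `search`, the argument name `query`, and the bearer-token header are assumptions for illustration; the real values come from your own server configuration.

```python
import json
from typing import Optional

# Hypothetical endpoint; the real URL is the one shown after configuration.
MCP_ENDPOINT = "https://example.invalid/mcp"


def build_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Build a JSON-RPC 2.0 'tools/call' message as a JSON string."""
    payload = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(payload)


def build_headers(token: Optional[str] = None) -> dict:
    """Build request headers; the bearer scheme here is an assumption."""
    headers = {"Content-Type": "application/json"}
    if token:
        headers["Authorization"] = "Bearer " + token
    return headers


# Tool and argument names are hypothetical placeholders.
message = build_tool_call(1, "search", {"query": "Project Alpha contract"})
```

In practice the orchestrator handles this exchange for you, which is the point of the low-code setup; the sketch only shows what crosses the wire.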

Coveo MCP Server | AI-Powered Search & Generative Answering for Developers
