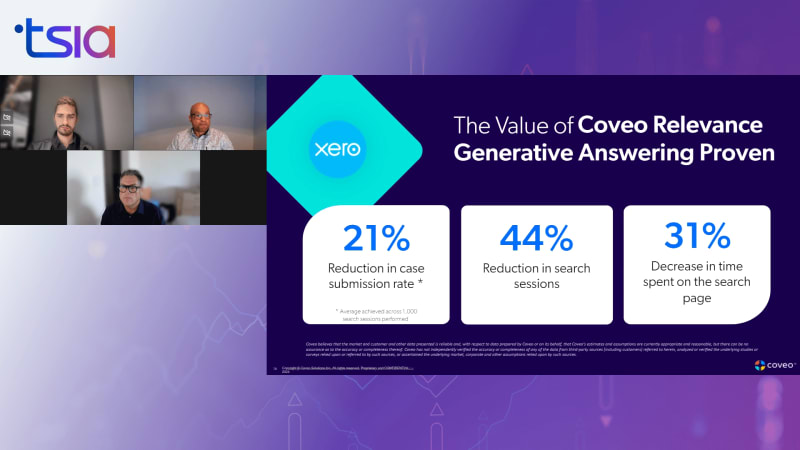

Hello, everyone, and welcome to today's webinar, Experience GenAI in Action and Discover Its ROI Potential, brought to you by TSIA and sponsored by Coveo. My name is Vanessa Lucero, and I'll be your moderator for today. Before we get started, I'd like to go over a few housekeeping items. Today's webinar will be recorded, and a link to the recording will be sent to you via email. Audio will be delivered via streaming. All attendees will be in listen-only mode, and your webinar controls, including volume, are found in the toolbar at the bottom of the webinar player. We encourage your comments and questions. If you think of a question for the presenters at any point, please submit it through the Ask a Question box in the top left corner of the webinar player, and we'll open it up for a verbal Q&A at the end of today's session. Lastly, feel free to enlarge the slides to full screen at any time using the options in the top right corner of the slide player. I would now like to introduce our presenters: John Ragsdale, distinguished researcher and vice president of technology ecosystems for TSIA; Angelo Sandeford, senior director of global services operations for Coveo; and Mathieu Lavoie Sabarin, product manager, also with Coveo. As with all of our TSIA webinars, we have a lot of exciting content to cover in the next forty-five minutes, so let's jump right in. John, over to you.
Well, thank you, Vanessa. Hello, everyone, and welcome to today's webinar. There is no denying that the introduction of ChatGPT, not even two years ago, has completely changed the picture of AI for everyone. It's something we can use in our personal lives as well as our business lives. Support was very early in testing generative AI, or GenAI, and we've had a lot of questions about use cases. Now that people are adopting and understanding the technology, they are asking about ROI.
Where is the ROI coming from? What does the business value look like? That's what we're going to talk about today, and our guest speakers from Coveo will share some customer case studies with some pretty dramatic results. As everyone knows, the big change is that instead of the traditional search bar, GenAI makes a conversational experience with customers possible. Instead of trying to figure out how to ask the right question, customers can have a conversation with the GenAI tool to get the information they need. This introduces a completely new experience for both employees and customers. Tech companies understand that GenAI has huge potential for transforming their knowledge management program. Data from a survey we conducted last year shows that sixty-four percent expect GenAI to have an impact on their KM program, and twenty-seven percent expect it to be a major impact. We are seeing this take place in multiple ways. First, identifying missing content and automating the creation of that knowledge. Second, more contextual content suggestions: no more one size fits all, because suggestions can be based on a particular account or on the information included in a support case. Third, as I mentioned, a much more conversational experience, which is a much more natural approach for customers attempting self-service. And we're also seeing companies begin to automate some workflows and recommend the next best action. As I said, support was early in line for experimenting with GenAI: seventy-two percent of our support members were already using it for agent-facing use cases, and fifty percent have begun experimenting with self-service use cases.
One thing that is often a roadblock, though, is the sheer number of requests coming into IT. As you can see on the right, twenty-four percent say there are more than ten AI pilots going on, and nineteen percent say it's hard to know how many are going on. IT often prioritizes these projects based on business impact. So I'm really happy that today we're digging into actual results from companies that were pacesetters in adopting GenAI, so you can understand where the business value comes from, how quickly it arrives, and how much ROI you can expect. I have just completed my 2024 tech stack surveys, and here is some new data: so far, eighty-two percent of companies are already using knowledge management and intelligent search. Eighty-six percent are shopping. Many of those companies are looking to their intelligent search provider to add a GenAI layer that sits on top of all the machine learning and analytics already collected about frequently asked questions, the top content being used, and so on. I'll also note, at the bottom of the slide, that planned spending is high across all company sizes. A decade ago, this was a big-company solution that smaller firms were slower to adopt. Now, even among the smallest companies, those with less than a hundred million dollars in revenue, seventy-five percent have planned spending on analytics-based search. So I'm very happy to have our guest speakers from Coveo here to dive into real-world business value. Angelo, may I turn it over to you?
Absolutely. Thank you, John. I decided to kick off my portion of the presentation with what I consider to be a bold statement.
Maybe not so bold for some, but, surprisingly, many out there would still consider it a bold statement. The statement is this: customer experience is the competitive differentiator. When you ask yourself why it is the differentiator, the answer is simple: eighty-nine percent of companies out there compete on customer experience. Eighty-nine percent is a very large number, and the fact that companies compete not on their product alone but on their customer service is something we really need to consider. Why is that? Simply put, buyers have choice. Today, what truly sets a company apart is brand loyalty and the experience it delivers. Some of the research I found on what customers have to say is quite intriguing: seventy-three percent of consumers say they would rather do business with a competitor after more than one bad experience, and seventy-five percent of consumers will do business with a company again if its customer experience is excellent. So consumers' tolerance for poor experiences is very low today. However, when we deliver an exceptional customer experience, customers are willing to give us the opportunity to keep serving them.
Now, what does a poor customer experience represent in terms of risk? Research also shows that three point seven trillion dollars in revenue is at risk in 2024 for companies that don't focus on a strong digital customer experience. So what constitutes a remarkable digital experience? It's based on our users' expectations, and what are those? First, they're looking for a personalized, just-for-me experience. They want what they want quickly, based on their behaviors, preferences, intent, and demographics, and that equates to greater engagement, satisfaction, and loyalty. Second, they're looking for relevancy and efficiency: they want it fast and they want it right. Nobody has time to waste when searching; we're all consumers, and we all know we're one click away from the next tab and the next vendor. Third, we're moving from prescriptive to advisory: enhancing the business decision-making process by tailoring the experience to the user's intent, actively guiding and advising them to make the optimal decision for their needs in that specific moment. Fourth, customers expect a secure and private experience, where their data is protected and their personal information stays confidential. In today's day and age, when we do everything online, from banking to searching for what we'll buy next, we definitely need that security when browsing the web and looking for our next option. The final piece is a coherent journey.
Customers are looking for a transparent, channel-agnostic journey. Regardless of their point of entry, they want it to be consistent in branding, functionality, content, and quality, and we need to take that into consideration when we look at the digital experience. So what is the key to achieving this customer differentiation? The business-to-persona model will not help you achieve customer experience differentiation, because it isn't individualized and can't drive a truly personalized experience. What we need to move toward now is a business-to-person approach to delivering these experiences to our customers, and the only way you can really do that is with AI and GenAI. One thing to consider is that delivering this experience means looking at it from a full customer journey perspective, including self-service. Mathieu will walk you through what that looks like across the service journey.
Awesome. Thank you, Angelo. So yes, AI and GenAI are, of course, the main competitive differentiators, but how do they apply, and what value can they deliver across the entire customer experience? When we think of the customer service, self-service, or support experience as a whole, it follows a chronological path: the user first tries to self-serve, and at some point they may submit a case, at which point the journey becomes an assisted service journey where agents step in to help.
Across that journey, there are different touch points the user may access: search and relevant help delivered within a product, help delivered in a community via knowledge, or help delivered via a support portal. Finally, that same help and the power of GenAI can also be delivered within a service console, giving agents access to the same intelligence to improve the user experience and solve cases faster. The value this can bring is considerable. Issue anticipation and issue avoidance can represent significant cost savings for organizations using AI in those use cases. Better self-service and case deflection significantly reduce cost to serve by delivering AI-powered resolutions. And for higher-cost use cases, where an agent is actually solving your case, GenAI can improve agent efficiency and enhance resolution, which also delivers tangible value. So what do those use cases look like? Before I go into the demo and show how we've deployed GenAI into our own service and self-service journey at Coveo, let me highlight some other ways it can be delivered. GenAI can be delivered within a SaaS-based product, for example. We work with a lot of SaaS companies, and, of course, they want their clients to spend most of their time within their product, so bringing GenAI resolutions directly into the product can bring significant value. Of course, some users will then need to visit a website or support portal to get more help.
So delivering that same generative answering experience within a website, or even an employee portal, can bring tremendous value. For asynchronous support organizations, creating a case means going through a case form, which is another opportunity to use the information provided to deliver generative answering; I'll show that in the demo later today. A chatbot is another experience we hear a lot about: some companies choose a chatbot over a case form as the way for end users in their support portal to open a case or ticket. And finally, within a CRM, GenAI can help support agents get to a resolution faster using the intelligence of an LLM. Now I'll go over a quick demo of how we've deployed this in two of those use cases: within our own SaaS-based product and within our support portal. Let me share my screen. Everyone should be seeing my screen now; I'm in the Coveo administration console. A key point: to get the most value out of generative answering, it's not enough to use an LLM to generate answers. You have to ground those answers in facts, in actual content. We deploy generative answering through search, or intelligent retrieval, so the answers are generated from real information that lives within our index. In this example, we're inside the Coveo console. I won't walk you through the whole console, but the idea is that if someone using it needs help with a specific question, we will deliver search results.
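To make the grounding idea concrete, here is a minimal sketch of retrieval-grounded answering in Python. It is not Coveo's implementation: the `Document` type, the term-overlap scoring in `retrieve`, and the prompt wording in `build_grounded_prompt` are illustrative assumptions, and the actual LLM call is omitted. What it shows is that the model only ever sees content retrieved from the index, and that no prompt is built at all when retrieval finds nothing.

```python
from dataclasses import dataclass
from typing import List, Optional

PUNCTUATION = ".,?!:;()"


@dataclass
class Document:
    doc_id: str
    title: str
    body: str


def tokenize(text: str) -> set:
    """Lowercased set of words with surrounding punctuation stripped (toy analyzer)."""
    words = (w.strip(PUNCTUATION).lower() for w in text.split())
    return {w for w in words if w}


def retrieve(query: str, index: List[Document], k: int = 3) -> List[Document]:
    """Return up to k documents sharing the most terms with the query."""
    query_terms = tokenize(query)
    scored = []
    for doc in index:
        overlap = len(query_terms & tokenize(doc.title + " " + doc.body))
        if overlap > 0:
            scored.append((overlap, doc))
    # Highest overlap first; the sort is stable, so ties keep index order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def build_grounded_prompt(query: str, sources: List[Document]) -> Optional[str]:
    """Build a prompt restricted to the retrieved sources, or None if there are none."""
    if not sources:
        # Nothing to ground on: decline rather than let the model guess.
        return None
    context = "\n\n".join(f"[{d.doc_id}] {d.title}\n{d.body}" for d in sources)
    return (
        "Answer using ONLY the sources below and cite their ids in brackets. "
        "If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {query}\nAnswer:"
    )


docs = [
    Document("kb1", "Reset a password", "Open Settings and click Reset password."),
    Document("kb2", "Billing FAQ", "Invoices are emailed monthly."),
]
hits = retrieve("How do I reset my password?", docs)
prompt = build_grounded_prompt("How do I reset my password?", hits)
```

Declining to answer when retrieval returns nothing is one design choice for limiting hallucinations; a production system would rank with a real relevance model and a score threshold rather than raw term overlap.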
But using those search results, those facts and real documents, as a source of truth, we also generate an answer that walks the user through the steps to reach the outcome they want and keep using the software without having to look for or request assistance. Sometimes users will need to go to a support portal to ask more complex questions and get additional help. In that scenario too, we want to enhance the information retrieval experience with generative answering. In this example, if I ask this question, I will of course get relevant search results with the information needed to solve the issue. But adding generative answering to summarize and group information from multiple documents or knowledge articles produces an enhanced, more accurate, more personalized answer, and it leads the user to the right resolution faster, instead of making them click through multiple documents and read knowledge articles or documentation pieces one by one. GenAI lets us group those together into a single answer that solves the entire issue and answers the user's question. With that, I'll pass it back to Angelo to share the significant, tangible impact this has had on our own support organization here at Coveo. Let me stop sharing. Back to you, Angelo.
[...] AI and GenAI to drive real results. What I'm about to do is share some of our own results, as Mathieu mentioned, which we feel demonstrate that the answer to this question is yes.
First, let's start with case submissions, or, to be precise, the number of case submissions per one thousand search sessions. We were very pleased with what we saw: a forty-six percent reduction in case submissions year over year. It's important to call out that other factors, whether process- or system-related, probably also contributed to the improvement. However, we did see a significant improvement once we integrated and deployed CRGA (Coveo Relevance Generative Answering) within our self-service journey. One factor we'd point to is that we added CRGA to our Case Assist form, which raised the likelihood of a case being deflected because the answers were validated against a trusted, authoritative knowledge base; Mathieu will look at that a little later. We're also a low-volume shop, so keep in mind that our results may reflect that as well. That being said, I'm sure the question that comes to mind is: why should we believe these results are possible, or take what you're saying as gospel? Well, I don't think you should, and I know I wouldn't. But we're happy to share, as proof points, real-life results from enterprise customers who are seeing significant improvements in their own self-service use cases. The first case study I'd like to share is Xero, which saw a twenty-one percent reduction in case submissions.
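Normalizing by search sessions matters because raw case counts rise and fall with traffic. A minimal sketch of the metric and the year-over-year comparison follows; the numbers are made up for illustration only and are not Coveo's or any customer's data.

```python
def cases_per_1000_sessions(case_submissions: int, search_sessions: int) -> float:
    """Case submissions normalized per 1,000 search sessions."""
    if search_sessions <= 0:
        raise ValueError("search_sessions must be positive")
    return 1000 * case_submissions / search_sessions


def relative_reduction(before: float, after: float) -> float:
    """Percentage drop from `before` to `after`; positive means fewer cases."""
    if before <= 0:
        raise ValueError("before must be positive")
    return 100 * (before - after) / before


# Hypothetical figures: more search sessions and fewer cases in the second year.
last_year = cases_per_1000_sessions(400, 8_000)    # 50.0
this_year = cases_per_1000_sessions(300, 10_000)   # 30.0
print(f"{relative_reduction(last_year, this_year):.1f}% fewer cases per 1,000 sessions")
```

Comparing the raw counts here (400 vs. 300) would suggest only a 25% drop; the normalized rate shows 40% because search traffic also grew.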
Again, Xero is considered a big player in its vertical, with high case volume. So, to offset what I said earlier, these results are possible at scale. Next, Xero saw a forty-four percent reduction in search sessions. And finally, they realized a thirty-one percent decrease in time spent on the search page, which indicates the output was helpful enough for users to find answers quickly instead of wasting time searching through reams of documents and links. Moving on to SAP Concur: they realized a thirty percent reduction in cases per one thousand search sessions and an annualized reduction in cost to serve of eight million dollars. As you can see, these improvements are quite significant. One thing that's important to emphasize, even with these results, is that they are very contingent on having solid knowledge management. John alluded at the beginning of the session to the investments being made in knowledge management, and we can't emphasize enough that getting these results is tied to having a knowledge framework in place and to the health of the content being served up. Needless to say, if you put in faulty input, you'll get faulty output. This is something we really wanted to call out.
In our case, when we included community posts in the content being accessed, we got less-than-desirable answers, simply because we had no control over what was shared in the community. An answer could be outdated, albeit once correct, and some answers were simply inaccurate. So it's important to really think about your content and the knowledge management behind it to make sure you get the most relevant and optimal outputs out of AI and GenAI. With that, I'll pass it over to Mathieu, who will do a short demo of our Case Assist form.
Awesome. Thanks, Angelo. Everyone should be seeing my screen again; I'm back in the support portal. By Case Assist, we mean our case deflection flow: we have a solution called Case Assist that helps create and classify cases, but also deflect them. We wanted to deploy there because, from the support portal we were in earlier, we often saw users skip search entirely, head straight to the support button, and try to open a case without even trying to search. And if they don't search, well, you can lead a horse to water, but you can't make it drink. So we saw an opportunity to deploy generative answering and large language models directly in the case submission form using our Case Assist technology. I have this prefilled to simplify the demo a little, but if you go to the case form and start to open a case, you'll go through a standard case submission flow: you write a title for your problem and a detailed description, select the impacted environment, and so on.
Once you've gone through that step, a large language model helps classify the case by suggesting the right classification. We do this to route the case to the right agent based on an understanding of the issue, but also to use this information to provide a more accurate answer. We're no longer generating an answer from a regular query input alone; we're generating it from much more detail: a subject, a description, and a categorization of the issue. That lets us generate a more accurate and detailed resolution for the issue at hand, essentially transforming the case creation flow into a case resolution flow. We've seen tremendous results from doing this. In this example, you can see a fairly complex answer to a fairly complex question, with code examples and configuration you can put in place to solve the issue. This has helped us plug the drain hole we had, where people would skip search entirely and head straight to the case form. Now we can provide them with a resolution as well, which has led to meaningful reductions in our case submissions. I'll pass it back to Angelo to share the results this has led to for our own support organization.
Thanks, Mathieu. For our case deflection rate, we saw quite a considerable improvement as well: twenty-nine percent. Again, this is based on customers who performed the full search, were presented with content and an answer, and didn't open a case. That's what we saw for our own case deflection.
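The Case Assist flow described here can be sketched roughly as follows. This is a hypothetical illustration, not Coveo's Case Assist API: a keyword lookup (`CATEGORY_KEYWORDS`, `classify`) stands in for the LLM classifier, `build_case_query` combines subject, description, and predicted category into one richer retrieval query, and `deflection_rate` assumes the denominator is all case-form sessions, which the talk does not specify.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Set

# Hypothetical keyword rules standing in for the LLM classifier described in the talk.
CATEGORY_KEYWORDS: Dict[str, Set[str]] = {
    "Indexing": {"crawler", "source", "index", "indexing"},
    "Search UI": {"facet", "results", "page", "ui"},
    "Security": {"permissions", "sso", "login", "access"},
}


def classify(subject: str, description: str) -> Optional[str]:
    """Pick the category with the most keyword hits; None if nothing matches."""
    words = set((subject + " " + description).lower().split())
    best, best_hits = None, 0
    for category, keywords in CATEGORY_KEYWORDS.items():
        hits = len(words & keywords)
        if hits > best_hits:
            best, best_hits = category, hits
    return best


def build_case_query(subject: str, description: str, category: Optional[str]) -> str:
    """Combine every case-form field into one richer query for retrieval."""
    parts = [subject.strip(), description.strip()]
    if category:
        parts.append(f"category:{category}")
    return " ".join(p for p in parts if p)


@dataclass
class CaseFormSession:
    saw_answer: bool   # the form displayed content or a generated answer
    opened_case: bool  # the user still submitted the case


def deflection_rate(sessions: List[CaseFormSession]) -> float:
    """Percent of sessions that saw an answer and did not open a case."""
    if not sessions:
        return 0.0
    deflected = sum(1 for s in sessions if s.saw_answer and not s.opened_case)
    return 100 * deflected / len(sessions)


subject = "Crawler not indexing my source"
description = "The index shows zero items"
category = classify(subject, description)  # "Indexing"
query = build_case_query(subject, description, category)
```

Appending the category as a query token is only one option; a retrieval engine would more typically apply it as a metadata filter.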
But, again, we want to share what we've helped our customers drive in terms of their own results. So let's look at the case study with Forcepoint. On the Forcepoint case form, they realized a sixty percent improvement in case deflection rate. One thing to call out here is that AI and GenAI let them get an extra improvement that would be very difficult to obtain otherwise, even for a pacesetter already performing at that level. When they initially implemented AI in their self-service workflow, they saw a two hundred percent improvement in case deflection within the first few months. They were then able to attain an additional sixty percent improvement by deploying CRGA within their flow. This surfaces information that is more easily consumed and digested by the end user, because it provides not only the content but also next steps and recommendations on how to proceed accurately. I think this is the key differentiator when we talk about GenAI: it lets you get that little extra from outputs that may already be delivering solid, strong results. If you want to hear more about the Forcepoint case study, our EVP of global customer experience, Patrick Martin, will be presenting with Forcepoint's senior manager of digital customer engagement, Cora LaRose, at the upcoming TSIA World Envision on October twenty-second. I invite you to register and attend that session to learn more.
And with that, I'll pass it over to Mathieu.
Thanks. Now, the next question may be: how can you realize this value in your own organization, as Forcepoint and some of our other clients have done, and as we've achieved for ourselves? Two things essentially need to be done. First, assess the current state of your knowledge as well as your entire self-service journey. What use cases or touch points do you have in place today where you could deliver generative answers to your clients? Which of those points represent the highest friction and could deliver the most significant ROI? The second thing, which is very important and was alluded to earlier, is content health. Which content is high quality, accurate, and readily available to use as grounding for generated answers, and to combine into resolutions for your clients? Once that's figured out, the next step is to plan and deploy. So what are your options today? There are options like Coveo, which is a turnkey solution: we provide the indexing of the content, the retrieval, the grounding, the prompt, and the answer generation end to end. But you can also combine a mix of different solutions to do it yourself. If you do it yourself, you'll need a unified index that brings all your content together so you can retrieve it regardless of which source it comes from, because the truth might live in multiple repositories across your organization. You'll then need a retrieval engine to accurately retrieve that content based on users' questions.
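For the do-it-yourself route, the unified index is what lets one query reach content from several repositories. A minimal in-memory sketch, assuming three hypothetical sources (`kb`, `docs`, `community`) that each name their fields differently; `normalize` maps them onto a shared schema and `UnifiedIndex` keeps a simple inverted index. A real deployment would use a search engine rather than Python dictionaries.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

# Hypothetical field mappings: each repository names its fields differently.
FIELD_MAPPINGS: Dict[str, Tuple[str, str, str]] = {
    "kb": ("article_id", "title", "content"),
    "docs": ("path", "heading", "text"),
    "community": ("post_id", "subject", "body"),
}


def normalize(source: str, record: dict) -> dict:
    """Map a source-specific record onto one shared schema."""
    id_field, title_field, body_field = FIELD_MAPPINGS[source]
    return {
        "uid": f"{source}:{record[id_field]}",  # prefix keeps ids unique across sources
        "source": source,
        "title": record[title_field],
        "body": record[body_field],
    }


class UnifiedIndex:
    def __init__(self) -> None:
        self.docs: Dict[str, dict] = {}
        self.postings: Dict[str, Set[str]] = defaultdict(set)  # term -> uids

    def add(self, source: str, record: dict) -> None:
        doc = normalize(source, record)
        self.docs[doc["uid"]] = doc
        for term in (doc["title"] + " " + doc["body"]).lower().split():
            self.postings[term].add(doc["uid"])

    def search(self, query: str) -> List[str]:
        """Uids matching any query term, most matched terms first, ties by uid."""
        counts: Dict[str, int] = defaultdict(int)
        for term in set(query.lower().split()):
            for uid in self.postings.get(term, ()):
                counts[uid] += 1
        return sorted(counts, key=lambda uid: (-counts[uid], uid))


index = UnifiedIndex()
index.add("kb", {"article_id": "42", "title": "Reset password", "content": "Use the reset link"})
index.add("docs", {"path": "admin/sso", "heading": "SSO setup", "text": "Configure the password policy"})
results = index.search("reset password")
```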
And then, of course, you'll need an LLM, a large language model, to take that content and generate an answer. Coveo can also provide some of those pieces separately. If you're looking for just a unified index and a retrieval engine but would like to use your own LLM, that's also something we can help with. We've seen clients with more complex use cases that require their own fine-tuned LLM, but they still need a retrieval engine. We can help with that, and additionally with user behavior understanding and behavioral AI models to further improve their retrieval. Taken together, these are the pieces you need to successfully and securely deploy generative answering within your self-service journey, to improve self-service success and case deflection and, of course, to deliver significant cost savings for your support organization. Before we head to questions, I want to thank TSIA again for having us today. It's always a pleasure to speak to this audience, and thank you as well, John, for being with us. I think we may have some questions in the chat, so I'll pass it over to Vanessa.
Great. Thank you so much, Mathieu. We have quite a few questions in the queue already, but I just want to remind everybody to submit your questions in the Ask a Question box in the upper left-hand corner, and we'll get through as many as we can. Even if we aren't able to answer your question live, we will make sure to follow up with you. Our first question comes from Devin: how does GenAI handle complex or technical customer queries?
That's a great one. There are two sides to this: one is how the content is organized in the index, and the other is how that content is then retrieved.
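The two sides named in this answer, organizing content in the index and narrowing retrieval, can be illustrated with a filter-then-rank sketch. The `product` and `category` fields are hypothetical metadata assumed to be assigned when content is classified at indexing time, and term overlap stands in for a real relevance model. Filtering on metadata before scoring keeps a similar-looking article for another product out of the results.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class IndexedDoc:
    doc_id: str
    product: str   # hypothetical metadata assigned when content is classified at indexing time
    category: str
    text: str


def retrieve_for_user(
    query: str,
    docs: List[IndexedDoc],
    product: str,
    category: Optional[str] = None,
    k: int = 3,
) -> List[str]:
    """Filter on product (and optionally category) first, then rank by term overlap."""
    terms = set(query.lower().split())
    candidates = [
        d for d in docs
        if d.product == product and (category is None or d.category == category)
    ]
    scored = []
    for d in candidates:
        overlap = len(terms & set(d.text.lower().split()))
        if overlap:
            scored.append((overlap, d.doc_id))
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for _, doc_id in scored[:k]]


docs = [
    IndexedDoc("a1", "ProductA", "install", "install the agent on linux"),
    IndexedDoc("b1", "ProductB", "install", "install the agent on linux servers"),
]
print(retrieve_for_user("install agent linux", docs, product="ProductA"))
```

Without the product filter, both articles would score equally on this query, which is the wrong-product problem the answer goes on to describe.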
The thing with complex questions is that they can overlap many different topics. One thing we do is help classify the content properly in the index and understand the user's intent at query time, so we can avoid noise, such as issues for different products that are completely irrelevant to the issue at hand. That's the first thing. The second is the retrieval itself. By understanding the user and the topic or intent, and by already having the content classified and properly organized in the index, we can do a more accurate and narrow retrieval. That way, we don't provide an answer that doesn't apply to the user because they're using a different product, for example. So those are the two things, and it requires work both on how the content in the index is organized and structured and on how we do retrieval, so we get to the actual solution instead of doing a broad retrieval and potentially finding content for a different product or a slightly different category that seems to answer the same question but doesn't give the user the correct answer. That's how we've been tackling this here at Coveo.
If I might just top up on that: that is the way to ground the answers to more complex inquiries and keep them as relevant and accurate as possible. But, as I mentioned earlier, we have to emphasize that content health is imperative. Let me give you an example.
One of our customers came to us and said, hey, your answer is giving us results that include our competitors. So we investigated to understand why that was happening. Surprisingly, it had nothing to do with the search itself; it produced exactly the output it should have. But the documents the customer had included in their database contained the names of competitors. So the model pulled out the information based on the inquiry and therefore included the names of those competitors. It wasn't a matter of the search or the generated answer producing something inaccurate. It was truly because the content it queried contained that information, and that information was relevant to the inquiry.

Okay. Along those same lines, we have a question from Sanjeet, who asks: how do you ensure generated answer accuracy when content is not up to date?

It's a great question. Because we do retrieval and grounding based on real content, to avoid hallucinations and provide accurate answers, there is a dependency on the content. How we're helping our clients tackle this at Coveo is by providing tooling. First, tooling to understand which piece of information from which document was used to produce an answer. Second, visibility into the feedback received from users, because we allow users to give feedback on the answers presented to them. And finally, we have tooling for edge cases, like the example Angelo was just giving, where we can allow clients to temporarily block the model from answering a specific query until the root cause in the content is resolved. So it's a fine balance: tooling to troubleshoot and to stop the model from answering in specific scenarios.
You use that same tooling to understand where the issue comes from. But, of course, there is also a reliance on knowledge management: ensuring that inaccurate content is removed, updated, or simply rewritten to reflect today's reality.

That's a great one. Next, Rita asks: can this be integrated into chatbots? And if so, how easy is the process?

Very interesting. There are two ways we see to tackle chatbots. Our clients often talk about conversational AI and the idea of asking a question and having a conversation with a model. With our turnkey solution, we're looking at adding things like follow-up questions, or clarifying questions that the model comes back and asks users, to gradually guide them toward the desired self-service outcome, but in a controlled and limited fashion: essentially a small, narrow, but very efficient swim lane. We've also recently developed an API that allows clients to use the passages retrieved from our index, so, again, the source of truth, the grounding. Instead of full documents, it retrieves the specific passages that contain the answer and provides them to clients to use with their own LLM. Whether clients use our out-of-the-box generated answering turnkey solution or their own LLM with retrieved content, what we see today is that the more successful chatbot implementations really tap into large language models rather than trying to manually build a flow that accounts for every possibility. That's the risk we've seen with chatbots: because there are so many possibilities and outcomes, and the flow can be so open, clients can lead themselves into a dead end.
Our turnkey solution was meant to avoid those dead ends and lead users down the right path toward the right outcome. But if some of our clients would like a more open-ended chatbot, with an LLM that can hold more of a natural discussion rather than a guided flow, then it's important to still use that retrieval element in the deployment. Either way, chatbots usually require some level of customization. We're not a chatbot provider ourselves; we're there to provide the content and material the chatbot needs to give an appropriate answer, whether that's through our turnkey solution, which produces prebuilt answers with grounding and security, or through your own LLM using the passages we retrieve from our index to ground its answers in a source of truth. I hope that answers the question. Chatbots tend to always require some customization, and that's not what we specialize in. We specialize in grounding and retrieval to power accurate answers that can then use the chatbot as a medium for delivery.

Okay. Corey says: you mentioned hallucinations. How do you avoid these, and prompt hacking as well?

It's a very interesting one. You hear the term prompt engineering a lot now. For our turnkey solution, we have PhDs in natural language processing, or ML scientists as we call them, doing extensive research to engineer a prompt that avoids those situations. The prompt also needs to strictly guide the LLM to use the information retrieved from your index, again the source of truth, the grounding, to generate the answer. We like to say that we're almost threatening the LLM in the way we've built our prompt.
Our prompt is proprietary to us, because we've done a lot of research to build a prompt that is efficient, secure, and avoids hallucination. But even so, it's impossible to avoid one hundred percent of hallucinations. An LLM can still get confused by different pieces of information and may accidentally combine them. But with a tough prompt like the one we built, where, again, we almost threaten the model, telling it "do not do this, do not do that" and guiding it strictly on how to behave, plus accurate information and proper retrieval, it's usually possible to avoid hallucinations altogether, or at least in the vast majority of situations where they might occur. In fact, hallucination is not something our clients have been reporting to us as feedback, because we've built our prompt to be so strong. The last aspect I would add is that we've built our prompt to stay secure and avoid hallucination: it will prefer not to answer rather than provide an answer that could be dangerous, inaccurate, or contain hallucinations. So we've really built that security into our turnkey solution. And this is really the key nowadays to avoiding hallucinations: strict prompt engineering combined with grounding through retrieval.

Okay. Well, unfortunately, we have run out of time for today. As I mentioned before, I know there are a couple of questions we weren't able to answer live, but don't worry: we haven't forgotten about you, and we will follow up. Since we have come to the end of today's webinar, a few more reminders before we sign off.
There will be an exit survey at the end, and we'd appreciate it if you could take a few minutes to share your feedback on the content and your experience by filling out that brief survey. A link to the recorded version of today's webinar will also be sent to you via email. Thank you again to our presenters, John, Angelo, and Matthew, for delivering an outstanding session. And thank you to everyone for taking time out of your busy schedules to join us for today's webinar, Experience GenAI in Action and Discover Its ROI Potential, brought to you by TSIA and sponsored by Coveo. We look forward to seeing you at our next webinar. Take care, everyone.

TSIA Webinar