A positive experience with generative AI can feel like magic, but it’s not. To create content similar to what humans produce, including text, images, and even code, generative AI relies on a few foundational concepts for processing information:

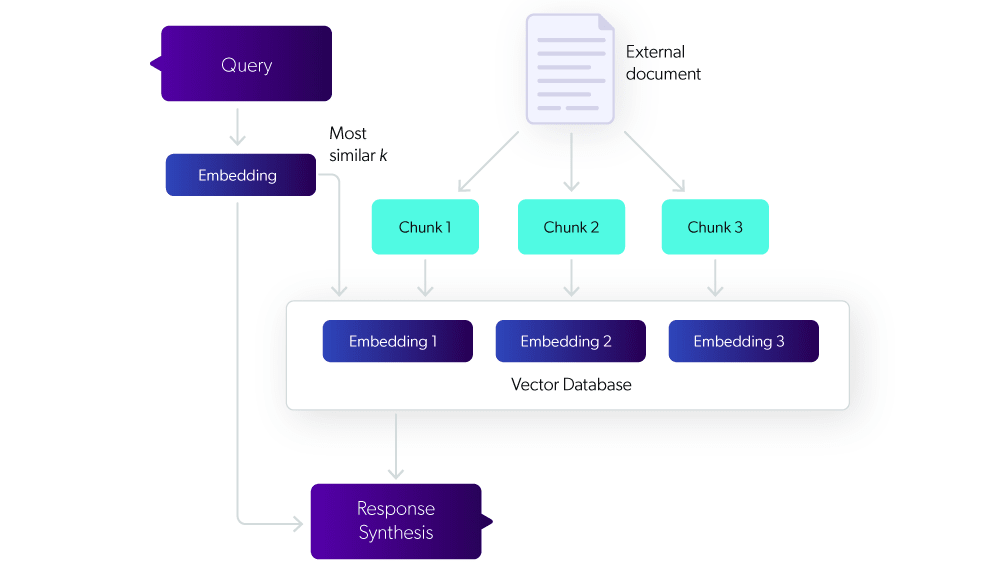

Retrieval-Augmented Generation (RAG)

Whether or not you had “AI hallucinations” on your bingo card for the 2020s, here we are. RAG helps generative AI avoid hallucinatory and otherwise unreliable output by grounding the responses of the large language model (LLM) in trustworthy sources that you provide.

A RAG system goes beyond traditional information retrieval (typically search engines and databases) by dynamically combining the broad context of large language models with real-time access to external knowledge sources. There are several principal types of RAG, including Naive RAG, Advanced RAG, and Modular RAG. Agentic RAG has come into focus as agentic AI applications accelerate; it strengthens the data analysis, decision making, and multi-step reasoning carried out by autonomous agents.

Relevant reading: Putting the ‘R’ in RAG: How Advanced Data Retrieval Turns GenAI Into Enterprise Ready Experiences

Semantic Encoding

Generative AI systems have an endless appetite for information. Semantic encoding translates human language into structured numerical representations (vector embeddings) that help AI understand relationships and underlying concepts within the data. Learn how Coveo uses semantic encoding.

Chunking Information

For RAG or Coveo’s Semantic Encoder to work at its best, start by chunking the information you want it to use. Chunking involves breaking complex information into smaller pieces, or “chunks,” to improve both processing and understanding. In the context of AI, chunking can enhance your system’s ability to analyze, retrieve, and generate content.

There’s a specific kind of chunking for RAG applications, called RAG chunking, and there are many strategies for optimizing those chunks (semantic chunking and document-based chunking, for example).

We’ll focus on RAG chunking in this blog post, including best practices, advanced techniques, and how this method benefits document analysis and customer query response.

Why Chunk Information for AI?

Breaking large blocks of important information into smaller chunks makes it easier for AI to generate human-like content. Chunking also improves the accuracy and relevance of the information retrieved.

The concept of chunking is deeply tied to cognitive science and human memory. Short-term memory, or working memory, is limited; breaking information into manageable chunks improves recall, reduces cognitive load, and helps build mental models. It’s not surprising that AI systems also respond to chunking: AI is, in many ways, modeled after the very minds that created it.

Breaking a large document into discrete, self-contained units, for example, allows AI to match queries with relevant information more easily and accurately, leading to faster and more precise responses.

In the real world, chunked content dramatically enhances experiences across generative AI channels, such as:

- Virtual chat assistants

- Case submission forms

- Self-service knowledge base

- In-Product Experience (IPX)

- Recommended responses for agents

Chunking can also reduce the computation required for each query, because the model processes a few relevant passages instead of entire documents. It also helps maximize the navigability, accessibility, and utility of knowledge content.

Principal RAG Chunking Methods

The most common form is fixed-length chunking. You set a fixed chunk size and the amount of overlap between consecutive chunks. Chunk size is typically measured in characters, tokens, or words.
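Here is a minimal, word-based sketch in Python. The function name `fixed_length_chunks` and its `chunk_size` and `overlap` parameters are hypothetical choices made for this example. Production pipelines usually count tokens with the LLM’s own tokenizer rather than splitting on whitespace.

```python
def fixed_length_chunks(text: str, chunk_size: int = 200, overlap: int = 20) -> list[str]:
    """Split text into chunks of `chunk_size` words, where consecutive
    chunks share `overlap` words."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")

    words = text.split()
    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks


text = " ".join(f"w{i}" for i in range(12))
print(fixed_length_chunks(text, chunk_size=5, overlap=2))
# ['w0 w1 w2 w3 w4', 'w3 w4 w5 w6 w7', 'w6 w7 w8 w9 w10', 'w9 w10 w11']
```

With `overlap=2`, the last two words of each chunk reappear at the start of the next one. Context that straddles a boundary is therefore less likely to be lost.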

Recursive chunking divides input into smaller chunks in a hierarchical, iterative manner, using an ordered set of separators (for example, paragraph breaks, then line breaks, then spaces). If splitting on one separator leaves pieces larger than the desired size, the method recursively re-splits those pieces with the next, finer separator until every chunk fits.
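The recursive idea can be sketched as follows. This is a simplified, hypothetical implementation, not any particular library’s. It assumes the separator order paragraph break, line break, space, and it measures size in characters. Small neighboring pieces are merged back together up to `max_chars`. Only pieces that are still too large are re-split with the next separator.

```python
def recursive_chunks(text: str, max_chars: int = 500,
                     separators: tuple[str, ...] = ("\n\n", "\n", " ")) -> list[str]:
    """Split text into chunks of at most `max_chars` characters, trying
    coarse separators first and falling back to finer ones.
    (Separators at chunk boundaries are dropped.)"""
    text = text.strip()
    if len(text) <= max_chars:
        return [text] if text else []
    if not separators:
        # No separators left: hard-cut every `max_chars` characters.
        return [text[i:i + max_chars] for i in range(0, len(text), max_chars)]

    sep, finer = separators[0], separators[1:]
    chunks: list[str] = []
    current = ""
    for piece in text.split(sep):
        candidate = f"{current}{sep}{piece}" if current else piece
        if len(candidate) <= max_chars:
            current = candidate  # keep merging small pieces into one chunk
            continue
        if current.strip():
            chunks.append(current.strip())  # flush the chunk built so far
        if len(piece) > max_chars:
            # Still too large on its own: recurse with the next, finer separator.
            chunks.extend(recursive_chunks(piece, max_chars, finer))
            current = ""
        else:
            current = piece
    if current.strip():
        chunks.append(current.strip())
    return chunks


doc = "Para one is short.\n\nPara two is a bit longer than the limit allows here."
print(recursive_chunks(doc, max_chars=30))
# ['Para one is short.', 'Para two is a bit longer than', 'the limit allows here.']
```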

In sentence-based chunking, documents are split at natural sentence boundaries to preserve linguistic coherence. Approaches include grouping a fixed number of sentences per chunk, clustering related sentences, and detecting chunk boundaries with natural language processing (NLP) libraries.
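A fixed-sentence-count variant might look like this. The regular expression is a deliberately naive boundary rule that stands in for a proper NLP library. It will mis-split abbreviations such as “e.g.”. `sentence_chunks` is a hypothetical name.

```python
import re

# Naive boundary rule: a sentence ends at ., ! or ? followed by whitespace.
SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


def sentence_chunks(text: str, sentences_per_chunk: int = 3) -> list[str]:
    """Group consecutive sentences into chunks of `sentences_per_chunk`."""
    sentences = [s for s in SENTENCE_END.split(text.strip()) if s]
    return [
        " ".join(sentences[i:i + sentences_per_chunk])
        for i in range(0, len(sentences), sentences_per_chunk)
    ]


text = "Rates rose. Stocks fell! Why? Analysts disagree. More data is due."
print(sentence_chunks(text, sentences_per_chunk=2))
# ['Rates rose. Stocks fell!', 'Why? Analysts disagree.', 'More data is due.']
```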

Semantic chunking splits text based on meaning rather than fixed sizes. It analyzes shifts in the text’s semantic content, such as a drop in similarity between adjacent sentences, with the aim of keeping each chunk coherent and focused on a single topic.
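The sketch below shows the core of semantic chunking: start a new chunk wherever the similarity between adjacent sentences drops below a threshold. To stay self-contained, it uses a toy bag-of-words vector in place of a real embedding model. `embed`, `cosine`, and the `threshold` value are therefore illustrative assumptions only. A production system would compare dense embeddings from a trained model.

```python
import math
import re
from collections import Counter


def embed(sentence: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z']+", sentence.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def semantic_chunks(sentences: list[str], threshold: float = 0.2) -> list[str]:
    """Start a new chunk wherever adjacent sentences fall below `threshold` similarity."""
    if not sentences:
        return []
    groups = [[sentences[0]]]
    for prev, curr in zip(sentences, sentences[1:]):
        if cosine(embed(prev), embed(curr)) < threshold:
            groups.append([curr])      # topic shift: open a new chunk
        else:
            groups[-1].append(curr)    # same topic: extend the current chunk
    return [" ".join(g) for g in groups]


sentences = [
    "Oil prices rose sharply this quarter.",
    "Higher oil prices hurt energy stocks.",
    "Central banks raised interest rates.",
    "Interest rates affect mortgage costs.",
]
for chunk in semantic_chunks(sentences):
    print(chunk)
# Oil prices rose sharply this quarter. Higher oil prices hurt energy stocks.
# Central banks raised interest rates. Interest rates affect mortgage costs.
```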

Finally, adaptive chunking dynamically adjusts its approach based on the content and context of the query, using different strategies for different parts of the document depending on complexity and information density.

How Chunking Affects RAG Pipeline Performance

The impact of chunking on RAG pipeline performance is multifaceted:

- Retrieval accuracy increases when related information is kept together and noise is effectively reduced

- Context preservation improves, provided you choose a chunking approach suited to your documents

- Processing efficiency improves because the system processes smaller chunks faster and uses memory more efficiently

- Cost and token management eases because the system only needs to pass the most relevant chunks to the LLM in response to a query
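To make the token-management point concrete, here is a hypothetical context builder. It ranks chunks against a query and passes only the best matches to the LLM, stopping at a word budget. The keyword-overlap score and the word count used as a token proxy are simplifying assumptions. Real systems typically rank with embeddings or a search engine and count with the model’s tokenizer.

```python
import re


def terms(text: str) -> set[str]:
    """Lowercased word set used for a toy keyword-overlap relevance score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def build_context(query: str, chunks: list[str], max_words: int = 300) -> list[str]:
    """Return the most relevant chunks that fit within a rough word budget."""
    q = terms(query)
    # Rank by number of shared terms; sorted() is stable, so ties keep input order.
    ranked = sorted(chunks, key=lambda c: len(q & terms(c)), reverse=True)
    selected, used = [], 0
    for chunk in ranked:
        if not q & terms(chunk):
            break  # remaining chunks share no terms with the query
        size = len(chunk.split())  # word count as a crude proxy for tokens
        if used + size > max_words:
            break
        selected.append(chunk)
        used += size
    return selected


chunks = [
    "Engines: fuel efficiency, power output, environmental compliance.",
    "Brakes: safety features, reliability, compatibility.",
    "Cabin accessories: driver comfort and ergonomics.",
]
print(build_context("Which engine has the best fuel efficiency?", chunks))
# ['Engines: fuel efficiency, power output, environmental compliance.']
```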

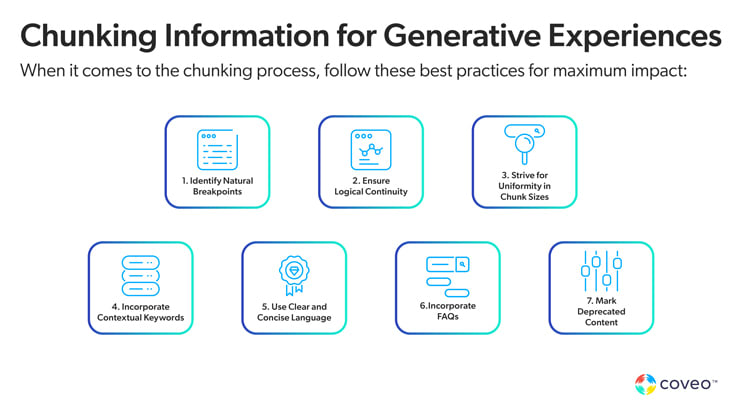

Best Practices for Chunking Information

When it comes to your own chunking technique, follow these best practices for maximum impact:

1. Identify Natural Breakpoints

Look for logical transitions where topics shift or new subtopics are introduced. This section on identifying natural breakpoints, for example, would make a suitable chunk that AI could use to generate content on this subtopic.
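One simple way to chunk at natural breakpoints is to split wherever a heading begins. This sketch assumes Markdown-style `#` headings, and `split_on_headings` is a hypothetical helper. Documents in other formats would need their own boundary rule.

```python
import re

# Assumes Markdown-style headings such as "## Energy Sector Volatility".
HEADING = re.compile(r"^#{1,6}\s+(.+?)\s*$")


def split_on_headings(markdown: str) -> list[dict]:
    """Return one {"heading", "text"} section per heading-delimited block."""
    sections: list[dict] = []
    heading, body = None, []

    def flush() -> None:
        text = "\n".join(body).strip()
        if heading is not None or text:
            sections.append({"heading": heading, "text": text})

    for line in markdown.splitlines():
        match = HEADING.match(line)
        if match:
            flush()                        # close the previous section
            heading, body = match.group(1), []
        else:
            body.append(line)
    flush()                                # close the final section
    return sections


doc = "## Technology Sector Growth\nTech stocks outperformed.\n\n## Energy Sector Volatility\nOil prices swung widely."
for section in split_on_headings(doc):
    print(section)
```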

2. Ensure Logical Continuity

Here we must borrow from the fundamentals of good document composition. Just as each part of a knowledge base article should be independently valuable, yet maintain a connection to the overarching theme, the same should apply to how you chunk that article.

This strikes a balance between autonomy and coherence, which makes the content easier for AI to work with.

3. Choose the Right Chunk Size for RAG

There is no “one size fits all” chunk size for RAG. The right choice depends on the structure of the documents used to build the knowledge base. Larger chunks retain more context but can dilute relevance and consume more of the LLM’s context window. Smaller chunks are more precise but may lose surrounding context. The ideal size also depends on content type, query complexity, and the token limits of your LLM.

4. Strive for Uniformity in Chunk Sizes

It’s easy enough to throw anything and everything at AI and let the system handle the rest. But it’s much better to create relatively uniform and manageable units to help manage the cognitive load on your AI systems, which leads to easier processing and comprehension. (Notice how every paragraph in this section is similarly sized and relatively symmetrical…)
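A common way to even out chunk sizes is to merge undersized chunks into a neighbor. The sketch below is a hypothetical post-processing step that measures size in words. `min_words` is an assumed threshold you would tune for your own content.

```python
def merge_small_chunks(chunks: list[str], min_words: int = 50) -> list[str]:
    """Merge chunks shorter than `min_words` into a neighbor to even out sizes."""
    merged: list[str] = []
    for chunk in chunks:
        if merged and len(merged[-1].split()) < min_words:
            merged[-1] = f"{merged[-1]} {chunk}"  # previous chunk too small: absorb this one
        else:
            merged.append(chunk)
    # A short trailing chunk is folded into the one before it.
    if len(merged) > 1 and len(merged[-1].split()) < min_words:
        tail = merged.pop()
        merged[-1] = f"{merged[-1]} {tail}"
    return merged


print(merge_small_chunks(["a b", "c d e", "f g h i j", "k"], min_words=4))
# ['a b c d e', 'f g h i j k']
```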

5. Incorporate Contextual Keywords

You can further enhance AI’s content understanding and categorization by embedding relevant keywords within each chunk. Contextual keywords (think “current home interest rate” instead of “interest rate,” for example) help AI models understand relationships between chunks and disambiguate words with multiple meanings.
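One way to apply this when building an index is to prepend a short context header to each chunk before it is embedded. The header holds the document title, the section heading, and optional keywords. The header format and the `add_context` helper are hypothetical and shown only as a sketch.

```python
def add_context(chunk: str, doc_title: str, section: str,
                keywords: tuple[str, ...] = ()) -> str:
    """Prefix a chunk with its document title, section, and optional keywords."""
    header = f"{doc_title} > {section}"
    if keywords:
        header += f" | Keywords: {', '.join(keywords)}"
    return f"{header}\n{chunk}"


print(add_context("Rates vary by loan term.", "Mortgage FAQ",
                  "Current home interest rate", ("mortgage", "fixed rate")))
# Mortgage FAQ > Current home interest rate | Keywords: mortgage, fixed rate
# Rates vary by loan term.
```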

6. Use Clear and Concise Language

If you’ve worked with generative AI at all, you know that things start to fall apart when you use jargon, idioms, colloquial expressions, and even slang. Simplify the language you use to improve AI’s ability to parse and comprehend content.

7. Incorporate FAQs

FAQs are ideal content chunks. They’re readily retrievable by AI and often mirror the most common ways that people ask questions. And aren’t these the very questions that you’d like AI to automate, instead of asking your agents to answer the same ten questions all day, every day?

8. Mark Deprecated Content

If you feed AI obsolete information, it may very well use it. Indicate outdated content explicitly to maintain a current and reliable knowledge base.
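If chunks carry metadata, deprecated content can be filtered out before indexing. The `Chunk` fields below (`deprecated` and `expires`) are hypothetical. Use whatever flags your knowledge base actually records.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Chunk:
    text: str
    deprecated: bool = False          # set explicitly when content is retired
    expires: Optional[date] = None    # optional date after which content is stale


def indexable(chunks: list[Chunk], today: date) -> list[Chunk]:
    """Keep only chunks that are neither flagged deprecated nor past expiry."""
    return [
        c for c in chunks
        if not c.deprecated and (c.expires is None or c.expires > today)
    ]


chunks = [
    Chunk("Current return policy"),
    Chunk("2019 return policy", deprecated=True),
    Chunk("Holiday promotion", expires=date(2023, 12, 31)),
]
print([c.text for c in indexable(chunks, today=date(2024, 6, 1))])
# ['Current return policy']
```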

How to Use the Chunking Technique

Because chunking happens at the content level, it requires a different approach to how you build the content itself. To illustrate content chunking in practice, consider the following before-and-after scenarios.

Scenario 1: Financial Market Trends 2024

This mock-up illustrates how content can be transformed from a dense, linear narrative into a structured, easily navigable document. Breaking the report into distinct sections with clear headings and bullet points lets readers quickly find the information most relevant to them, enhancing both comprehension and engagement.

Before:

The financial market in 2024 has shown a mixed set of trends, with technology stocks leading the charge in terms of growth, while traditional manufacturing sectors have seen a more modest increase. Investors are keen on understanding the impact of recent technological innovations, especially in artificial intelligence and machine learning, on stock performance. Additionally, global economic factors, including changes in trade policies and interest rate adjustments by central banks, have played a significant role in shaping market dynamics. The energy sector, in particular, has experienced volatility due to fluctuating oil prices and the increasing adoption of renewable energy sources.

After:

Introduction to 2024 Financial Market Trends

The financial market in 2024 presents a diverse landscape of opportunities and challenges, influenced by technological advancements, global economic policies, and a shift towards sustainability.

Technology Sector Growth

- Highlight: Technology stocks have seen substantial growth, outperforming traditional sectors.

- Key Drivers: Innovations in AI and machine learning are pivotal, attracting investor interest.

Global Economic Influences

- Trade Policies: Changes in international trade policies have had a nuanced impact on market dynamics.

- Interest Rates: Central bank decisions on interest rates continue to shape investment strategies.

Energy Sector Volatility

- Oil Price Fluctuations: The energy market has been unpredictable, with oil prices influencing sector volatility.

- Renewable Energy Adoption: There’s a clear trend towards renewable energy, affecting traditional energy investments.

Scenario 2: Optimizing Pharmaceutical Supply Chains

Simply restructuring the content turns this guide to pharmaceutical supply chains into a comprehensive yet easily navigable resource for industry professionals. Each section succinctly addresses a key aspect of supply chain optimization, so readers can quickly understand the strategies and apply them to their own operations. The result is improved efficiency, compliance, and patient satisfaction.

Before:

In the highly regulated and competitive pharmaceutical industry, optimizing supply chain operations is crucial for ensuring the timely delivery of medications, maintaining product integrity, and minimizing costs. The complexity of pharmaceutical supply chains, involving strict regulatory compliance, temperature-sensitive products, and a global network of suppliers and distributors, poses significant challenges. Effective management strategies include the adoption of advanced tracking and monitoring technologies to ensure product quality, strategic partnerships with reliable suppliers to mitigate risks, and the implementation of lean inventory practices to reduce excess stock and associated costs.

After:

Introduction to Supply Chain Optimization

Overview of the importance of supply chain optimization in the pharmaceutical industry, highlighting the balance between efficiency, compliance, and innovation.

Advanced Tracking Technologies

- Purpose: Ensure product integrity and compliance through real-time monitoring.

- Implementation: Invest in technologies for tracking product temperature, location, and status throughout the supply chain.

Strategic Supplier Partnerships

- Objective: Mitigate supply chain risks and ensure reliability.

- Approach: Cultivate long-term relationships with suppliers who demonstrate compliance, quality, and dependability.

Lean Inventory Practices

- Goal: Minimize costs associated with excess inventory.

- Strategy: Implement just-in-time inventory management and streamline stock levels based on demand forecasting.

Conclusion: Achieving Operational Excellence

Summarize the strategies for optimizing pharmaceutical supply chains, emphasizing the importance of technology, partnerships, lean practices, and adaptability in maintaining high standards of efficiency and compliance.

Scenario 3: Guide to Selecting Truck Parts for B2B Customers

This reformatted guide transforms a dense overview into a structured, easy-to-navigate resource tailored for B2B customers in the truck parts industry. By segmenting the content into focused sections, it allows readers to quickly access the information relevant to their specific needs, enhancing their decision-making process and ensuring they find the most suitable parts for their operations.

Before:

For businesses in the B2B sector dealing with truck parts, understanding the comprehensive landscape of available components and their specifications is crucial. With a vast array of parts ranging from engines, transmissions, and brakes to electrical systems and cabin accessories, making informed decisions can be daunting. Each component category comes with its own set of considerations, such as compatibility with different truck models, durability, warranty periods, and price points. For instance, selecting the right engine involves considering fuel efficiency, power output, and environmental compliance, whereas choosing cabin accessories may focus more on driver comfort and ergonomics. This guide aims to assist in navigating this complex selection process, ensuring that businesses can secure the most suitable parts for their needs while optimizing for cost-effectiveness and operational efficiency.

After:

Guide to Selecting Truck Parts for B2B Customers

Introduction to Truck Parts Selection

This guide aims to simplify the truck parts selection process for B2B customers, covering key component categories and considerations.

Engine Selection

- Considerations: Fuel efficiency, power output, environmental compliance.

- Tips: Evaluate based on operational needs and environmental goals.

Transmission and Brakes

- Transmission: Focus on durability, ease of maintenance, and compatibility.

- Brakes: Prioritize safety features, reliability, and compatibility with your truck models.

Cabin Accessories

- Focus Areas: Driver comfort, ergonomics, and in-cabin technology.

- Sustainability: Consider eco-friendly options that enhance driver experience without compromising the environment.

Conclusion: Optimizing Selection

- Research and Comparison: Emphasize the importance of thorough research and comparison to find the best fit.

- Cost-Effectiveness: Focus on optimizing for both initial costs and long-term operational efficiency.

Generative AI Can Do a Lot, But It Can’t Do It All

Let’s zoom out for a second, because chunking content is a very specific subset of strategies within the broader realm of generative experiences. Yet chunking information is more than a technical trick. It’s fundamental for preparing data in a way that generative AI can use effectively.

While 71% of contact center leaders expect generative AI to have a major impact, only 13% rate their digital and self-service experience as excellent. Closing that gap calls for a more tactical approach.

Does generative AI have the power to transform customer experience? Absolutely. But to deliver excellence, we must optimize how we prepare and structure information. Chunking is a simple yet effective method to start this optimization, ensuring AI systems can provide accurate, relevant, and high-quality interactions.

Plus… content that’s written for audience accessibility is just good practice. Your site audience, whether internal or external, won’t always encounter the information they need in a generative experience. When you make content consumable, readers can digest it and get back to what they were doing (i.e., using your product or service). It also takes strain off knowledge managers, who can parse and maintain content with ease.

Want to chat with an AI expert who can offer other practical advice to get your generative AI POC underway? Schedule a call with a Coveo expert today.

Dig Deeper

Content preparation is one of many steps to a successful generative AI implementation. Get other real-world insights and practical tips in our free-to-download Blueprint to Generative Answering in Digital Self-Service.