It’s never a good idea to implement transformative technology without a solid strategy in place. It’s downright detrimental to do so without a foundation to support the new initiative. And yet, that’s exactly what many companies have been doing when it comes to implementing AI tools, processes, and workflows into their organizations.

According to data from MIT, 95% of generative AI pilots ultimately fail. The ones that succeed tend to pair a clear use case with a solid data foundation that can support scaling.

A recent webinar co-hosted by TSIA and Coveo addresses the challenges of meeting user expectations around AI when scaling across a huge knowledge base. While a pilot might work in a controlled environment, the lack of a clear plan and solid data foundation creates a chasm that often prevents companies from successfully scaling AI applications to the rest of the enterprise.

The webinar highlights how UKG, which provides a workforce operating platform, used AI to transform the self-service experience for tens of thousands of customers. Their journey started nearly a decade ago, when they focused on fixing their knowledge layer long before full-scale AI integration was on their radar.

The Self-Service Imperative

Business customers largely prefer to self-serve when attempting to resolve issues or answer questions. Dave Baca, Director, Support Services and Field Services Research at TSIA, notes that self‑service and AI-powered solutions are often the initial point of contact for support issues. In a recent TSIA survey, 87% of B2B customers said they actively use AI tools before contacting support.

The preference for digital-first support extends to both self-service portals and Google search. Whether on or off your website, people are using AI regularly, which raises the bar for what they expect from self-service solutions. Users want their questions answered seamlessly and effortlessly, and they’re demanding increasingly sophisticated answers.

Meeting these expectations requires a few key ingredients, including:

- Avoiding the “bolt-on” approach to AI. Plugging AI into a few select systems makes it impossible to scale.

- A foundational approach that starts with unifying access to company knowledge. This lets you support both the breadth and depth of what customers are asking for.

- Applying strong machine learning models before generative AI enters the picture. This ensures you’re serving up relevant content based on who the customer is, without the need for manual configuration.

Without these ingredients, even the most sophisticated AI will struggle to deliver fast, accurate answers.

UKG’s Challenge: The Knowledge Ecosystem Problem

UKG is a global provider of HR, payroll, and workforce management solutions, spanning multiple industries and serving many of the largest enterprise companies. As a mature organization, they have a robust knowledge ecosystem with over 70,000 assets across 11 systems. While all of that content was valuable, it wasn’t easy to find.

For UKG’s customers and internal teams, searching across their many systems could be a frustrating slog. “Our customers sometimes needed to navigate to multiple places to find the information they needed,” said Keri Rogers, Principal Customer Experience Analyst at UKG. “Our internal teams were also struggling to find information. They knew it was there, but finding it was a challenge.”

Fundamentally, this is a silo problem. The knowledge existed, but it was scattered across tools, teams, and experiences. This hurt the experience in a few ways:

- Time-consuming searches: Users had to manually check multiple repositories to find a single answer.

- Inconsistency: Because content was managed in isolation, a customer might find one answer in the community and a different one in the documentation.

- Fragmented update processes: Without a unified search layer, there was no way to ensure that a content refresh in one system carried through to all channels.

These issues made it difficult for UKG to scale support experiences as the company grew, much less layer AI into their service experience in a way that was sustainable. Before they could do either of these things, UKG had to solve their knowledge ecosystem problem.

The Foundation-First Approach

UKG started working on the foundation of their AI infrastructure about eight years ago, long before generative AI entered the picture. They needed to make it easier for customers and internal teams to find what they needed without bouncing between systems.

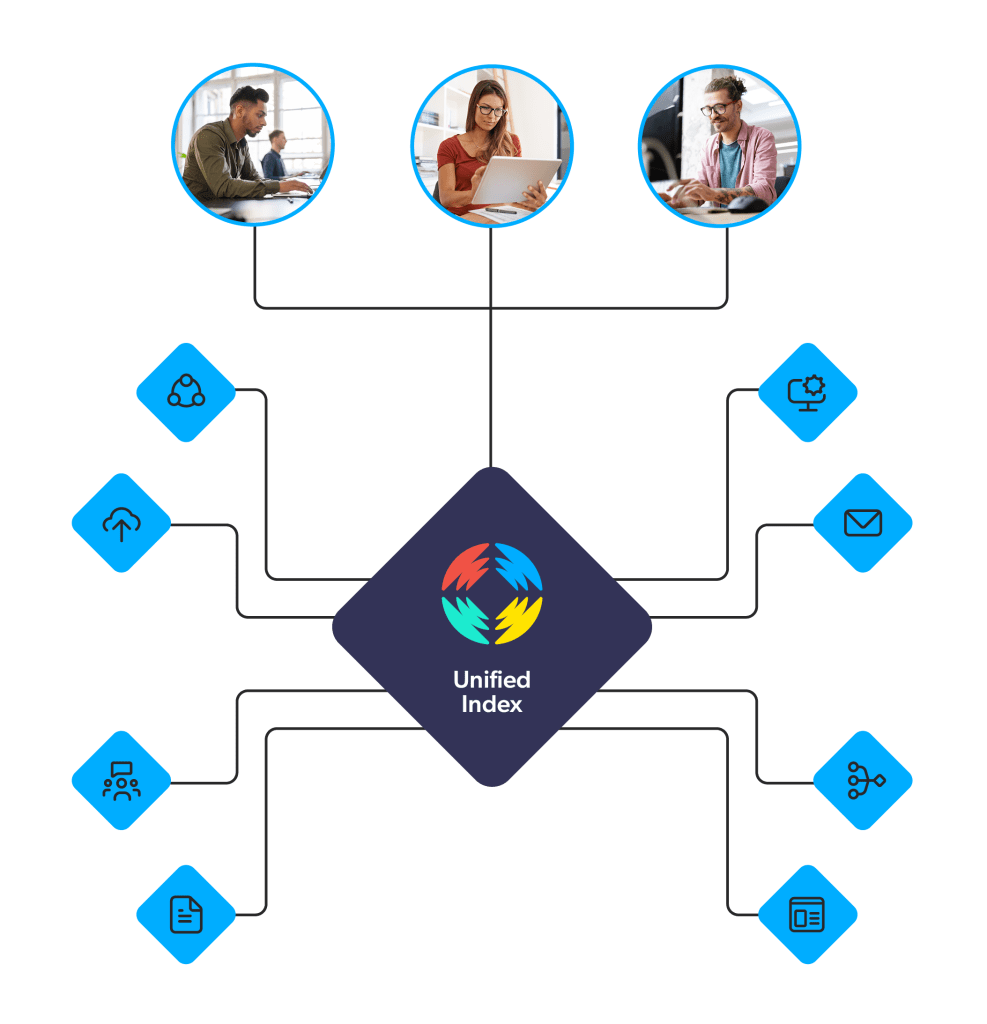

To address this, UKG partnered with Coveo to create a unified search index. The index aligns content—including metadata and structure—so it can be retrieved in a clean, consistent way.

This step is easy to gloss over, but it’s the difference between a unified index that feels effortless and one that just dumps results onto a page. It is also why managing metadata is so important when connecting disparate sources.
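To make the idea of aligning metadata concrete, here is a minimal Python sketch of normalizing records from two different sources into one shared schema at index time. It is not Coveo’s implementation or API: the source names, field names (`article_title`, `post_text`, and so on), and the `IndexedDoc` schema are hypothetical stand-ins chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IndexedDoc:
    """One record in a hypothetical unified index schema."""
    doc_id: str
    title: str
    body: str
    source: str
    product: str
    updated: str  # ISO 8601 date, e.g. "2024-05-01"


# Hypothetical mappings: each source system names the same concepts differently.
FIELD_MAPS = {
    "knowledge_base": {"title": "article_title", "body": "content",
                       "product": "product_line", "updated": "last_modified"},
    "community": {"title": "subject", "body": "post_text",
                  "product": "category", "updated": "edited_at"},
}


def normalize(source: str, raw: dict) -> IndexedDoc:
    """Map one raw record from a source system onto the shared schema."""
    m = FIELD_MAPS[source]
    return IndexedDoc(
        doc_id=f"{source}:{raw['id']}",  # prefix avoids ID collisions across systems
        title=raw[m["title"]].strip(),
        body=raw[m["body"]].strip(),
        source=source,
        # Lowercase so "Payroll" and "payroll" become one facet value.
        product=raw[m["product"]].strip().lower(),
        updated=raw[m["updated"]][:10],  # keep only the YYYY-MM-DD part
    )


def build_index(batches) -> dict:
    """batches: iterable of (source, list_of_raw_records) pairs."""
    index = {}
    for source, records in batches:
        for raw in records:
            doc = normalize(source, raw)
            index[doc.doc_id] = doc
    return index


def facet(index: dict, product: str) -> list:
    """IDs of all documents for one product, whichever system they came from."""
    return sorted(d.doc_id for d in index.values() if d.product == product)
```

Because every record lands in the same schema, one query or facet such as `facet(index, "payroll")` can span both systems. Normalizing values like product names at index time is what keeps those facets from splintering into near-duplicates.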

Security was also a priority. UKG serves customers, partners, and users with different levels of access, especially in its community. Coveo managed security and permissions by indexing the native permissions of the source systems. That ensured people only saw what they were authorized to see. Those permissions continued to flow through as UKG built new experiences on top of the same foundation.


The Evolution: From Search to Agents

AI capabilities are evolving quickly, from the simple question-and-answer pairs of just a few years ago to true agentic systems with conversational abilities. Agentic AI can deliver a more human, conversational experience and make decisions independently of human operators. Knowledge management is increasingly what enables these agentic systems, and getting there is a staged maturity process that breaks down roughly as follows:

Stage 1: Unified AI Search (7-8 years ago)

UKG’s transition from being AI-ready to being Agentic AI-ready began years before ChatGPT was a blip on most people’s radar. By creating a unified search index, they centralized access to content sources across the enterprise.

Because the index carried over each source system’s native permissions, users only saw what they were authorized to access. With content unified, UKG had a single search experience across all knowledge sources and could apply machine learning models for relevance and personalization, making it even easier for users to find what they needed quickly.


Stage 2: Generative Answering (1 year ago)

Establishing a unified index allowed UKG to reach the next milestone, moving from simple search results to direct answers. About a year ago, UKG integrated generative answering into their case creation workflow. When a user fills out a support request, they’re sent to an interim page with a list of recommended solutions based on their issue.
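The retrieval step behind an interim page like this can be sketched as follows. This is a simplified illustration under stated assumptions, not UKG’s or Coveo’s actual pipeline: plain term-overlap scoring stands in for the machine learning relevance models mentioned earlier, the article records are hypothetical, and the generative step that would turn retrieved content into a direct answer is left out.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; a real system would also stem and drop stop words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def suggest_solutions(case_text: str, articles: list[dict], k: int = 3) -> list[str]:
    """Rank indexed articles by term overlap with what the user typed into the case form."""
    query = Counter(tokenize(case_text))
    scored = []
    for art in articles:
        terms = Counter(tokenize(art["title"] + " " + art["body"]))
        # Count shared terms, capped by how often each appears in the query.
        score = sum(min(count, terms[t]) for t, count in query.items())
        if score > 0:
            scored.append((score, art["id"]))
    scored.sort(key=lambda pair: (-pair[0], pair[1]))  # best score first, ties by ID
    return [doc_id for _, doc_id in scored[:k]]


def on_case_submit(case_text: str, articles: list[dict]) -> tuple[str, list[str]]:
    """Show an interim page when there is something to suggest; otherwise open the case."""
    suggestions = suggest_solutions(case_text, articles)
    if suggestions:
        return ("interim_page", suggestions)
    return ("create_case", [])
```

In a generative-answering setup, the top-ranked content would then serve as grounding for the generated answer, so the quality of that answer depends directly on how good this retrieval step is.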

Rogers noted that this approach has been a game-changer for users who aren’t self-service savvy.

“We’ve seen amazing success with this solution,” said Rogers. “Customers love having the answer on the spot.” Because the underlying data is refreshed every ten minutes, the answers stay relevant wherever customers are accessing them. The result for UKG has been higher deflection rates and increased customer satisfaction.

Stage 3: Conversational AI Agents (Current/Pilot)

The final stage of UKG’s AI maturity is still underway. It’s the transition to conversational agentic AI, and it’s possible because the infrastructure is already there to support it. UKG is currently piloting this through their UKG Community Assistant initiative, which allows customers to have a back-and-forth conversation with a chatbot. Unlike traditional chatbots that rely on rigid scripts, the assistant is grounded in the same unified index UKG built years ago.

With the content foundation already in place, the team could focus their energy on tuning prompts and making sure the agent experience was on point. This shift from managing content to perfecting interactions is what makes agentic AI feel more human and helpful.

UKG’s preliminary outcomes include:

- Improved self-service success rates: Customers find what they need faster without needing to open a ticket.

- Increased case deflection: UKG is seeing both increased implicit and explicit deflection as AI intercepts more requests.

- Lower support employee attrition: Reducing the volume of repetitive “how-to” questions leads to higher job satisfaction for support engineers.

- Higher customer satisfaction scores: Providing instant, accurate answers directly correlates with higher transactional satisfaction.

Guiding Principles for Success

Agentic systems like UKG’s are largely in the pilot phase, but there are some best practices that can help ensure that they’re successful (and that means scalable as much as it means effective).

1. Start with the Customer, Not Technology

When building AI solutions, start with the customer, not technology.

“Customers don’t care if it’s AI or not,” said Rogers. “They just want their questions answered faster.”

Focus on real needs and outcomes, not hype, and use what you’re already seeing in your data and feedback. What customers search for, and what they ask both AI agents and human agents, is the clearest indicator of what they need.
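As a small illustration of mining that data, the sketch below counts the queries that still ended in a support case, a rough signal of content gaps, and computes the share of search sessions resolved without a case. The log format (a `query` string and an `opened_case` flag per search session) is a hypothetical assumption; real search analytics will record different fields.

```python
from collections import Counter


def content_gaps(log: list[dict], top_n: int = 5) -> list[tuple[str, int]]:
    """Most frequent (normalized) queries whose sessions still ended in a support case."""
    gaps = Counter(
        entry["query"].strip().lower()
        for entry in log
        if entry["opened_case"]
    )
    return gaps.most_common(top_n)


def self_service_rate(log: list[dict]) -> float:
    """Share of search sessions that did not end in a support case."""
    if not log:
        return 0.0
    resolved = sum(1 for entry in log if not entry["opened_case"])
    return resolved / len(log)
```

Queries near the top of `content_gaps` are good candidates for new or improved articles before any AI is layered on top.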

2. Fix the Foundation Before Adding AI

Knowledge management underpins support service success, which makes it the main driver of AI maturity. If you deploy AI without first fixing the knowledge and search retrieval layer, your best AI intentions will fail, and that failure shows up as poor ROI and AI pilots that don’t scale.

Understanding generative AI risks helps you create the infrastructure required to make these tools work at scale.

3. Build What Differentiates, Buy What’s Perfected

When creating an AI game plan, focus on the experiences that truly differentiate your brand. For everything else, use technology (like unified search platforms) that is field-tested and perfected by others rather than reinventing the wheel.

By letting a partner handle the heavy lifting of infrastructure, you can dedicate your team’s energy to unique competitive advantages. Settling early whether to build or buy AI search helps you plan a clear path forward.

4. Security and Permissions Can’t Be Afterthoughts

Enterprise AI only works when you have robust access controls; otherwise, trust collapses fast. UKG had to solve this early because their community serves customers, partners, and users with different levels of access. Their approach was not to recreate permissions manually for AI.

Instead, Coveo indexed the native permissions already defined in each source system. That way, the platform automatically recognized what each user was authorized to see, from specific Salesforce cases to community discussions and articles. Because this foundation was established years ago, those permissions carried over into the new AI functionality without any extra configuration.
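The sketch below illustrates the general pattern of storing each source system’s access-control list with its documents and filtering at query time. It is a hypothetical Python model, not Coveo’s API: the principal strings (`user:<name>`, `group:<name>`) and the `Doc` and `User` types are assumptions. What it shows is that search results and the context handed to an AI feature pass through the same filter, so the AI layer needs no separate permission setup.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Doc:
    doc_id: str
    text: str
    allowed: frozenset  # principals copied from the source system's ACL at index time


@dataclass(frozen=True)
class User:
    name: str
    groups: frozenset


def principals(user: User) -> set:
    """Every identity the user carries: their own ID plus each group they belong to."""
    return {f"user:{user.name}"} | {f"group:{g}" for g in user.groups}


def secured_search(docs: list, user: User, term: str) -> list:
    """Matching doc IDs, keeping only documents the user's principals may read."""
    ids = principals(user)
    return [
        d.doc_id
        for d in docs
        if ids & d.allowed and term.lower() in d.text.lower()
    ]


def answer_context(docs: list, user: User, term: str) -> list:
    """Grounding text for a generative answer, built from the same filtered results."""
    visible = set(secured_search(docs, user, term))
    return [d.text for d in docs if d.doc_id in visible]
```

Because the filter runs before any text is returned or passed to a model, a document a user cannot see never reaches the AI layer in the first place.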

5. Allow Time for Iteration and Learning

UKG spent seven years refining their search experience before generative AI entered the picture. That patience paid off.

When they rolled out generative answering and their Community Assistant, they first tested each internally with their support teams for about a month before releasing it to customers. This staged approach meant they could be confident in the answers and experience before scaling.

The reality is that iteration and learning take time, but they also help you prevent setbacks and pay off in the long run.

The Path Forward: Voice and Beyond

The next step for UKG involves expanding into voice-based AI experiences. It’s an evolution that will leverage the same secret sauce—the relevance and retrieval layer—that made their search and agents successful. It’s an approach that focuses on meeting customer expectations as they change and evolve, while providing an effective, modern service experience.

UKG’s journey demonstrates that the unsexy work of foundation-building is what ultimately enables exciting AI experiences. A seven-year commitment to their infrastructure is what set them up for generative (and now agentic) AI experiences.

Patient investment pays off. If you’re starting today, take a page from UKG’s book and fix your knowledge layer first. Then add AI to your service infrastructure. While it is tempting to chase the latest hype, the most successful deployments are those that prioritize a solid data foundation.