Jumping into the New Year, most of us would like to forget 2020 ever existed. However, then we would have missed some really great research on how to create highly personalized experiences in digital shopping.

While everyone talks about the importance of personalization in digital commerce, it turns out to be easier said than done. Machine learning has historically required mountains of data for predictions. But in B2C, if you aren’t an Amazon, eBay, or Netflix, it’s unlikely that shoppers will visit with any frequency, making data collection difficult. Yet bounce rates across verticals show that it is important to personalize as early as possible. Further, in B2B, you likely won’t have the advantage of aggregate data to help you create that personalized experience.

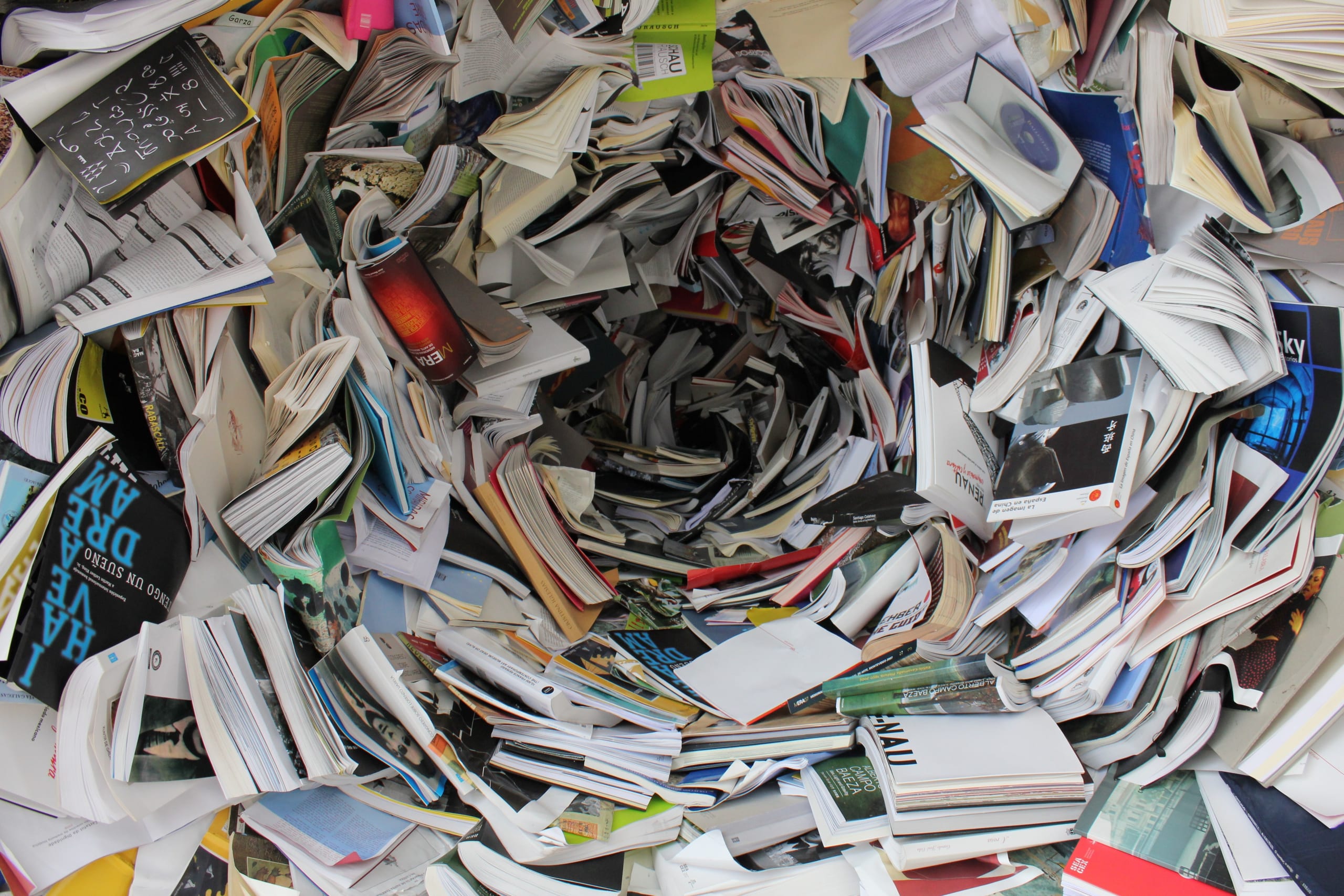

The good news is that a fast-evolving body of knowledge around personalization is available. Here is our must-read list of some of the most interesting pieces on product search published within the last year.

1. Treating Cold Start in Product Search by Priors

How can you automatically recommend or boost new products that don’t yet have a history? Managing cold start on ecommerce platforms has been a huge challenge. In this paper presented at WWW’20, the authors from Amazon look at the behavioral data associated with what is known about the product. They look at attributes like brand, type, color, artist, author, etc. They then can apply a score to the new product.

This is an interesting approach. It’s also philosophically in line with the product embeddings paper our Coveo colleagues presented at ACM’s RecSys conference (and a bonus paper if you are counting). Amazon’s paper addresses a general context of product representation for downstream tasks (not limited to learn-to-rank). Target tasks aside, the main technical difference between the two papers lies in how “proxied” entities are construed. Amazon uses a regression built with behavioral and categorical features, whereas we use a neural mapping from embeddings of catalog properties (e.g. BERT-encoded sentences) to regions of the product space.
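To make the idea of a prior concrete, here is a minimal sketch in Python. It is not the regression from the Amazon paper: as a simplified stand-in, it averages an observed behavioral score (say, a click-through rate) per attribute value and scores a new product by averaging the priors of its attributes. The function names, the `score` field, and the toy catalog are all hypothetical.

```python
from collections import defaultdict
from statistics import mean

def fit_attribute_priors(products):
    """Average the observed score per (attribute, value) pair."""
    buckets = defaultdict(list)
    for p in products:
        for attr, value in p["attributes"].items():
            buckets[(attr, value)].append(p["score"])
    priors = {key: mean(scores) for key, scores in buckets.items()}
    global_mean = mean(p["score"] for p in products)
    return priors, global_mean

def prior_score(attributes, priors, global_mean):
    """Score a product with no history from the priors of its attributes."""
    known = [priors[(a, v)] for a, v in attributes.items() if (a, v) in priors]
    # Fall back to the catalog-wide mean when no attribute value has been seen.
    return mean(known) if known else global_mean

# Toy catalog: products with history and a hypothetical behavioral score.
catalog = [
    {"attributes": {"brand": "acme", "type": "shoe"}, "score": 0.10},
    {"attributes": {"brand": "acme", "type": "shirt"}, "score": 0.20},
    {"attributes": {"brand": "zeta", "type": "shoe"}, "score": 0.30},
]
priors, global_mean = fit_attribute_priors(catalog)

# A brand-new "zeta" shoe inherits a score from its brand and type.
new_product_score = prior_score({"brand": "zeta", "type": "shoe"}, priors, global_mean)
```

A real system would likely replace the simple averages with a learned model, but the principle is the same: a product with no behavioral history borrows a score from what is known about products that share its attributes.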

2. Fantastic Embeddings and How to Align Them: Zero-Shot Inference in a Multi-Shop Scenario

Speaking of embeddings, this paper addresses the challenge of understanding user intent across multiple channels. This is key for multi-shop personalization, and proves that zero-shot inference is possible by transferring shopping intent from one website to another without manual intervention.

Our Coveo colleagues detail a machine learning pipeline to train and optimize embeddings within shops first, and support the quantitative findings with additional qualitative insights. They then turn to the harder task of using learned embeddings across shops: if products from different shops live in the same vector space, user intent – as represented by regions in this space – can then be transferred in a zero-shot fashion across websites.

They propose and benchmark unsupervised and supervised methods to “travel” between embedding spaces, each with its own assumptions on data quantity and quality. They then prove that zero-shot personalization is possible at scale by testing the shared embedding space with two downstream tasks: event prediction and type-ahead suggestions. The underlying dataset is scheduled for release in early 2021, with the aim of fostering an inclusive discussion of this important business scenario.

3. Why Do People Buy Seemingly Irrelevant Items in Voice Product Search?: On the Relation Between Product Relevance and Customer Satisfaction in Ecommerce

More relevance is always better, right? This paper, presented at WSDM (pronounced wisdom), actually analyzes an interesting phenomenon. In voice-assisted product search, customers are purchasing or engaging with seemingly irrelevant search results. The authors provide several hypotheses as to the reasons behind it, including users’ personalized preferences, the product’s popularity, the product’s indirect relation with the query, the user’s tolerance level, the query intent, and the product price.

4. Personalized Ranking in Ecommerce Search

Presented at WWW’20, this work discusses the challenges and benefits of incorporating personalization in ecommerce search. Interestingly, it recommends using a combination of content-based features and embedding-based features. This is a technique and topic that we at Coveo find fascinating and important.

The benefits the authors report, such as an improvement of 15 percent in Mean Reciprocal Rank (MRR), are in line with our own research in product embeddings. In the multi-tenant scenario, we have proved similar gains with in-session personalization for type-ahead, category prediction, and recommendations.
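For readers less familiar with the metric, MRR averages, over all queries, the reciprocal of the rank at which the first relevant result appears. Here is a minimal sketch in Python; the function name and the toy data are ours, not the paper’s.

```python
def mean_reciprocal_rank(ranked_lists, relevant_items):
    """Average of 1/rank of the first relevant result per query (0 if none is found)."""
    total = 0.0
    for ranking, relevant in zip(ranked_lists, relevant_items):
        for rank, item in enumerate(ranking, start=1):
            if item in relevant:
                total += 1.0 / rank
                break
    return total / len(ranked_lists)

# Three toy queries: the relevant item is ranked first, ranked second, and missing.
rankings = [["a", "b", "c"], ["d", "e", "f"], ["g", "h", "i"]]
relevant = [{"a"}, {"e"}, {"z"}]
mrr = mean_reciprocal_rank(rankings, relevant)  # (1 + 1/2 + 0) / 3 = 0.5
```

Because only the first relevant result counts, MRR rewards rankers that put the right product at the very top of the list, which is where personalization tends to matter most.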

5. A Transformer-based Embedding Model for Personalized Product Search

Presented at SIGIR 2020, this paper stands as a nice example of work by academicians (rather than industry practitioners) that nevertheless addresses a topic of great relevance to the industry as well.

We all love talking about the potential benefits of personalization, but the authors point out that personalization does not always improve the quality of product search. It has been argued that personalized models can outperform non-personalized models only when the queries of individuals significantly differ from the group preference. In fact, while applying a universal personalization mechanism sometimes could be beneficial by providing more information about user preferences (especially when the query carries limited information), unreliable personal information could also harm the search quality due to data sparsity and the introduction of unnecessary noise.

The authors stress the value of conducting differential personalization adaptively under different contexts and propose a transformer-based embedding model that can conduct query-dependent personalization. The paper offers results on the Amazon product search dataset to support the better performance of their model over state-of-the-art baselines.

6. Query Reformulation in Ecommerce Search

This is another great piece out of SIGIR 2020. This paper offers a thorough analysis of the role reformulation queries (query rewrites) play in ecommerce search. As you undoubtedly know, many website visitors quit when search results are irrelevant. But when they don’t quit, they typically refine. Sometimes they narrow their search; other times they broaden it. The authors show that well over 50% of the queries take part in a reformulation session.

They also distinguish between three types of reformulation: add (one or more words are added to the reformulated query), remove (one or more words are removed from the reformulated query), and replace (one or more words are replaced in the reformulated query). They argue that replace is the most common type, followed by add and finally remove. Each reformulation leads to a thorough change in the results presented on the SERP, in many cases without any overlap with the SERP of the previous query.
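The three reformulation types can be sketched with a simple token comparison. The Python snippet below is our own illustration, assuming whitespace tokenization and ignoring word order and repeated words; the paper’s exact matching rules may differ.

```python
def classify_reformulation(previous, current):
    """Label a query rewrite as add, remove, replace, or unchanged."""
    prev_tokens = set(previous.lower().split())
    curr_tokens = set(current.lower().split())
    added = curr_tokens - prev_tokens
    removed = prev_tokens - curr_tokens
    if added and removed:
        return "replace"  # some words dropped, others introduced
    if added:
        return "add"      # the shopper narrowed the query
    if removed:
        return "remove"   # the shopper broadened the query
    return "unchanged"

label_add = classify_reformulation("red shoes", "red running shoes")
label_remove = classify_reformulation("red running shoes", "running shoes")
label_replace = classify_reformulation("red shoes", "blue shoes")
```

Run over the consecutive queries of a session, a classifier like this gives a quick read on whether shoppers are mostly narrowing, broadening, or changing direction.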

7. Learning Robust Models for Ecommerce Product Search

Presented at ACL 2020, this paper addresses the problem of ecommerce search engines showing items that do not match the intent of the search query, with the goal of improving ranking performance in product search. The problem is not trivial. As the authors state, simple modifications to the input query can completely change the search intent, and it is hard for distributed representations to capture the nuances. Moreover, signals from clicks and purchases can lead to biases in the ranking algorithms. The paper is technically interesting and features many ideas (e.g. synthetic query generation) that can be fruitfully applied to other use cases.

8. Debiasing Grid-based Product Search in E-commerce

This paper looks at position bias associated with two- and four-column grids in ecommerce. The widespread usage of ecommerce websites in daily life and the resulting wealth of implicit feedback data form the foundation for systems that train and test ecommerce search ranking algorithms. While convenient to collect, however, implicit feedback data inherently suffers from various types of bias.

Position bias describes the phenomenon that items ranked higher are more likely to be examined than others and are therefore more likely to receive implicit feedback. Position bias confounds the causal effect of SERPs on users’ implicit feedback. Unbiased learning to rank methods have been proposed in recent years to mitigate this bias, and this work is a notable new attempt to address the unique challenges of debiasing product search for e-commerce.
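One common building block of unbiased learning to rank is inverse propensity weighting: each click is divided by the probability that its position was examined at all. The Python sketch below illustrates that general idea only, not the grid-specific method proposed in the paper. The propensity values and the click log are made up, and in a grid layout the position key could just as well be a (row, column) pair.

```python
from collections import defaultdict

def ipw_click_estimates(log, propensity):
    """Per-item click rate with each click weighted by 1 / examination propensity."""
    weighted_clicks = defaultdict(float)
    impressions = defaultdict(int)
    for item, position, clicked in log:
        impressions[item] += 1
        if clicked:
            weighted_clicks[item] += 1.0 / propensity[position]
    return {item: weighted_clicks[item] / impressions[item] for item in impressions}

# Hypothetical propensities: position 2 is examined half as often as position 1.
propensity = {1: 1.0, 2: 0.5}

# Toy log of (item, position, clicked). Raw click rates: A = 0.5, B = 0.25.
log = [
    ("A", 1, True), ("A", 1, False),
    ("B", 2, True), ("B", 2, False), ("B", 2, False), ("B", 2, False),
]
estimates = ipw_click_estimates(log, propensity)
```

In this toy log, item B looks half as attractive as item A on raw click rate, yet the two come out equal once its less visible position is accounted for.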

9. Predicting Session Length for Product Search on E-commerce Platform

Session length prediction is an important problem in ecommerce search. It can help with various downstream tasks, like user satisfaction prediction, personalization, and diversification. In this work, which was presented at SIGIR, the authors propose a neural network-based model that predicts session length from the user’s session sequence. Using the model’s predictions, a digital shop could automatically control the degree of explore vs exploit in the SERP.

10. Shopper intent prediction from clickstream E-commerce data with minimal browsing information

With the majority of all fashion interactions happening online, predicting whether a shopper will buy or not is highly valuable. In fact, this is known as the clickstream prediction challenge. In this paper published in Scientific Reports, the authors, including two of our colleagues, Jacopo Tagliabue and Ciro Greco, address the problem via two conceptually different approaches: a hand-crafted feature-based classification and a deep learning-based classification.

Through extensive benchmarking and optimization, they not only provide state-of-the-art performance on the task at hand, but also explicitly address the “reproducibility” issue of many similar studies, which were carried out on non-representative shops (e.g. Rakuten, Amazon, etc.). In particular, by systematically varying the “target conversion rate” in the test set, they provide precise guidelines to practitioners on how to optimize for their own cases.
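Varying the conversion rate of a test set can be approximated by downsampling one class of sessions. The Python sketch below shows one way to do that; it is an assumption on our part and not necessarily how the authors built their test sets. The function name, the `purchased` field, and the numbers are hypothetical, and `target_rate` must lie strictly between 0 and 1.

```python
import random

def resample_to_conversion_rate(sessions, target_rate, seed=0):
    """Downsample one class so the share of purchase sessions hits target_rate."""
    rng = random.Random(seed)
    buys = [s for s in sessions if s["purchased"]]
    no_buys = [s for s in sessions if not s["purchased"]]
    # Non-purchase sessions needed if every purchase session is kept.
    needed_no_buys = round(len(buys) * (1 - target_rate) / target_rate)
    if needed_no_buys <= len(no_buys):
        no_buys = rng.sample(no_buys, needed_no_buys)
    else:
        # Too few non-purchase sessions: keep them all and downsample purchases.
        needed_buys = round(len(no_buys) * target_rate / (1 - target_rate))
        buys = rng.sample(buys, needed_buys)
    sample = buys + no_buys
    rng.shuffle(sample)
    return sample

# 200 toy sessions with a 10% conversion rate, resampled to 25%.
sessions = [{"id": i, "purchased": i < 20} for i in range(200)]
test_set = resample_to_conversion_rate(sessions, target_rate=0.25)
rate = sum(s["purchased"] for s in test_set) / len(test_set)
```

Evaluating the same classifier on test sets built at several target rates shows how its precision and recall would hold up on a shop whose conversion rate differs from the one it was trained on.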

This industry-academia collaboration (with authors from four countries) has a public companion dataset, which features more than 5M distinct shopping events and is freely available to researchers and practitioners interested in replicating our findings or exploring different use cases (e.g. in-session personalization).