Few technological developments of the past few years have been as exciting as generative AI tools such as ChatGPT. Almost everyone has an opinion on them and how they will change the workplace.

According to Gartner, 68% of executives feel this technology provides benefits that outweigh any vulnerabilities. If it improves business processes and helps move an organization into the future, that’s a good thing, right?

But not every executive may fully understand just what AI risks exist or the cost that comes with even just one of them. They may also not realize that there’s a secure AI alternative to popular large language models (LLMs) like ChatGPT, one that companies can have more control over.

What Does It Mean to Be Secure?

Before we can explore secure AI, let’s look at the responsibilities an organization has to its data (and, as a result, to the customers who own that data).

Security ensures data is protected against unauthorized use, whether from outside entities (like hackers) or from an employee getting access to information they shouldn’t have. Secure data can’t be damaged, corrupted, or misused. The systems that collect, use, and store it should also be secure.

Privacy involves all aspects of data that preserve an owner’s right to use and share data as they choose. It respects their wish to keep data confidential or to decide which companies or applications have access to it and for how long. This may require consent as well as well-written privacy policies.

Compliance is anything related to the law or your own internal policies toward data handling. If a new law goes into effect, such as the California Consumer Privacy Act (AB 375), your data processes need to flex to meet this new set of requirements. Compliance keeps you out of trouble legally, financially, and with the reputation of your brand.

How can AI be secure?

You may not have thought about the security of AI at all. When employees casually use ChatGPT to brainstorm new marketing ideas or to outline a presentation, data security is rarely a concern at the individual level.

Yet, legal teams have been wrestling with how to give employees the best of both worlds: up-to-date AI development and uncompromised data handling without undue risk. These teams also understand they can’t afford to get it wrong. The average data breach costs an organization $4.5 million in damages and lost business opportunities.

AI safety is a tricky balance, but one that we have an answer for: it’s Coveo’s “Secure By Design” mission.

How Secure By Design enhances AI

Generative AI has its own unique challenges, the first being that it seems so harmless. Everyone is using it, and it’s just too useful to ignore. But Coveo has proactively identified the four top challenges that come with AI security and has a commitment to solving them.

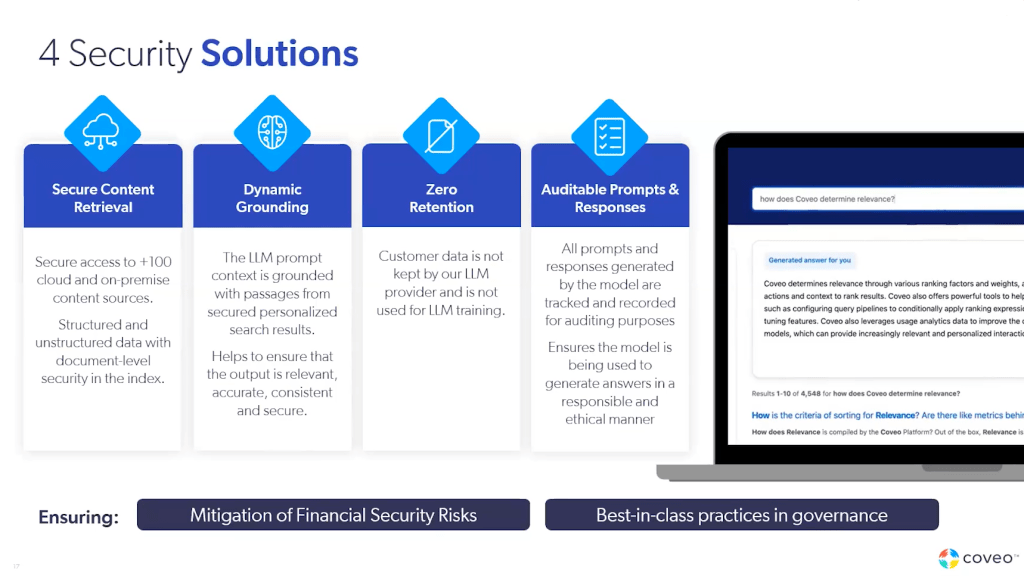

1. Security of prompts and retrieved content

An employee could conceivably type anything into the ChatGPT prompt box, even confidential customer financial information or details of medical records. Coveo’s AI solutions use safeguards to stop tampering, misuse, or unauthorized access.

Since we’ve connected securely to over 100 cloud and on-premise content source types, we can ensure secure interactions between them. Structured and unstructured data can have document-level security tied to the index to keep sensitive data types out of the wrong hands.
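As a rough illustration of document-level security (a minimal sketch, not Coveo’s actual implementation), each indexed document can carry the set of groups allowed to read it, and every query can be filtered against the user’s groups before any result is returned. The `Document` class, the `allowed_groups` field, and the `search` function are hypothetical names invented for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    """A hypothetical index entry; field names are assumptions for illustration."""
    doc_id: str
    text: str
    allowed_groups: frozenset


def search(index, query, user_groups):
    """Return IDs of documents that match the query AND that the user may read."""
    terms = query.lower().split()
    user_groups = set(user_groups)
    results = []
    for doc in index:
        # The permission check runs first, so restricted text is never
        # inspected on behalf of a user who cannot see it.
        if not (doc.allowed_groups & user_groups):
            continue
        if any(term in doc.text.lower() for term in terms):
            results.append(doc.doc_id)
    return results


index = [
    Document("kb-1", "How to reset a customer password", frozenset({"support", "sales"})),
    Document("fin-7", "Customer password for the billing ledger export", frozenset({"finance"})),
]

print(search(index, "password", ["support"]))  # ['kb-1']
print(search(index, "password", ["finance"]))  # ['fin-7']
```

The same query returns different results for different users, which is the point: the permission travels with the document rather than being enforced only in the user interface.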

2. Dynamic grounding for high-value content

We’ve seen how ChatGPT can “hallucinate,” making up information that doesn’t exist and conflating two types of information as the same. Coveo’s LLM prompt context is grounded with passages from secured personalized search results. All results will be relevant to the user and their permissions.

Since the results come from your own documentation and data (and not someplace far off on the web), you can ensure they’re accurate and timely, too. You are the source of truth for all things pertaining to your company, not some study or tutorial that doesn’t even exist.
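To make the idea of grounding concrete, here is a minimal sketch of how a prompt might be assembled from retrieved passages. It is an illustration under stated assumptions, not a description of Coveo’s pipeline: the `build_grounded_prompt` function, the passage IDs, and the instruction wording are all invented for this example, and the passages are assumed to have already been retrieved and permission-filtered for the current user.

```python
def build_grounded_prompt(question, passages):
    """Assemble a prompt that restricts the model to the supplied passages.

    `passages` is a list of (source_id, text) pairs that were already
    retrieved and permission-filtered for the current user.
    """
    if not passages:
        # With nothing to ground on, declining is safer than letting the model guess.
        return None
    context = "\n".join(f"[{source_id}] {text}" for source_id, text in passages)
    return (
        "Answer using only the passages below and cite the passage ID you used. "
        "If the passages do not contain the answer, say you do not know.\n\n"
        f"Passages:\n{context}\n\n"
        f"Question: {question}"
    )


prompt = build_grounded_prompt(
    "How long is the warranty?",
    [("kb-12", "All devices ship with a two-year limited warranty.")],
)
print(prompt)
```

Returning `None` when no passage is available lets the calling code show a "no answer found" message instead of sending an ungrounded question to the model.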

3. Zero retention after a response

Coveo’s customers own their data, and that will always be true. Your proprietary information isn’t used to create responses, and we don’t keep your prompts or responses for training data. It remains separate from our larger model, so you can ensure it’s only used for what you want it to be used for.

4. Trackable prompts

Aside from being secure, Coveo’s AI technology is also very eye-opening. While we don’t keep your information to train future ML models, we do give you the ability to see what your teams have searched for or put into the AI as prompts. Not only can this alert you to issues, such as when you see employees trying to input sensitive information, but you can also find patterns in their prompts that may lead you to a larger ethical issue within your teams.

This prompt history is also a learning opportunity. If you see a prompt for something you don’t yet have a resource for, that’s a signal to beef up your library with more of that content. It could also be the basis for a future training or upskilling opportunity that you would have never known about otherwise.
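As a simple illustration of how a prompt history could surface attempts to enter sensitive information, the sketch below scans logged prompts with a few regular expressions. The pattern set, the `flag_prompt` function, and the sample log are assumptions made up for this example; real detection would need much broader coverage and is not a description of Coveo’s tooling.

```python
import re

# Illustrative patterns only; they will miss many real-world formats.
SENSITIVE_PATTERNS = {
    "credit_card": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


def flag_prompt(prompt):
    """Return the sorted names of the sensitive patterns found in a prompt."""
    return sorted(
        name for name, pattern in SENSITIVE_PATTERNS.items() if pattern.search(prompt)
    )


prompt_log = [
    "Outline a presentation on Q3 marketing ideas",
    "Summarize the dispute for card 4111 1111 1111 1111",
    "Draft a reply to jane.doe@example.com about SSN 123-45-6789",
]

for entry in prompt_log:
    flags = flag_prompt(entry)
    if flags:
        print(f"Review needed {flags}: {entry}")
```

Flagged entries are a starting point for a conversation or a training session, in line with the point above that not every risky prompt comes from bad intentions.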

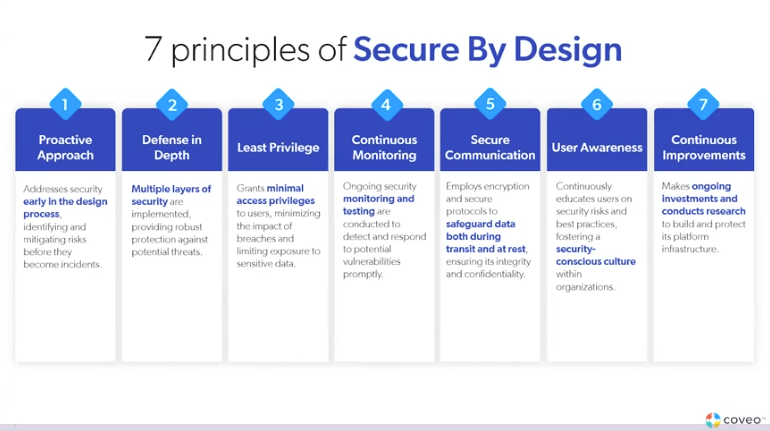

AI as part of a larger, secure environment

Coveo’s expansion into AI is a natural evolution: data security is a high priority, and not applying it to AI leaves companies vulnerable. Our Secure By Design mission follows these key principles in everything we do, which now includes artificial intelligence and machine learning models that your own teams can use in their day-to-day workflows.

1. Proactive approach.

Coveo doesn’t wait until a product or feature hits the testing stage. Security is in the DNA from the earliest idea stage. As the features make their way through the development process, risks are continually identified and mitigated so that they don’t pose problems after deployment.

2. Defense in depth.

Our data protection approach is multi-layered, so one attack can’t bring our defenses down. There’s strength in numbers, and that’s true for our cybersecurity protocols.

3. Least privilege.

Not everyone needs access to patient records or credit card numbers, and our solutions work best when access is identified and prioritized for the right user. This limits the exposure of sensitive information in the event of a security incident. With healthcare and financial services among the top industries to experience data breaches in 2022, it’s all the more important to safeguard data at all levels, even from within.

4. Continuous monitoring.

What wasn’t a risk yesterday could very well cause a breach today. That’s why it’s not enough to set up a secure system and hope for the best. We keep an eye on systems 24/7 to react promptly through continual threat detection. We test our systems to ensure security measures meet the ever-changing standards.

5. Secure communication.

We take nothing for granted when sending or receiving data, using encryption and proven security protocols to keep data where it should be. This same principle is applied to data once it’s at rest, too, so it remains confidential from point A to B and back again.

6. User awareness.

Not every internal risk is from bad intentions. Well-meaning employees may use generative AI tools in a way that’s not in line with your privacy policies without even knowing it. Education can be a powerful force against accidental harm. We work to train and educate users on the best ways to collect, use, and store data, in addition to how our technology can help with compliance.

7. Continuous improvements.

We are a technology solution company, and that means we never stop trying to do better. With ongoing investment into our systems and research to make new ones, we hope to earn your trust as a business that does things before everyone else and does them securely. That includes AI advancements to meet teams where they work.

Why now is the time to secure AI

Generative AI is here. Your employees are probably using it in at least one daily task. With more employees working remotely or doing office work in the evenings or on weekends, the chances that they will take your company information and put it into a ChatGPT-like tool are very high.

Hallucinations can corrupt GenAI outputs and make them virtually unusable. Experts have suggested asking for sources and further vetting information, but this puts the onus on the prompter to verify all responses. It’s not only a time-consuming process, but it’s largely outside of some users’ level of comfort and expertise.

With our Relevance Generative Answering AI solution, you can replace the unproven, inaccurate, and risky behaviors of standard GenAI with a solution that points users in the right direction every time, without putting your private data in danger.

We help you create an index of all your useful and truthful resources. When your teams put in their prompts, our deep learning technology scans your resources to find snippets that match their prompt. They only receive vetted, accurate, and sourced information that’s phrased like a human answering their question. This first-ever technology gives teams what they want while following responsible AI best practices.

Dig Deeper

Security is at the forefront of everyone’s mind, or at least it should be. From the death of third-party cookies to HIPAA to generative AI, companies are trying to understand how to keep data safe, secure, and compliant, all while still being able to deliver world-class digital experiences.

Watch this session to uncover how Coveo’s security practice and philosophy keep your data and users safe — without compromising on innovation.

Or download our free white paper on Enterprise Safety: 5 Pillars of GenAI Security.