Philosophy poses some big questions, such as “what do words mean?”

While answering those larger questions is outside the scope of this article, this is the kind of thinking Coveo’s AI Labs applies to real-world problems. Ciro Greco, VP of Artificial Intelligence (AI) at Coveo, took viewers on a tour of the linguistic, philosophical, and mental heavy lifting needed to approach an issue that is still underrepresented in the ecommerce industry: what he refers to as “reasonable scale.”

During his Relevance 360° Week presentation, entitled Dive Into AI Understanding User Intent with Language and Machines, Greco defined “reasonable scale” as the situation of companies that lack access to the large volumes of intent data available to tech giants such as Amazon or Google. Without gobs of historical data about an individual, how do you connect three words, like “grey tennis shoes,” to exactly what that unique shopper has in mind?

Well, it depends on how you frame the small data problem.

Can We Understand Complex Queries With AI Solutions?

A user enters a search for “Nike tennis shoes.”

For this query, the meaning is obvious to another person: show me Nike tennis shoes. A machine, however, has a hard time interpreting the query the way a person does. For a machine, the results depend on how the search parameters are set. A broad query brings back many documents related to the item, but not necessarily the item itself, which leaves the customer with a lot to sort through.

Even worse, if the search is too narrow, customers receive limited results, or no results at all when the query doesn’t precisely match items in the catalog. A narrow search also won’t surface other items the customer might be interested in.
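To make the broad-versus-narrow trade-off concrete, here is a minimal Python sketch of two naive keyword matchers over a tiny, made-up catalog. The product IDs, titles, and function names are hypothetical, and real search engines add stemming, ranking, and much more; this only illustrates the two failure modes described above, not how Coveo’s engine works.

```python
# Hypothetical toy catalog: product id -> title (illustrative data only).
CATALOG = {
    "p1": "Nike Air Zoom tennis shoe",
    "p2": "Nike running shoe",
    "p3": "Wilson tennis racket",
    "p4": "Adidas tennis shoe",
}


def tokens(text: str) -> set[str]:
    """Lowercase and split on whitespace (no stemming, so 'shoes' != 'shoe')."""
    return set(text.lower().split())


def narrow_search(query: str) -> list[str]:
    """Strict AND: return products whose titles contain every query term."""
    q = tokens(query)
    return sorted(pid for pid, title in CATALOG.items() if q <= tokens(title))


def broad_search(query: str) -> list[str]:
    """Loose OR: return products whose titles share at least one query term."""
    q = tokens(query)
    return sorted(pid for pid, title in CATALOG.items() if q & tokens(title))
```

With this toy catalog, `narrow_search("Nike tennis shoes")` returns nothing because no title contains the exact word “shoes,” while `broad_search` returns all four products, including a tennis racket.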

The underlying problem is a failure to understand the intent behind what the user typed in the search box. The user knew what they were looking for, but because the system didn’t grasp that intent, the results missed the mark.

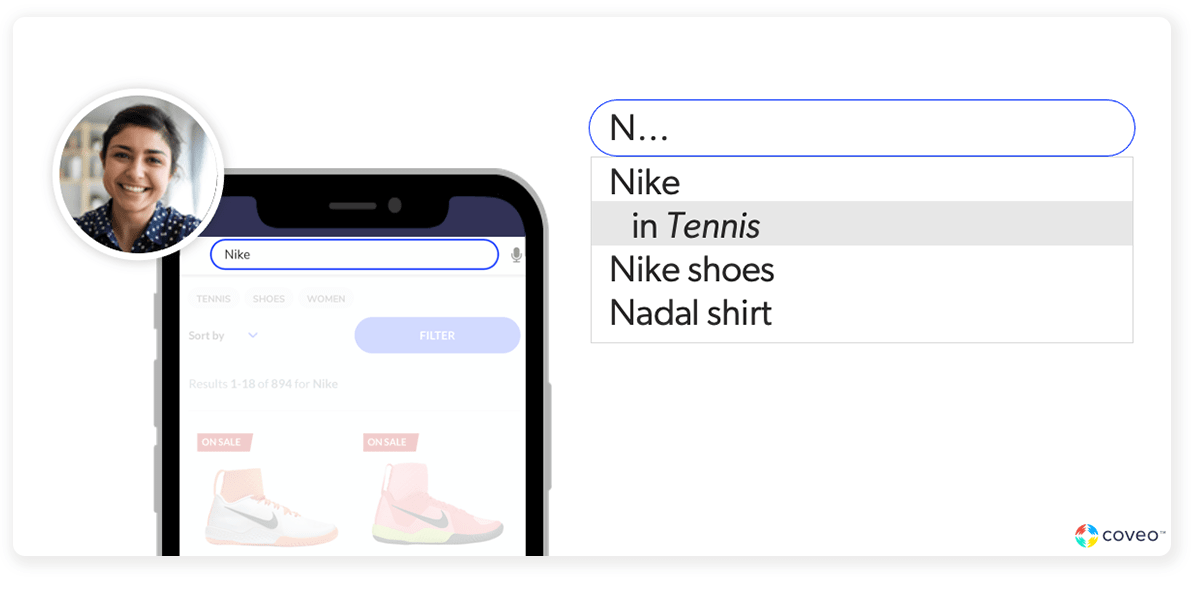

One way to overcome this uncertainty is through predictive analytics and query suggestions, as illustrated in the image above.

A machine learning model can offer suggestions to the searcher, with context pulled from the site’s product catalog. But intent varies from shopper to shopper, so this list won’t be useful to someone looking for soccer shoes. We need to suggest the right queries, the most relevant ones, to help a shopper explore a site’s product inventory. Even then, by merely suggesting queries, we’re still only guessing at a shopper’s intent rather than interpreting a linguistic expression.
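A minimal sketch of popularity-based query suggestions, assuming a hypothetical log of past queries on one site. It ranks past queries that start with whatever the shopper has typed by how often they were searched. This illustrates the limitation above: every shopper typing “nike” sees the same list, whatever they actually have in mind. It is a simplified stand-in, not the machine learning model the article refers to.

```python
from collections import Counter

# Hypothetical log of past queries on one site (illustrative data only).
QUERY_LOG = [
    "nike tennis shoes", "nike tennis shoes", "nike tennis shoes",
    "nike soccer shoes", "nike soccer shoes",
    "nike tennis racket",
    "adidas running shorts",
]


def suggest(prefix: str, k: int = 3) -> list[str]:
    """Return up to k past queries starting with prefix, most frequent first."""
    counts = Counter(q for q in QUERY_LOG if q.startswith(prefix.lower()))
    # Sort by descending frequency, then alphabetically so ties are stable.
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return [query for query, _ in ranked[:k]]
```

Here `suggest("nike")` puts “nike tennis shoes” first for everyone, including the shopper who came for soccer shoes.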

Greco explains that the challenge is understanding the user’s intent from a limited window. “We want to grasp the user intent from interaction with our system and we have a window that is not that big to guess what the intent is,” he says. “Search is a unique window where the user is telling you exactly what she wants.”

But all we have is three words: Nike+Tennis+Shoes. How do we teach a machine to interpret that query? The user knows the context and meaning; a machine does not. It can’t tell which term refers to the brand, which to the sport, and which to the type of footwear, and this small dataset doesn’t tell it.

There is also the problem of query similarity, for example “sneakers” instead of “shoes.” A synonym table can solve this, but the site must maintain that table manually.
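A hand-maintained synonym table can be sketched in a few lines of Python. The table contents and the `expand_query` helper are hypothetical; the point is that every entry has to be added by someone, so a term nobody thought of (here, “trainers”) gets no expansion.

```python
# Hypothetical, hand-maintained synonym table: each term maps to the
# extra terms it should also match. Someone has to keep this up to date.
SYNONYMS = {
    "sneakers": {"shoes"},
    "shoes": {"sneakers"},
    "tee": {"t-shirt"},
}


def expand_query(query: str) -> set[str]:
    """Return the query's terms plus any synonyms listed in the table."""
    terms = set(query.lower().split())
    expanded = set(terms)
    for term in terms:
        expanded |= SYNONYMS.get(term, set())
    return expanded
```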

Still, the question is, can we determine intent through machine learning? It all comes down to what clues and insight we can derive from the words in the query.

You’ll Know A Word By The Company It Keeps

Here we can start by looking to philosophy for a possible path.

“The question we are trying to answer is, ‘What do words mean?’” Greco says. “This is a philosophical question. We won’t solve it, but we will look at the solutions that have been provided.”

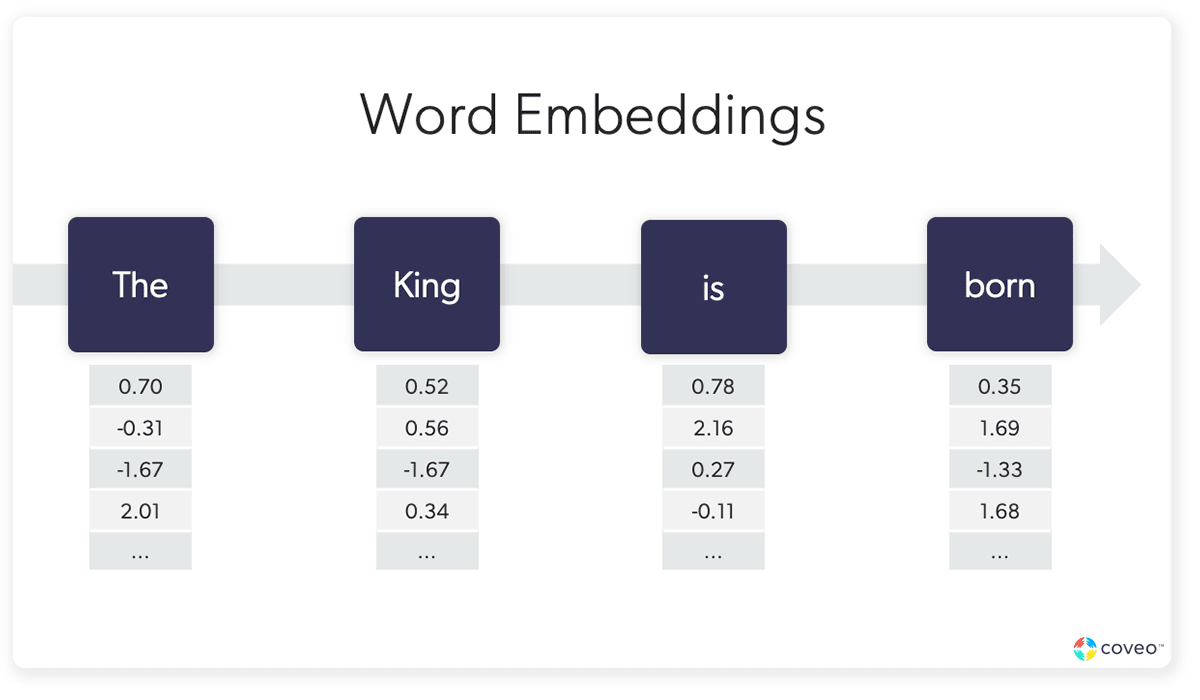

The John Rupert Firth quote above connects to a natural language processing (NLP) technique called word embeddings. This method assigns numbers to words based on how they are distributed relative to other words, that is, on the contexts in which they appear.
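To show what “the company it keeps” means computationally, here is a minimal count-based sketch: each word is represented by how often other words appear within a small window around it. This is a deliberate simplification; models such as Word2Vec learn dense vectors with a neural network rather than keeping raw counts, but they rely on the same distributional idea. The corpus and the `context_vectors` helper are hypothetical.

```python
from collections import Counter, defaultdict


def context_vectors(sentences: list[str], window: int = 2) -> dict[str, Counter]:
    """Represent each word by counts of the words within `window` positions of it."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for i, word in enumerate(words):
            lo, hi = max(0, i - window), min(len(words), i + window + 1)
            for j in range(lo, hi):
                if j != i:  # a word is not part of its own context
                    vectors[word][words[j]] += 1
    return dict(vectors)


# Hypothetical toy corpus of short, query-like phrases.
CORPUS = ["nike tennis shoes", "adidas tennis shoes", "nike running shoes"]
vecs = context_vectors(CORPUS)
```

Here “nike” and “adidas” both end up with counts for “tennis” and “shoes,” because they appear in similar contexts.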

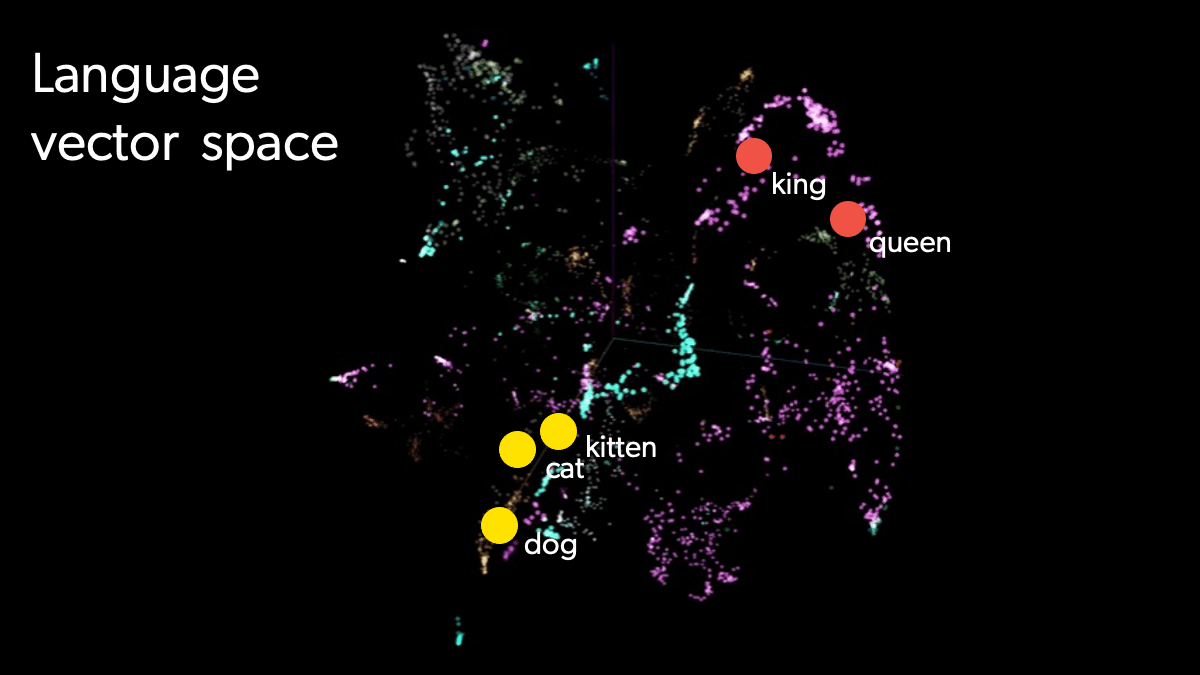

These embeddings give us word vectors: lists of numbers that place each word in relation to the others. These vectors let us map words in space, as in the picture above.

We can also calculate their distance from each other, providing us with an idea of how closely related they are.
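Relatedness between vectors is commonly measured with cosine similarity or Euclidean distance. The sketch below uses made-up two-dimensional vectors (learned embeddings typically have far more dimensions) to show that “shoes” sits closer to “sneakers” than to “racket” under both measures.

```python
import math

# Hypothetical 2-d word vectors, invented for illustration only.
VECTORS = {
    "shoes": [0.90, 0.10],
    "sneakers": [0.85, 0.15],
    "racket": [0.10, 0.90],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between a and b; 1.0 means same direction."""
    norms = math.hypot(*a) * math.hypot(*b)
    return sum(x * y for x, y in zip(a, b)) / norms if norms else 0.0


def euclidean_distance(a: list[float], b: list[float]) -> float:
    """Straight-line distance between two points (smaller = closer)."""
    return math.dist(a, b)
```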

“[Word embeddings are] very useful because we turn strings of characters, words, into numbers. Numbers can be manipulated with methods we understand very well. And those are methods that computers are pretty good at,” Greco says. “Another important point is that any model built like this is built on the assumption that the more data or text you process the more accurate they get. Very good language models like this are usually data-hungry.”

Using Domain Knowledge to Find a Solution

So the query “Nike, Tennis, Shoes” can be represented by three word vectors, from which our AI model can infer the semantic intent of the phrase. This is a great finding until you consider how much data a typical ecommerce site has: as it turns out, not very much. Shoppers’ search queries are short, as are the product descriptions in the catalog. Inferring linguistic context from so little data is hard.

Further complicating this issue is that an ecommerce site may support multiple languages. “Training a model in English is fairly easy. It is a very well represented language; you have a lot of libraries of linguistic resources you can use,” Greco says. “If you have to do the same thing in Italian, it will require a bit of legwork. In Vietnamese, it would require a lot of work.”

Now it’s time to apply industry domain knowledge to the potential solution. Is it practical given the constraints? This approach may work for big tech sites, but most ecommerce websites have small data. A site may have a reasonable amount of data overall, but because queries vary widely in popularity, many individual queries have only a small amount of data behind them.
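The long-tail effect can be checked with a little arithmetic over a query log. The log below is invented for illustration, and the `min_count` threshold of 5 is an arbitrary assumption: the sketch simply counts what fraction of distinct queries were seen fewer than that many times.

```python
from collections import Counter


def tail_share(query_log: list[str], min_count: int = 5) -> float:
    """Fraction of distinct queries seen fewer than `min_count` times."""
    counts = Counter(query_log)
    if not counts:
        return 0.0
    rare = sum(1 for c in counts.values() if c < min_count)
    return rare / len(counts)


# Hypothetical log: two popular queries plus a handful of one-off queries.
QUERY_LOG = (
    ["nike tennis shoes"] * 50
    + ["running shorts"] * 20
    + ["grey tennis shoes", "adidas basketball shorts",
       "wilson racket bag", "kids soccer cleats"]
)
```

In this made-up log of 74 searches, two popular queries account for 70 of them, yet 4 of the 6 distinct queries (about 67%) appear only once, leaving very little data per query.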

Therefore, a data-hungry model is not practical. When domain knowledge meets science, we find that the word embeddings solution will only work for the largest ecommerce sites.

Reframing the Small Data Problem

This situation led Coveo to take a different approach to small data, inspired by Bertrand Russell, who said:

Linguistic expressions are signs of things other than themselves.

The meaning of “shoe” is an actual shoe. This idea helps us because every ecommerce website has two things. One is the product catalog; the second is your shoppers’ actions during a session (i.e., moving from page to page). Together they provide the nouns (products) and verbs (user actions) of our ecommerce language.

The key here is that we have reframed the problem. We are not interested in the intent of all words or actions—just the ones in the space defined by your product catalog and your pages, creating a far smaller search area.

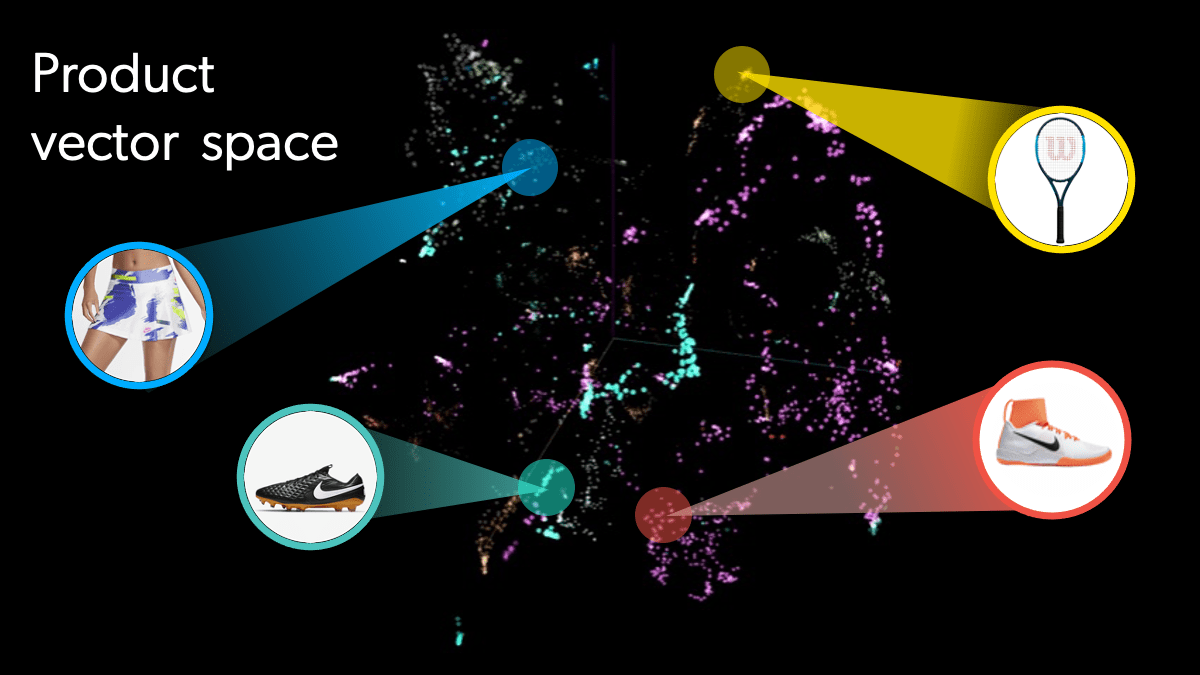

Using these two pieces of information, we can take products and, as with word embeddings, create product embeddings. A product embedding is based on the contexts in which that product appears during shoppers’ sessions on an ecommerce website. This lets us assign each product a vector indicating its relationship to other products. Once again, because each product is represented by a vector, we can plot it and calculate its position relative to other products. The result is a product vector space.
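A minimal sketch of the idea, assuming sessions are recorded as ordered lists of viewed product IDs (all IDs below are hypothetical). It describes each product by the products viewed next to it in a session, then compares products with cosine similarity. This count-based version is a simplification for illustration; it is not Coveo’s model, and production systems typically learn dense product vectors with a Word2Vec-style neural model trained on sessions instead.

```python
import math
from collections import Counter, defaultdict

# Hypothetical browsing sessions: each is an ordered list of viewed product IDs.
SESSIONS = [
    ["nike_court_shoe", "tennis_socks", "adidas_court_shoe"],
    ["adidas_court_shoe", "tennis_socks", "tennis_racket"],
    ["nike_court_shoe", "tennis_socks"],
    ["soccer_cleat", "shin_guards"],
]


def product_vectors(sessions: list[list[str]], window: int = 1) -> dict[str, Counter]:
    """Describe each product by the products viewed within `window` steps of it."""
    vectors = defaultdict(Counter)
    for session in sessions:
        for i, product in enumerate(session):
            for j in range(max(0, i - window), min(len(session), i + window + 1)):
                if j != i:
                    vectors[product][session[j]] += 1
    return dict(vectors)


def similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = math.hypot(*a.values()) * math.hypot(*b.values())
    return dot / norm if norm else 0.0


vecs = product_vectors(SESSIONS)
```

The two court shoes are never viewed right next to each other in these sessions, yet they come out as highly similar because both are viewed alongside the same tennis socks; the soccer cleat shares no context with them and scores zero.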

Just as Word2Vec derives the meaning of words from their relationships to other words, we can understand the meaning of “tennis racket” from its relationships in this product space. The same is true of shoes. This allows us to represent the query “Nike Tennis Shoes” as the sum of three vectors. Product embeddings give us a solution tailored to understanding a shopper’s intent in an ecommerce environment, one based on the product catalog and customers’ actions rather than on the broader category of all words and actions.
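Here is a minimal sketch of representing a query as the sum of its word vectors and ranking products by cosine similarity to that sum, assuming query words and products share one vector space. The three-dimensional vectors and product names are invented for illustration; in a real system they would be learned from catalog and session data.

```python
import math

# Hypothetical 3-d vectors; the dimensions loosely stand for "Nike",
# "tennis", and "footwear". Real learned embeddings are not this interpretable.
WORD_VECTORS = {
    "nike": [1.0, 0.0, 0.0],
    "tennis": [0.0, 1.0, 0.0],
    "shoes": [0.0, 0.0, 1.0],
}
PRODUCT_VECTORS = {
    "nike_court_shoe": [0.9, 0.8, 0.9],
    "nike_running_shoe": [0.9, 0.0, 0.9],
    "wilson_racket": [0.0, 0.9, 0.0],
}
DIM = 3


def query_vector(query: str) -> list[float]:
    """Sum the vectors of the query words that have embeddings."""
    known = [WORD_VECTORS[w] for w in query.lower().split() if w in WORD_VECTORS]
    if not known:
        return [0.0] * DIM
    return [sum(dims) for dims in zip(*known)]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    norms = math.hypot(*a) * math.hypot(*b)
    return sum(x * y for x, y in zip(a, b)) / norms if norms else 0.0


def rank_products(query: str) -> list[str]:
    """Return product IDs ordered from most to least similar to the query."""
    q = query_vector(query)
    return sorted(PRODUCT_VECTORS, key=lambda p: cosine(q, PRODUCT_VECTORS[p]), reverse=True)
```

For “Nike Tennis Shoes” the summed vector points most directly at the Nike court shoe, so it ranks first, ahead of the running shoe and the racket.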

This solution also addresses synonyms, because the analysis recognizes that “sneakers” are a subset of “shoes.” It also allows us to generalize across queries. For example, the structure of “Nike tennis shoes” is brand + sport + object type. If we train a machine learning model to recognize that structure, it can apply it when interpreting “Adidas basketball shorts.” The two queries have no words in common, but they share a common structure. The model can therefore make an educated guess about a query it has never seen before, which is useful when data is sparse, such as in product cold-start situations.
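To illustrate what a shared query structure looks like, the sketch below maps each term to an attribute type using a small hypothetical lexicon. In the approach described above, a model learns this structure from data; hard-coding it here is purely an assumption made for illustration.

```python
# Hypothetical lexicon mapping query terms to attribute types. A learned
# model would infer these roles; this table only shows what "structure" means.
ATTRIBUTE_TYPES = {
    "nike": "brand", "adidas": "brand",
    "tennis": "sport", "basketball": "sport",
    "shoes": "object_type", "shorts": "object_type",
}


def query_structure(query: str) -> tuple[str, ...]:
    """Map each term to its attribute type, or 'unknown' if the lexicon lacks it."""
    return tuple(ATTRIBUTE_TYPES.get(term, "unknown") for term in query.lower().split())
```

Both “Nike tennis shoes” and “Adidas basketball shorts” map to `("brand", "sport", "object_type")` even though they share no words.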

Now we apply science to test the effectiveness of this solution: does it beat established benchmarks? “We benchmarked it against several state-of-the-art models and it outperforms these models significantly,” Greco reported. “It is better performing in small data scenarios… It is very efficient when there is little data to go around, which is super important to our clients, as it means that it is cost efficient. We can train this model with less data and can train this model on a lower build of AWS.”

The key is that product embedding solutions work for ecommerce sites with terabytes rather than petabytes of data, making them practical for ecommerce sites outside the tech giants.

Leveling the Ecommerce Playing Field With Machine Learning

The research behind Greco’s presentation won the best industry paper award at NAACL 2021.

It’s important to note that big tech companies dominate ecommerce research. Coveo is one of the few companies focused on the broader ecommerce market, and in 2020 it was the only major contributor to elite ecommerce research outlets with that focus. Its applied research aims to bring AI and relevance capabilities to the wider ecommerce market.

“Coveo has a different type of customer. Our customers don’t necessarily process the same amount of data as Amazon,” Greco says. “They are often traditional retailers who are undergoing digital transformation. The range of problems that this kind of company has is not represented. We think this is important…Otherwise we will go on innovating from the point of view of the big data companies, which we do not think is good for these particular problems.”

Through its research and products, Coveo is leveling the playing field and raising the customer experience bar.

Dig Deeper

For more articles related to Coveo AI Labs, check out the AI Labs section of the Coveo Blog.

To find out more about Coveo’s contributions to the AI solutions research community, visit research.coveo.com.