Vector search uses machine learning (ML) techniques, such as approximate nearest neighbor (ANN) algorithm, to match and retrieve words and queries efficiently across unstructured data — which is why vector search is faster than most other kinds of search. What makes vector search powerful, is that we can turn up related concepts, not just keywords in an end user’s search result.

Information retrieval has long relied on keyword search, which looks for a word or phrase in a document as well as how often it appears in that document. It can’t match synonyms, understand sentence meaning, know the intent behind a query, or help with search relevance.

Fortunately, search has matured in the last 50 years to give us more relevant results that focus on user intent. Vector search is at the heart of that because we can ask a question in a conversational way and get relevant answers, fast.

Let’s break it down.

What Is A Vector Representation?

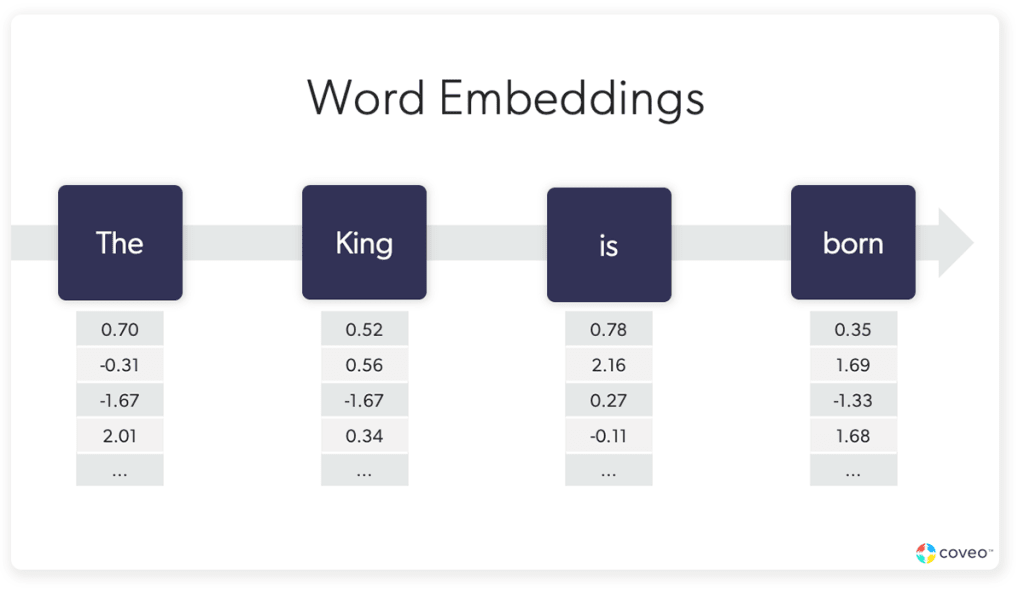

In a nutshell, vector search uses a procedure called embedding to turn words into lists of numbers (vectors) to measure vector similarity, or how similar those words are. The outputs of the process are called word embeddings, or also sometimes called a vector representation. These live in a vector space (a collection of vectors).

(In practice, “embeddings” gets used as shorthand to refer to both the mapping process and the end result, which can be a bit semantically confusing.)

This little workhorse is at the heart of other techniques that you hear more about, including semantic search, natural language processing, generative AI, and similarity search.

There are different technical variations in the exact way that vector search works, but the basic idea centers on the concept of ANN algorithm search in a vector space.

Why Is Vector Search Relevant for Unstructured Content?

Suppose we want to represent an idea, or a phrase of text, in a searchable way? Well, first we have to unpack what we mean by vector space. Think about a postal address of a person:

Clark Kent

366 Coolbaugh Street

Smallville, Iowa 52566 USA

Each of the parts of the address — name, house number, street name, town, state, ZIP code, and country — gives some unique information about where the person lives. The complete postal address ties all the address fragments together to nail down the location where postal mail should be sent.

There are methods to convert a phrase of text like “good food in paris” into a similar sort of address. This list of numbers (or vector representation), such as < 144 2 98 51 >, is where each number represents some word or salient part of the query. This list of numbers is an example of a vector embedding.

A vector embedding acts like a postal address, but instead of being made of pieces such as <street> <city> <ZIP>, these embeddings are made of numbers. A postal address lets you search a map for a place, while a vector lets you search a numerical map – a vector space – of concepts or other unstructured content.

While searching for places on a map, sometimes we are looking for a specific spot (366 Coolbaugh St), but often we are looking for something more general (“places to eat near Clark Kent’s house”). This idea of a search that returns closely related items (and not just exact matches) is what underpins the problem that vector search is trying to solve — how do we search for concepts that are related in meaning to my search query?

When we say, “The Buck Snort Restaurant is closer to Clark’s house than the Rainbow Cafe,” we are talking about a distance we would walk or drive. The closer place is a smaller distance from our starting point (say, one city block away from Clark’s house vs eight city blocks away).

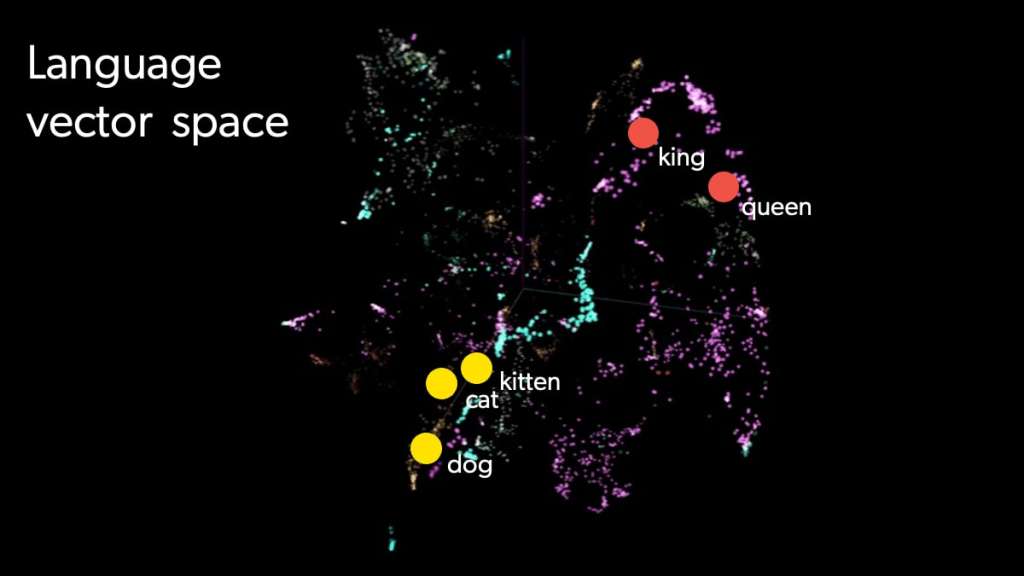

Searching for “businesses near Clark’s house” is a broader search than “cafes near Clark’s house”, but there is a clear relationship between restaurants and businesses. Vector searches use a sort of distance that works in the space of concepts, i.e., A is more closely related to B than C is.

Vectors Are a Math Thing

Vector search is a machine learning technique that’s been evolving for decades. It turns words into numbers and uses similarity metrics, or measures how similar those words are to one another. It’s (more than?) a little complicated, but we can make it more concrete by relying on some concepts from high-school math.

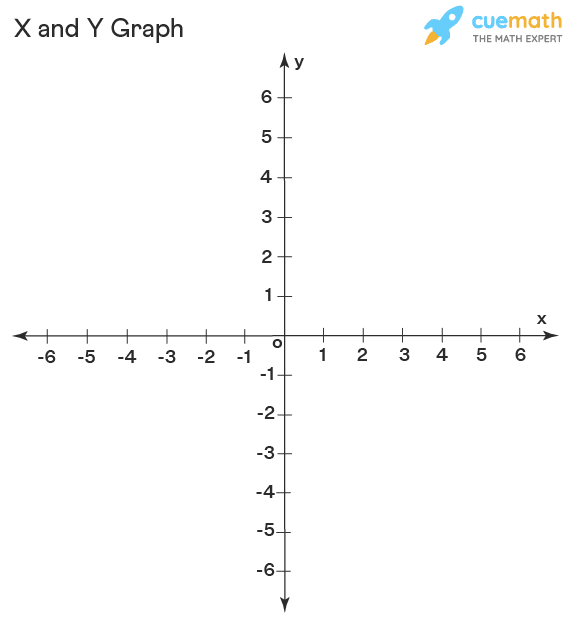

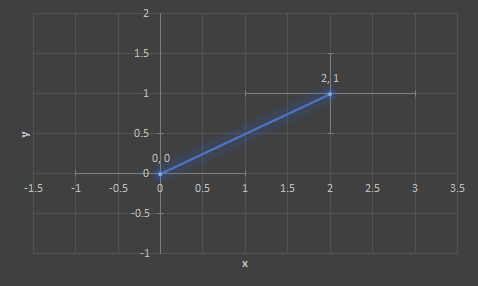

The line between two points is a vector, with one end at the origin, and the other end at a point. We represent it as the end point of the line segment.

Thinking of this in geometric terms makes it a bit more tangible. You have a line with a starting point (called the origin) and it extends out, say, six points to the left and six points to the right. From the same origin, the line extends six points up and six points down. (You can extend the lines to infinity, but for concreteness, we’re using a small range of numbers.)

If we turn these lines into a graph, the lines to the left and right are the x axis, and lines going up and down are the y axis. You can represent any point on the axis with numbers, with positive numbers to one side and negative to the other. We see this kind of two-dimensional figure, i.e., a flat plane, all of the time.

In Graph 2, our vector (or line end point) gets two numbers — one to represent the x axis and another to represent the y axis. Two dimensions means you need two numbers to describe a place in the vector space.

To imagine three dimensions, we would need to come out of the graph, as if we were coming off of a page. Three-dimensional points get three numbers.

For every dimension that is added (which is hard to imagine) a vector gets an additional number (which are sometimes referred to as dense vectors).

In machine learning applications, computer scientists will work with vectors in spaces with hundreds or thousands of dimensions. This certainly complicates our abilities to visualize them, as well as some of our intuitions about geometry, but the same principals of two and three dimensions apply.

Measuring Vector Similarity

So vectors allow us to turn unstructured data into numeric representations, and that data includes words, images, queries, and even products. Data and its vectors sync up through similarity – and show results that match searchers’ questions and intent.

We use similarity metrics to match data to queries. And this is where wading through the above paragraphs about lines, graphs, and vector space comes in.

When we talk about how related two pieces of unstructured data are, we need some way to measure the distance between them in the vector space. Vectors measure similarity (Remember the café and restaurant question above?) with angles. This means that the direction of the vector, rather than the length of the vector, is important. The direction of the lines determines the width of the angle, which is how we measure similarity.

Looking at our graph again, we see three vectors.

- Vector A is 2,1.

- Vector B is 3.2.

- Vector C is -1, 2.

The angle between vector A and vector B is much smaller than the angle between Vector A and Vector C.

Narrow angles tell us that things are closely related, even if one line segment is much longer than another. Again, we’re interested in the direction of the vector, not the length.

If there is 180-degree angle between two vectors, it tells you that they are anti-related, which can be valuable information. If the angle is 90 degrees, these two vectors have nothing to tell you about one another.

Measuring the similarity or distance between two vectors is called a cosine distance, because the actual computation of the distance (a number) uses the cosine function.

Look at a map of Manhattan and you’ll see that most of the streets run up-down (north/south) and left-right (east/west). When we need to see how far the best bagel store is from our hotel, someone will tell us that it’s three blocks up and one block down.

This is one way to measure distance — how far the bagel shop is from where I am (the origin) is called the Manhattan distance. But there’s also distance as the crow flies, which is a different measurement called Euclidean distance. There are many ways to measure distance, but these two examples give us the idea.

In vector search, closer means “more related” and further means “less related.”

How Does a Vector Search Engine Work?

There are a number of ways to calculate similarity in vector search. A simple cosine similarity match (looking at the angles between vectors) is called a naïve approach by technologists. It works, but it’s slow.

Other approaches use various types of indexing to speed up information retrieval. Thinking of indexing in terms of books is helpful. A geometry book might have an index of topics in the back. You would look for the word “vector”, find the page it was on, and flip to that page. It’s a lot faster than skimming the book to find information you need.

Indexing over a large document set achieves the same thing – you have a list of concepts and where you can find those concepts in your documents. When someone conducts a query, you can now quickly find the information that matches the query.

Vector search has several types of indexing, including the Nearest Neighbor Index (NNI), which is a type of similarity search. NNIs group similar vectors together and create short paths to vectors that are not similar. If we return to our book example, a NNI is kind of like a “see also” note in an index. If we look at our map of Manhattan, an NNI would group neighborhoods together and show you how they’re connected — Chinatown is nearer to Tribeca and farther from the Upper East Side.

In vector search, similarity is gauged by angles, whereas indexing makes finding that similarity easier by grouping vectors that are alike and creating paths between those that are alike and those that aren’t.

The result is that every vector in the index is connected to several other vectors in a structure that keeps the most similar vectors near to one another but ensures that even far away vectors have relatively short paths connecting them.

What Are the Limitations of Vector Search?

Vectors and embeddings represent the cutting edge of search architecture and address many of the pain points of traditional search methods, notes former Endeca chief scientist Daniel Tunkelang. For example, embedding vectors are less susceptible to words having multiple meanings or multiple words having the same meaning. And embeddings can be especially useful for handling long queries.

But, as is often the case with machine learning and other artificial intelligence techniques, it can be difficult to explain vector search and understand the results it returns.

Embeddings also tend to be task-dependent, meaning that they work best in environments they are created for. In contrast, word-based representations are more simplistic and flexible.

Despite these and more technical computational challenges, vector search continues to grow and return more relevant results for searchers.

Dig Deeper

Curious what Coveo is up to when it comes to artificial intelligence research? Check out our publications!