Have you thought about making a ChatGPT acceptable use policy yet?

Robots are in the workplace. According to McKinsey, 22% of employees are using them for their jobs on a regular basis. On top of that, 40% of businesses are looking to invest more in AI technology because of generative AI. This has all the makings of a workplace revolution set to transform every industry across the globe.

But as AI use becomes more commonplace, the issues won’t solely be about efficiency. In fact, you should already be thinking about how your ChatGPT policy aligns with the risks and rewards the technology brings. It’s essential for fostering ethical, inclusive, and productive environments while harnessing the potential of generative AI.

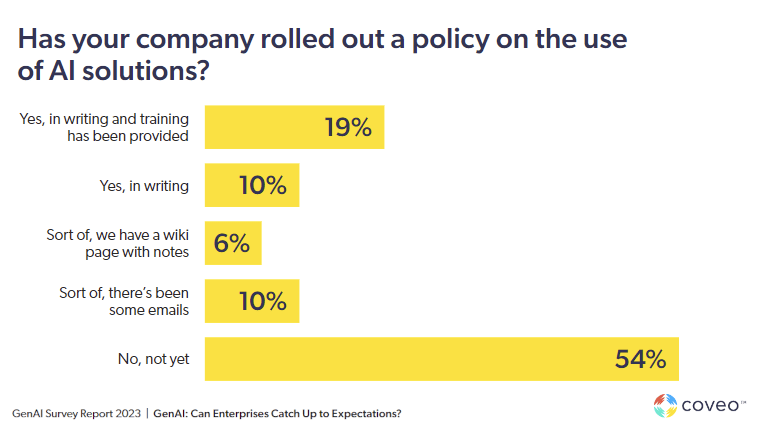

Don’t be one of the companies (54%) whose employees say their employer hasn’t rolled out a workplace policy on the do’s and don’ts of using generative AI at work.

Understanding AI and ChatGPT in the Workplace

Here are the basics of this technology and what it means for your company.

What is AI?

Artificial intelligence (AI) is a function of digital computing devices that allows them to do human-like tasks. For example, AI can scan text for meaning, create reasoned responses, and improve its outputs over time through the analysis of past data and machine learning.

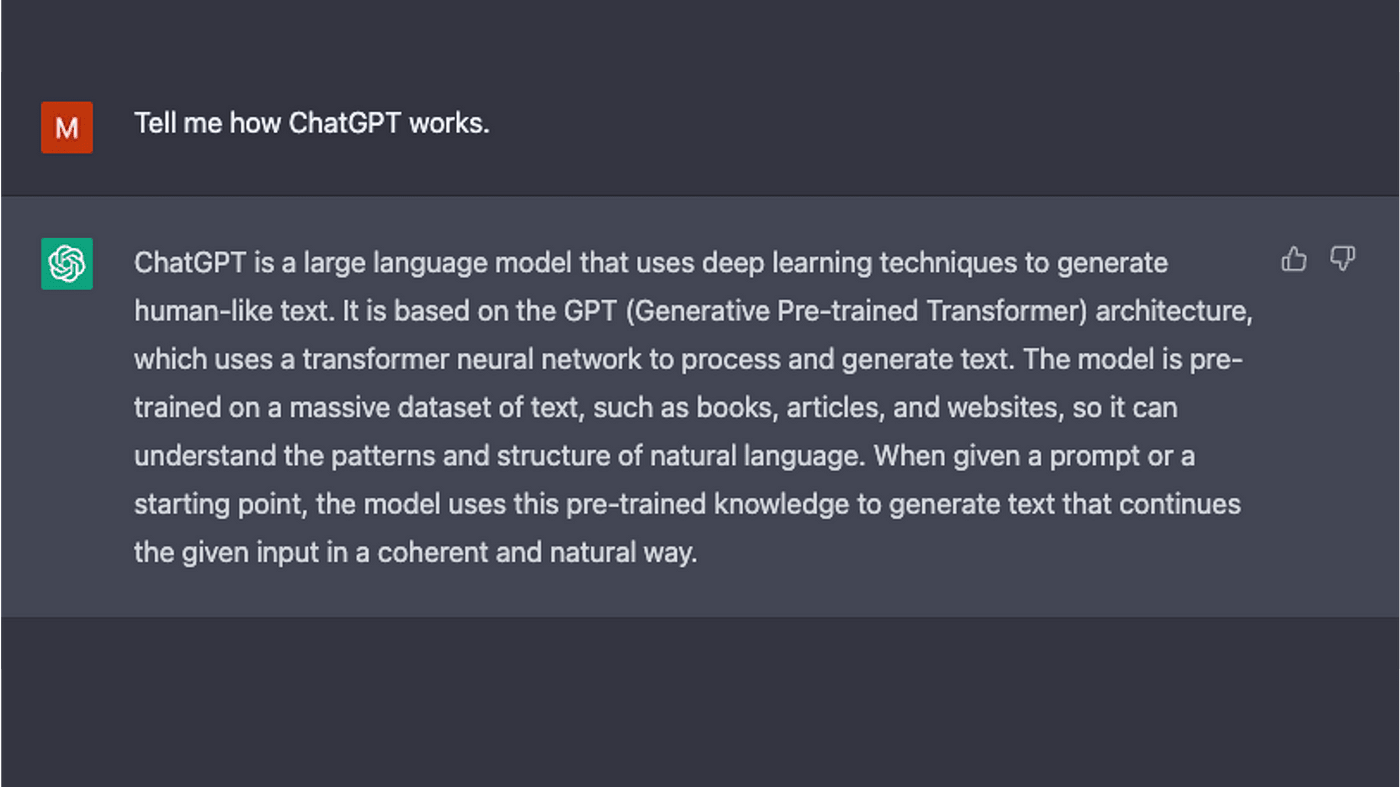

What is ChatGPT?

ChatGPT is one use of AI, specifically generative artificial intelligence. It is a chatbot created by OpenAI that uses large language models to predict the next word in a string of words. Users can ask it to answer questions in a set style, tone, or length. It’s the most popular AI chatbot used today and the basis for hundreds of tools for almost every kind of digital task.
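To make "predicting the next word" a little more concrete, here is a toy sketch in Python. It is not how ChatGPT works internally: real large language models use neural networks trained on enormous amounts of text, while this example simply counts which word most often follows another in one sample sentence. The function names and the sample sentence are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word in the text, which words follow it."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(following, word):
    """Return the most frequent follower of `word`, or None if the word is unseen."""
    counts = following.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Illustrative sample text, not real training data.
corpus = "the policy covers the tools and the policy covers the data"
model = train_bigrams(corpus)
print(predict_next(model, "policy"))
```

The same idea, scaled up enormously and with far more context than a single preceding word, is what lets a chatbot produce fluent answers. It is also why those answers reflect whatever text the model learned from.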

Applications for ChatGPT

ChatGPT and other generative AI tools can be found in workplaces everywhere. Common use cases include:

- Education: language tutoring and personalized learning plans

- Customer service: automated scripts for case resolution and management

- Marketing: content ideation and creation

- Publishing: editing and content repurposing

- Academia: research and editing

Note that each of these applications requires a human, at some level, to create prompts, check the outputs, and put those outputs into action.

ChatGPT Benefits and Challenges

Generative AI technology has been hailed as a miracle for content production, idea generation, and task automation. There’s no doubt that it can free up teams to focus on their core competencies and not get bogged down in the minutiae of a typical workday.

But just as there are perks, the dangers shouldn’t be ignored.

Ethical Implications and Responsible AI Usage

AI is not without its shortcomings, and one of the top concerns is that it may be used in unethical ways.

Bias and Fairness

The content generated by ChatGPT and other tools is based on human language, and we know that human language can be deeply flawed in expression and perspective. Because of this, AI tools are not free from bias, and AI-generated content has been known to perpetuate stereotypes regarding race or gender.

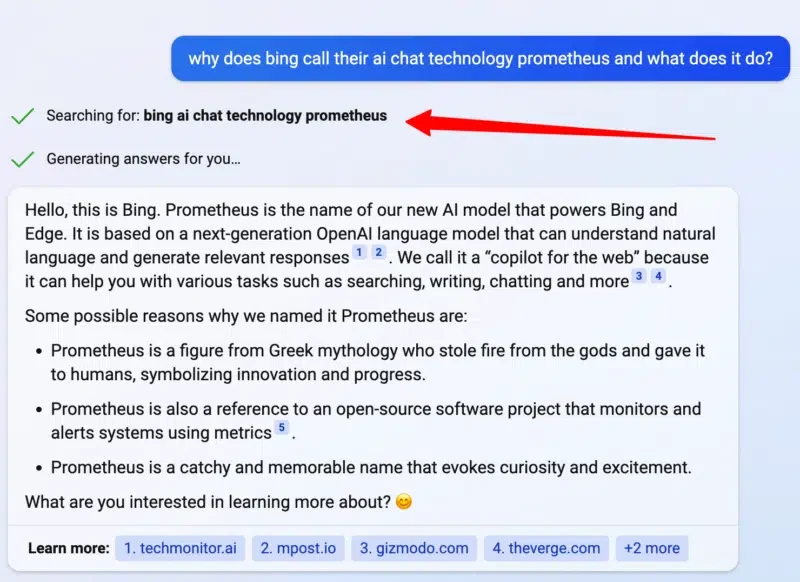

Generative AI has also been known to be terrible with citations (although Bing’s generative AI tool has shown promise with its sourcing). When generative AI fabricates facts, known as a “hallucination,” it puts your reputation as a source of truth at risk.

Rather than risk harm to your brand, treat everything generative AI produces as a starting point, to be examined for fairness and adherence to your own company mission and values.

Privacy and Data Security

Generative AI tools, like ChatGPT, can refine their models based on the prompts provided by users. Your employees may not realize that their prompts could become part of a student’s future term paper or a new company’s product design. Since your data use is likely governed by law and your own internal best practices, employees should understand how AI tools may break these data governance policies. Train them on the right ways to use AI, or restrict its use to certain approved environments.
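One practical habit to include in that training is stripping obvious personal details from a prompt before it goes to an AI tool. The sketch below is a minimal illustration, assuming that email addresses and US-style phone numbers are the sensitive items. The patterns and the `redact_prompt` name are hypothetical, and a real policy would define its own list of what counts as sensitive.

```python
import re

# Hypothetical patterns for illustration; they will not catch every format.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def redact_prompt(prompt):
    """Replace email addresses and US-style phone numbers with placeholders."""
    prompt = EMAIL.sub("[EMAIL]", prompt)
    prompt = PHONE.sub("[PHONE]", prompt)
    return prompt


print(redact_prompt("Email jane.doe@example.com or call 555-123-4567 about the renewal."))
```

Simple pattern matching like this misses plenty, such as names, account numbers, and confidential project details, so it supports employee judgment rather than replacing it.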

Transparency

You don’t have to understand all the technical details of AI to know that it’s important to get ahead of it. Being open about your AI goals and the challenges you face can help you achieve the outcomes you desire. Remember, AI is a quickly changing landscape that will soon also be fraught with legal challenges. As these cases make their way through the court system, you’ll want your company to be fully transparent about its policies. Document everything.

Addressing Workforce Impact

Generative AI technologies are already affecting how people work, which puts a new responsibility on employers to mitigate harm to their employees and to provide new opportunities, too.

Job Displacement

You can’t look at the news or your LinkedIn feed without seeing speculation about how AI will “replace the humans.” But what does this mean for you? While some tasks, like data entry or transcription, will be outsourced more and more to AI, the more likely scenario is a blending of AI and human processes.

For positions that may be eliminated by the new efficiencies AI creates, look to see if those employees can be reassigned to new positions within your company. If you don’t plan on making job cuts, communicate this early and often to minimize a culture of anxiety among your teams.

Upskilling and Training

One way to avoid job displacement and help your people meet the demands of the next decade is to train them in the latest AI technologies. Your upskilling initiatives can cover new ways to use AI in compliance with your internal AI policies. Employees who traditionally avoid new tech should be brought along and shown the benefits of how these new skills will make them better at their jobs and add value to their positions.

Collaboration with AI

AI is full of opportunities to enhance how we already work. From creating shortcuts to automating repetitive tasks, there are many ways to eliminate the most boring and redundant parts of a job. You may want to start with the tasks that employees have expressed dissatisfaction with, encouraging them to embrace AI for both idea generation and administrative duties.

Designing AI and ChatGPT Guidelines

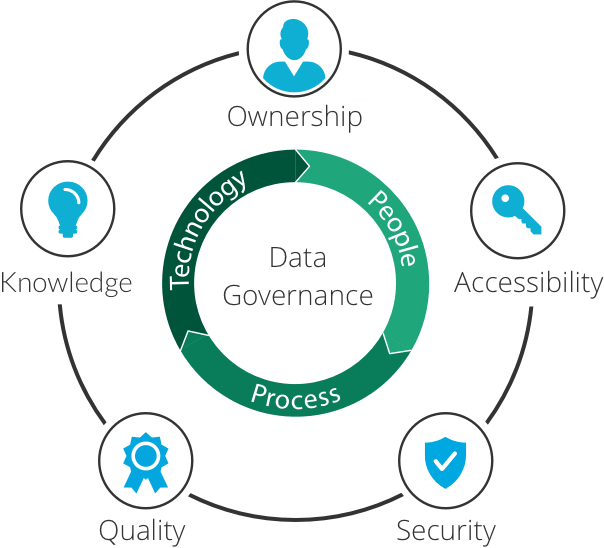

If you already have data governance policies in place, creating AI guidelines may feel familiar. It is, after all, a new way to manipulate and share data, and it can be treated with the same formality and intent. Best practices include:

- Create clear objectives: Define the main purpose of AI in your organization and set boundaries for how it may not be used. How will AI further your work and affect the world? Explore this early on and put it down on paper.

- Write explicit user guidelines: Leave nothing to interpretation. Employees should be able to see your guidelines and know if they can or can’t use ChatGPT for the task at hand. Guidelines should answer the questions of how they use it, where they use it, when they use it, and for what purpose. Share examples of approved and unapproved scenarios to help employees see them reflected in their daily work.

- Create decision-making frameworks: For any new task where AI hasn’t been used before, consult your framework. This is used by management and leadership to help inform what they allow.
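For teams that like to see rules written down as data, the guidelines above can be sketched as a simple lookup: each task is either approved or not approved, and anything not yet covered goes to the decision-making framework. This is only an illustration. The task names, notes, and the `check_task` function are hypothetical, and every organization would write its own rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    allowed: bool  # whether AI may be used for this task
    note: str      # the condition or reason employees should see


# Hypothetical example rules, keyed by a lowercase task description.
RULES = {
    "brainstorm blog topics": Rule(True, "Review output before publishing."),
    "summarize customer records": Rule(False, "Contains personal data."),
}


def check_task(task):
    """Return a plain-language verdict for a task, escalating unknown tasks."""
    rule = RULES.get(task.strip().lower())
    if rule is None:
        return "escalate: no rule yet, consult the decision-making framework"
    verdict = "approved" if rule.allowed else "not approved"
    return f"{verdict}: {rule.note}"


print(check_task("Brainstorm blog topics"))
print(check_task("Draft a legal contract"))
```

Keeping the rules in one place, with a reason attached to each, makes it easier to share approved and unapproved scenarios and to update them as your policy changes.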

Ensuring Legal and Regulatory Compliance

A slew of legal issues surrounds AI use, and courts everywhere will soon be handling cases where AI has been used questionably. Until case law has been established, it’s best to err on the side of caution, considering how AI is affected by these legal forces.

Labor Laws

The White House Office of Science and Technology Policy has already weighed in on the AI discussion with its Blueprint for an AI Bill of Rights, which addresses data privacy issues and how employee data is used in the workplace. Another aspect of AI that can affect employee rights is the use of screening and hiring tools, which run the risk of workplace discrimination.

Intellectual Property

Who owns the copyright on an AI-generated piece of art or content? The jury is still out (literally), and until these legal challenges are settled, there’s no guarantee you’ll own the work you make with AI tools. Entering your own works as prompts also puts your IP at risk.

Data Governance

AI works because it has access to large volumes of data, which can include personal data and the data your own teams put in. You should have protocols in place for how you collect, store, and use data as part of your existing data governance policies. Update these frequently in light of new AI developments to ensure you stay in compliance.

A Culture of Trust and Open Communication

The best way to ensure your employees are using AI the right way and that you aren’t putting your company data at risk is to be very transparent about AI’s risks and benefits. Share how improper AI use can affect everyone. By guiding your organization on the transition to AI, you can avoid some of the unpleasant surprises that come when you don’t address it at all. Be sure to provide ample opportunity for employee feedback, as well. Ask teams how they feel about AI, what’s working, and what’s not; by hearing their struggles, you can create more pointed education and awareness campaigns that acknowledge this new way of work and how it’s truly affecting your teams.

In conclusion, creating workplace policies in the age of AI and ChatGPT is a complex task that requires careful consideration of ethical, legal, and workforce implications. Organizations must strike a balance between leveraging AI’s potential and safeguarding their employees’ well-being. By designing a comprehensive company policy, fostering a culture of trust, and staying adaptive to emerging challenges, businesses can navigate the future with confidence and success.

Dig Deeper

It’s one thing to equip your employees with the latest tools to help their productivity and proficiency. It’s another to ensure they have access to much-needed information.

In highly pressured times, employees need access to knowledge to perform to the best of their ability. Learn how you can give them proactive assistance in the flow of work, within familiar SaaS applications.