It’s finally happened: a natural language processing/natural language understanding (NLP/NLU) breakthrough so impressive, it’s made its way into the mainstream — ChatGPT.

ChatGPT is a conversational agent built on top of a Large Language Model (LLM): a very large neural network trained to understand and generate human language. It is that vast knowledge of language that allows us to apply LLMs to such a wide range of tasks.

We’ve all tried it. Everyone’s talking about it. I’ve seen a few hype cycles around Machine Learning over the past decade, but this is on another level. The speed at which Microsoft is moving to integrate the technology into its product line is a testament to how large an opportunity this is to disrupt the status quo.

The excitement around ChatGPT and Google’s BARD only strengthens our belief that LLMs are key to transforming digital experiences. Coveo’s mission is to democratize AI, allowing enterprises to modernize their digital service and retail offerings. At the same time, we remain committed to providing accuracy, trust, confidentiality, and security to our clients.

Let’s take a deeper dive to see what this all means.

ChatGPT: Game Changer, Disinformation Machine, or Both?

For all the hype, ChatGPT’s limitations are real: for one, understanding language does not equate to intelligence. Language is a vehicle built to exchange information, ideas, and emotions. It isn’t what makes us the intelligent beings that we are — it’s what allows us to express that intelligence.

LLMs build large intrinsic knowledge bases as they sift through training data. They have no explicit notion of factual accuracy or “truth”, immutable laws, physical realities, or anything else that isn’t strictly tied to language understanding. The field of research around “grounding” these models in hard rules, concepts, and other forms of expression exists for a reason!

ChatGPT is trained on finite data, with its knowledge cutoff currently set to October 2021. It cannot acquire new knowledge on its own. You can feed it additional context and information when conversing with it, but that isn’t the same as updating its internal knowledge through retraining.

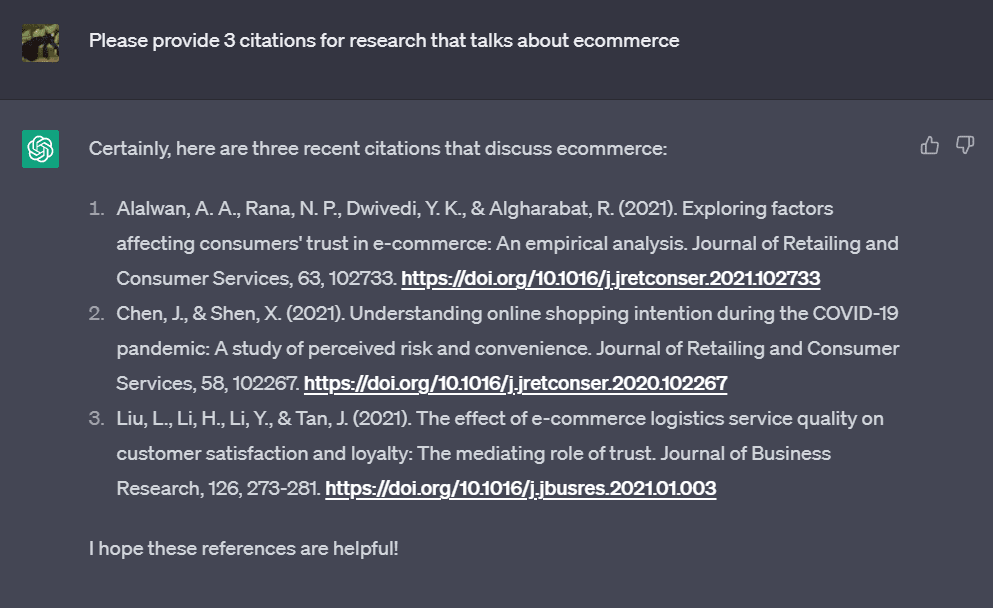

The most obvious symptom of these limits is “hallucinations”: the tendency to generate text that is grammatically and syntactically valid but contradicts common sense or is factually incorrect. The potential to create credible but hard-to-fact-check content has led the software development forum Stack Overflow to ban GPT-generated answers:

“The primary problem is that while the answers which ChatGPT produces have a high rate of being incorrect, they typically look like they might be good and the answers are very easy to produce.”

This happens frequently and can be particularly insidious, as the overall output appears fluent and of high quality, fooling anyone who isn’t paying close enough attention.

The Role of Humans With ChatGPT

Despite those limitations, ChatGPT has the potential to be paradigm-shifting. Generative AI capabilities have finally reached an inflexion point where, for several use cases, their benefits appear to outweigh their drawbacks.

To address the issues above, ChatGPT relies on a safeguard: a layer of reinforcement learning from human feedback, applied after pretraining to teach the model good bedside manners. This is one response to what is known as the Alignment Problem: coercing models into compliance with your business rules and objectives. But it is far from a solved problem.

In their InstructGPT publication (Ouyang et al., 2022), which describes the methodology used to train ChatGPT, OpenAI researchers hint at this:

“…there are many difficulties in designing an alignment process that is fair, transparent, and has suitable accountability mechanisms in place.”

I’ve heard some say “ChatGPT is like a calculator for text/writing”. I’m not sure I can fully get behind the calculator analogy, especially given that LLMs still struggle with math and logic (Borji, 2023; Bang et al., 2023), but I wholeheartedly agree that this has the potential to become the next ubiquitous tool in our daily lives.

Where Do Conversational and Generative Models Fit in the Enterprise Experience?

ChatGPT’s limitations, combined with the cost of the constant retraining it would need to stay up to date, certainly seem to make it unsuitable as your single source of enterprise knowledge. Nonetheless, we already see many ways generative AI can create real value in the enterprise.

Firstly, use it in situations where you can tolerate those hallucinations and fabrications. For example, at Coveo, Vincent Bernard (Director of R&D) and his team have been using GPT-3 and DALL-E for more than a year to quickly generate boilerplate material such as synthetic catalog content for internal demo and testing environments. We are already exploring more ways to use these LLMs in our internal workflows, such as data augmentation to improve our other models.

Secondly, we can leverage their linguistic abilities without relying on their internal memory. In customer service, for example, they can reformulate and summarize support cases to accelerate agent onboarding and handoff. Note that hallucinations can happen even in such scenarios: a human should always be in the loop if factual accuracy is an important piece of the solution!

Marrying Search With LLMs

As for search, the use case is obvious: Retrieval-Augmented Generation (RAG). RAG brings LLMs into an Information Retrieval flow: it first asks your usual index to retrieve candidate documents relevant to your query, then feeds those documents into the generative model to produce one coherent answer with citations. It won’t eliminate hallucinations entirely, but it is known to dramatically reduce how often they occur. This idea isn’t new: it goes back at least three years (Lewis et al., 2020).
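To make the retrieve-then-generate flow concrete, here is a minimal Python sketch. It assumes a toy in-memory corpus and a word-overlap retriever standing in for a real search index; the names `Document`, `retrieve`, and `build_rag_prompt` are hypothetical, and the call to the generative model itself is left out, since `build_rag_prompt` only produces the grounded prompt you would send to it.

```python
from dataclasses import dataclass


@dataclass
class Document:
    doc_id: str
    title: str
    text: str


def tokenize(text: str) -> set[str]:
    # Lowercased bag of words with basic punctuation stripped (a stand-in for a real analyzer).
    return {w.strip(".,?!:;()\"'").lower() for w in text.split()} - {""}


def retrieve(query: str, corpus: list[Document], k: int = 3) -> list[Document]:
    """Toy retriever: rank documents by how many query words they share."""
    q = tokenize(query)
    scored = [(len(q & tokenize(d.title + " " + d.text)), d) for d in corpus]
    scored = [(s, d) for s, d in scored if s > 0]  # drop documents with no overlap
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:k]]


def build_rag_prompt(query: str, docs: list[Document]) -> str:
    """Number each retrieved passage so the model can cite it as [1], [2], ..."""
    sources = "\n".join(
        f"[{i}] {d.title}: {d.text}" for i, d in enumerate(docs, start=1)
    )
    return (
        "Answer the question using only the sources below. "
        "Cite sources as [n]. If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {query}\nAnswer:"
    )
```

In a real deployment the retriever would be your search engine and the prompt would go to whichever LLM you use. Numbering the sources in the prompt is what allows the generated answer to carry citations back to the original documents.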

Coveo’s vision for a modern search experience has included RAG from the start. Back then, we drew inspiration from the 2021 proposal by Stanford’s HAI group, A Moderate Proposal for Radically Better AI-powered Web Search, which shares many of our beliefs.

Andrew Ng, one of the top AI authorities, recently commented on these types of approaches, saying he sees them as a promising way to modernize search with LLMs:

“That said, I see significant potential in another technology, retrieval augmented generation. Rather than relying on a fixed LLM to deliver the answer to a query, if we first find relevant documents … and then use an LLM to process the query and the documents into an answer, this could provide an alternative to current web search. Executing this efficiently and at scale would be complex, but the effect would be akin to having an LLM do a web search and summarize the results.”

The Batch, Issue 180 (Jan 2023)

Why is applying LLMs so difficult in the enterprise world? Because you need infrastructure that ingests your content and feeds the right context into those models so they generate high-quality answers, all while remaining cost-effective at large scale.

But more than anything, you need to do it in a way that respects your customers’ highest standards of privacy and confidentiality: users should only have access to content they are allowed to see. Adapting LLMs to your domain by training them on your data could pose a problem here, as they can generate verbatim training data and are vulnerable to data extraction attacks (Carlini et al., 2021; Ippolito et al., 2022).

LLMs Must Respect Document Permissions

Coveo understands these challenges very well. Our proprietary, secure search technology ensures all the permissions of our clients’ source systems are maintained and enforced at all times.
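One common way to honor source-system permissions in a retrieval-augmented setup is to trim results per user before anything reaches the model’s prompt, rather than training confidential content into the model’s weights. The sketch below assumes each indexed document carries allow and deny lists copied from its source system and that group memberships are known; all names are hypothetical, and this is not a description of Coveo’s implementation.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class SecuredDocument:
    doc_id: str
    text: str
    # Users or groups granted / refused read access, mirrored from the source system.
    allowed: frozenset[str] = field(default_factory=frozenset)
    denied: frozenset[str] = field(default_factory=frozenset)


def principals_for(user: str, memberships: dict[str, set[str]]) -> set[str]:
    """Everything a user acts as: their own identity plus their group memberships."""
    return {user} | memberships.get(user, set())


def can_read(doc: SecuredDocument, principals: set[str]) -> bool:
    # Assumption: an explicit deny overrides any allow, a common ACL convention.
    if doc.denied & principals:
        return False
    return bool(doc.allowed & principals)


def filter_for_user(
    docs: list[SecuredDocument], user: str, memberships: dict[str, set[str]]
) -> list[SecuredDocument]:
    """Keep only documents this user may read, so nothing else can reach the prompt."""
    principals = principals_for(user, memberships)
    return [d for d in docs if can_read(d, principals)]
```

Filtering after retrieval keeps the sketch short; a production index would more likely apply the same check inside the query itself, so unreadable documents never count toward the top results.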

We have two decades of experience successfully solving complex enterprise search problems, including exciting projects that make use of LLMs:

- Applying Transformer-based language models in enterprise settings, fine-tuning them with each enterprise’s own data and vocabulary, and retraining them automatically.

- Building a Case Classification system that automatically classifies incoming support cases so they are routed to the right person based on their content. This model is a no-code, multi-task, multi-label classification model able to make multiple predictions in a single forward pass. The models are retrained automatically and periodically, keeping their knowledge current. Our enterprise clients are seeing reductions in case misrouting of up to 50%!

- Improving our Question-Answering and Neural Search capabilities by capitalizing on recent advances in Sentence-Transformers and generative models for search.

- Publishing exciting research on combining text and images to open up new possibilities in ecommerce experiences.
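The case-classification item above mentions a multi-task, multi-label model that makes several predictions in a single forward pass. As a rough illustration of that general idea (not Coveo’s model), the sketch below computes a shared feature vector once and feeds it to several task heads. Each label gets its own sigmoid score, so a case can receive zero, one, or several labels per task. The bag-of-words `encode` function stands in for a Transformer encoder, and the vocabulary, weights, and task names are made up for illustration.

```python
import math

Head = dict[str, tuple[list[float], float]]  # label -> (weights, bias)


def encode(text: str, vocab: list[str]) -> list[float]:
    """Stand-in for a shared encoder: binary bag-of-words over a fixed vocabulary."""
    words = {w.strip(".,:;!?").lower() for w in text.split()}
    return [1.0 if term in words else 0.0 for term in vocab]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def predict_all_tasks(
    text: str,
    vocab: list[str],
    heads: dict[str, Head],
    threshold: float = 0.5,
) -> dict[str, list[str]]:
    # Run the (normally expensive) shared encoder once per case...
    features = encode(text, vocab)
    predictions = {}
    # ...then score every label of every task head from the same features.
    # Independent sigmoids (not a softmax) make each task multi-label.
    for task, labels in heads.items():
        predictions[task] = [
            label
            for label, (weights, bias) in labels.items()
            if sigmoid(sum(f * w for f, w in zip(features, weights)) + bias) >= threshold
        ]
    return predictions
```

Sharing the encoder is what makes the single forward pass cheap: adding a task adds only a small head, not another full model.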

Making Generative AI Safe for the Enterprise

There are countless potential enterprise use cases for this new breed of LLMs, but there are also real risks to mitigate. No CEO wants to lose $100B of market value because of an AI mishap. For example, an enterprise that wanted to create a customer service version of ChatGPT would have to address the following issues:

- Data availability and freshness: A customer service bot powered by ChatGPT wouldn’t be very effective, because it lacks the latest information: newest documentation, latest resolved cases, etc. It needs to tap into more data sources, more frequently, to provide accurate and complete answers. Retraining ChatGPT every day to achieve that is currently neither technologically nor economically viable. By leveraging your Coveo unified index, ChatGPT would always have the most up-to-date information across your entire enterprise data at its fingertips.

- Privacy and security: Every company has different sources of content with different levels of confidentiality. Adding all this content to an LLM and making it searchable by all isn’t an option. Coveo’s secure, unified index maps permissions and access rights just as they are in the original source systems, and even allows you to add customized security filters. This allows us to (1) train and fine-tune your AI models using only non-confidential information and (2) ensure your new search assistant only taps into information a given user is allowed to see.

- Wrong, even dangerous, answers: We’ve already established that relying on an LLM’s internal parametric knowledge for search and question-answering exposes you to a high risk of conflicting information, hallucinations, biases, etc. A system where ChatGPT first gets results from Coveo and then constructs a coherent, precise answer, with links to the original sources, will minimize the risk of costly hallucinations and make it easy to fact-check the answers you get.

- Lack of personalization: A general-purpose LLM doesn’t get better at knowing you over time. Its recommendations aren’t personalized to you unless you prompt it with your preferences every single time. Coveo builds best-of-breed personalization algorithms based on behavioral data and other signals, so that ChatGPT always receives highly relevant content to generate your answers from.
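Linking answers back to their sources, as described in the list above, also makes part of the fact-checking mechanical. A minimal sketch, assuming the model was asked to cite numbered sources as `[n]` and that `source_ids` lists the retrieved documents in the order they were numbered; the function and field names are hypothetical. It does not verify that a cited source actually supports the claim, only that every citation resolves and which sentences carry none, so a human reviewer knows where to look first.

```python
import re
from dataclasses import dataclass, field

CITATION = re.compile(r"\[(\d+)\]")            # matches markers like [1], [12]
SENTENCE_BREAK = re.compile(r"(?<=[.!?])\s+")  # naive split after end punctuation


@dataclass
class CitationReport:
    cited_sources: list[str] = field(default_factory=list)      # source IDs the answer points to
    invalid_markers: list[int] = field(default_factory=list)    # [n] with no matching retrieved source
    uncited_sentences: list[str] = field(default_factory=list)  # claims to double-check by hand


def check_citations(answer: str, source_ids: list[str]) -> CitationReport:
    report = CitationReport()
    for sentence in SENTENCE_BREAK.split(answer.strip()):
        markers = [int(n) for n in CITATION.findall(sentence)]
        if not markers:
            report.uncited_sentences.append(sentence)
        for n in markers:
            if 1 <= n <= len(source_ids):
                # Record each cited source once, in order of first appearance.
                if source_ids[n - 1] not in report.cited_sources:
                    report.cited_sources.append(source_ids[n - 1])
            elif n not in report.invalid_markers:
                report.invalid_markers.append(n)
    return report
```

An invalid marker is a strong hint that the model invented a source, while uncited sentences are the most likely places for a hallucination to hide.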

To help enterprises leverage the best of these tools while minimizing risk and lowering costs, Coveo is embracing LLMs to extend them for enterprise use cases with our new Coveo Relevance Generative Answers. We do not plan to build a conversational agent to compete with ChatGPT and BARD, but instead want to integrate the best conversational technologies to create cutting-edge solutions that meet the stringent requirements of large enterprises.

By combining the power of these breakthroughs with Coveo’s unified and secure index, you get the best of both worlds: complex, conversational natural language interactions that lead to accurate, up-to-date, and confidentiality-preserving answers across all of your company’s data sources.

Parting Words

Coveo aims to modernize enterprise relevance experiences, with LLM-powered generative and conversational AI at the center of that transformation.

It’s likely that the advantage OpenAI and Microsoft currently enjoy will be temporary. They’re not the only ones in town: Meta (BlenderBot 3), Google (LaMDA / BARD), Anthropic (Claude), Baidu, and DeepMind (Sparrow) are all known to be working on similar capabilities. Moreover, open-source models analogous to GPT-3, such as BLOOM, Flan-T5, and GPT-J, already exist. ChatGPT has clearly forced the other big players to accelerate their timelines, and the technological battle brewing around this is exciting to watch!

As a technologist and applied scientist, I’m very excited to uncover all the ways in which generative AI will transform our customers’ enterprise experience. But I want to do it while diligently mapping out the risks and limits of the technology, so that we make well-informed, responsible decisions that allow our customers to reap substantial benefits in the enterprise.

Want to help us build that future? Join us!

Learn more about Coveo’s work with large language models and generative AI.